Exactly, mate. Seems people like you and me are some of the few with enough common sense to see that the 3080 should have had more than 10GB. Thankfully, Nvidia agree and are releasing a 20GB version. Or get the 16GB 3070 if you want to save a few bucks.

A quick appeal to people using this argument. Putting aside for a second that these are so far just rumors and not official announcements, can we at least acknowledge that Nvidia are a for-profit organization, and that if there's market demand for something then it's in their best interest to fill that demand? The "fact" that Nvidia would make such a card is not an acknowledgement that such a vRAM config is necessary for gaming, any more than the existence of gold-plated HDMI connectors is an acknowledgement that gold-plated HDMI connectors are better (hint: they're not). The only thing it indicates is that there's a market to sell to.

I would expect a 16GB 3070 to get beaten by a 10GB 3080 in everything now. In a few years (which is really what this vRAM discussion is about) I bet the 10GB 3080 will still beat a 16GB 3070 in the vast majority of new games. There may be a game here or there that is coded in such a way that it gobbles up vRAM without hammering the GPU... those select few titles might put a 16GB 3070 ahead of a 3080, if and when the settings are carefully selected to get that outcome.

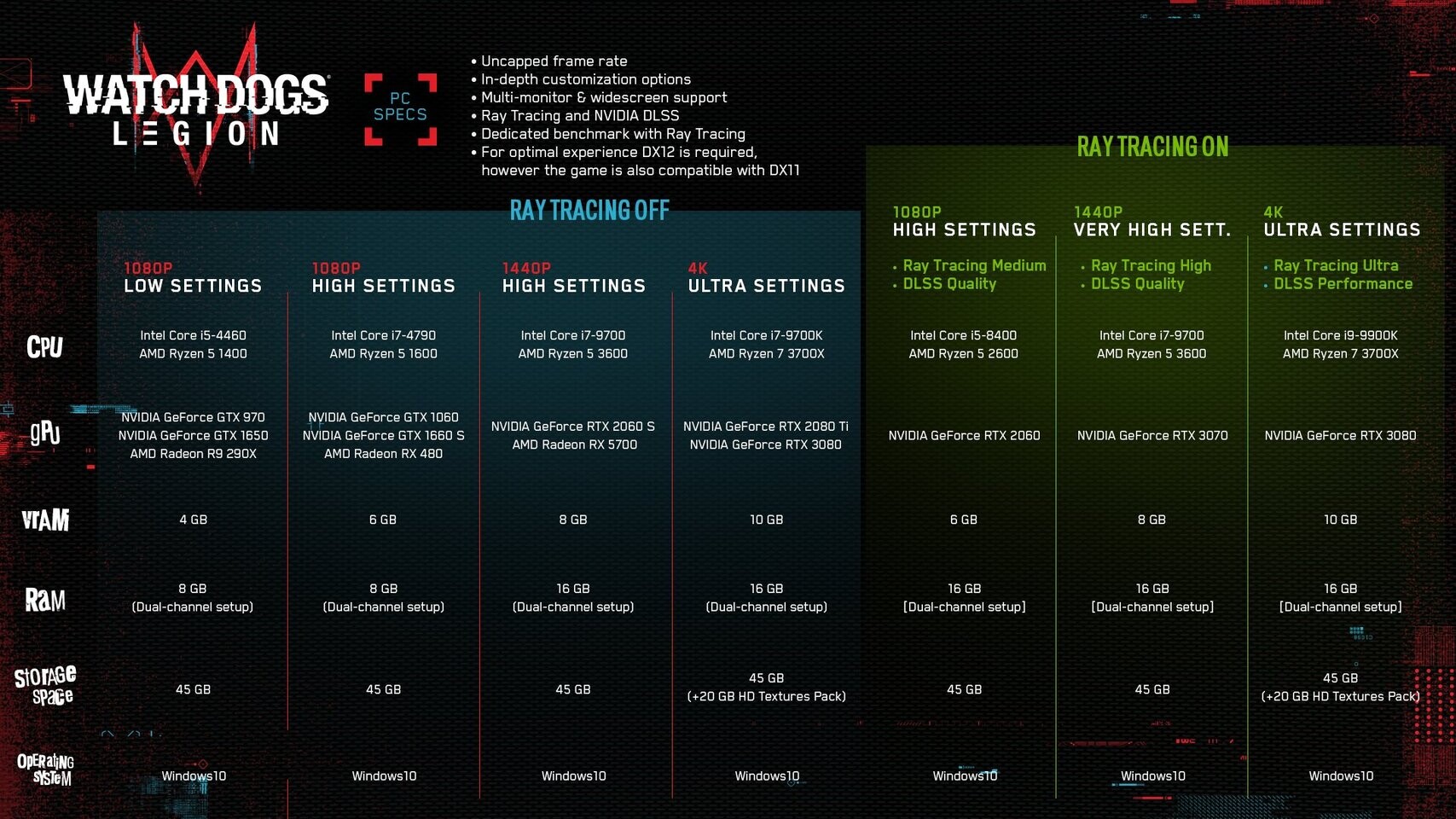

Basically what I said. Those things are, generally speaking, edge cases, and seriously, who cares about them? People care about the mainstream trends. You do not pile up assets in vRAM in a modern game engine without it impacting frame rate. An open world game isn't going to load an asset 300 miles away into vRAM for no good reason. If it's going into vRAM, it's because it's on some nearby game tile/zone that will likely be used in the next few seconds or minutes. In the Nvidia 8nm Ampere thread, when we discussed this issue, people brought up numerous examples of "games that use more than 10GB of vRAM" but didn't even check the performance charts to see that the very examples they posted are obliterated in terms of frame rate.

I actually expect my components to become obsolete over time. I also expect to have to turn down settings as my equipment ages and new games come out... and then, at some point, I expect to buy new hardware.

A natural progression felt by anyone who has been in this game for very long. The important part of this post is that as you turn down the settings of newer games on older hardware, the vRAM requirements drop. The argument that it's the FUTURE games that will be the vRAM problem is a bad argument for this reason. Future games, like all current games, will do the same thing: they will demand varying amounts of vRAM depending on the settings used.

I'm reminded of the big debate around CPUs a few years ago, when Crysis came out. One of the big things was that it was the game of the future, one you could keep coming back to again and again at every generation with new futuristic CPUs with their eleventy billion GHz clock speeds...

As it turned out, gaming didn't go that way. Clock speeds didn't go up by much, and instead CPUs went the route of multiple cores. Crysis never got to unlock its true potential because it banked on the wrong thing.

My point is there's no need to get too wound up about vRAM or anything, because no one knows what advances this generation will bring or what route devs will take in future. Maybe DLSS will become amazing and we'll all be able to render at 360p blown up to 8K, who knows.

This is a good point. One last thing to add is that Crysis is a game, and its developers took a gamble on how hardware would go. But if you look at the next gen consoles, they're making hardware bets that will define how games respond. And the bets that both new consoles are making are on very fast SSDs and on technology that allows direct SSD to vRAM (or vRAM equivalent) transfer. With Xbox and Windows this is Microsoft's DirectStorage, and Nvidia's implementation of this is RTX IO. If you want to make a bet on the future, bet on the massive investment made in these areas. That, and the fact that these console devs basically paired their modern GPUs with no more than 10GB of memory themselves.