
Not even Port Royal. The leaked benchmarks showed Port Royal. The 3080 is fine at 4k. DLSS has that covered.

VRAM Usage

You can see in my testing that having DLSS 2.0 enabled cuts down on the amount of VRAM used at 8K by a big chunk. The GeForce RTX 2080 Ti uses over 10GB of VRAM at 8K without DLSS 2.0 enabled, but under 8GB of VRAM when DLSS 2.0 is set to the Quality preset. https://www.tweaktown.com/articles/...hmarked-at-8k-dlss-gpu-cheat-codes/index.html

VRAM Usage

Something that has come up with other games tested in 8K is VRAM usage. For some, astronomical numbers that consume the 24 GB memory of a TITAN RTX are not unheard of. Once again, DLSS 2.0 triumphs on the RTX 2080 Ti. Using it in quality mode lowered VRAM consumption by nearly 2 GB. After enabling it, usage went from 10.3 GB down to 8.4 GB, well within the limits of the card’s 11 GB VRAM. By not hammering the card’s VRAM limits, DLSS 2.0 has allowed it to perform to its fullest potential. In the end, Mr. Garreffa has likened DLSS 2.0 to GPU cheat codes, and we can now easily see why. https://www.thefpsreview.com/2020/0...8k-with-dlss-2-0-using-a-geforce-rtx-2080-ti/

The issue with 8k is that games like Control are reported to use 20GB of vRAM. This means that the 6900XT with 16GB of vRAM may have issues keeping up. https://www.eurogamer.net/articles/digitalfoundry-2020-dlss-ultra-performance-analysis
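For anyone who wants to check the arithmetic, here is a rough sketch using only the figures quoted above (10.3 GB to 8.4 GB on the 2080 Ti, 20GB reported for Control at 8k, and the 11/16/24 GB cards). The function names are made up for illustration, and it assumes reported usage is a hard requirement, which is a simplification.

```python
# VRAM capacities as quoted in this post (GB).
CARD_VRAM_GB = {"RTX 2080 Ti": 11.0, "6900XT": 16.0, "TITAN RTX": 24.0}


def headroom_gb(card, usage_gb):
    """VRAM left over on a card; negative means the reported usage does not fit."""
    return CARD_VRAM_GB[card] - usage_gb


def dlss_saving(before_gb, after_gb):
    """Absolute (GB) and relative saving between two reported usage figures."""
    saved = before_gb - after_gb
    return saved, saved / before_gb


# 2080 Ti at 8K: 10.3 GB without DLSS, 8.4 GB with DLSS 2.0 Quality (quoted above).
saved, fraction = dlss_saving(10.3, 8.4)
print(f"DLSS Quality saves {saved:.1f} GB ({fraction:.0%})")

# Control at 8k, reported at 20GB: which of the quoted cards could hold it?
for card in CARD_VRAM_GB:
    print(f"{card}: {headroom_gb(card, 20.0):+.1f} GB headroom")
```

On those numbers, only the 24 GB card has room to spare for the reported 20GB figure, which is the point about the 6900XT.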

More Death Stranding

Graphics memory (VRAM) usage

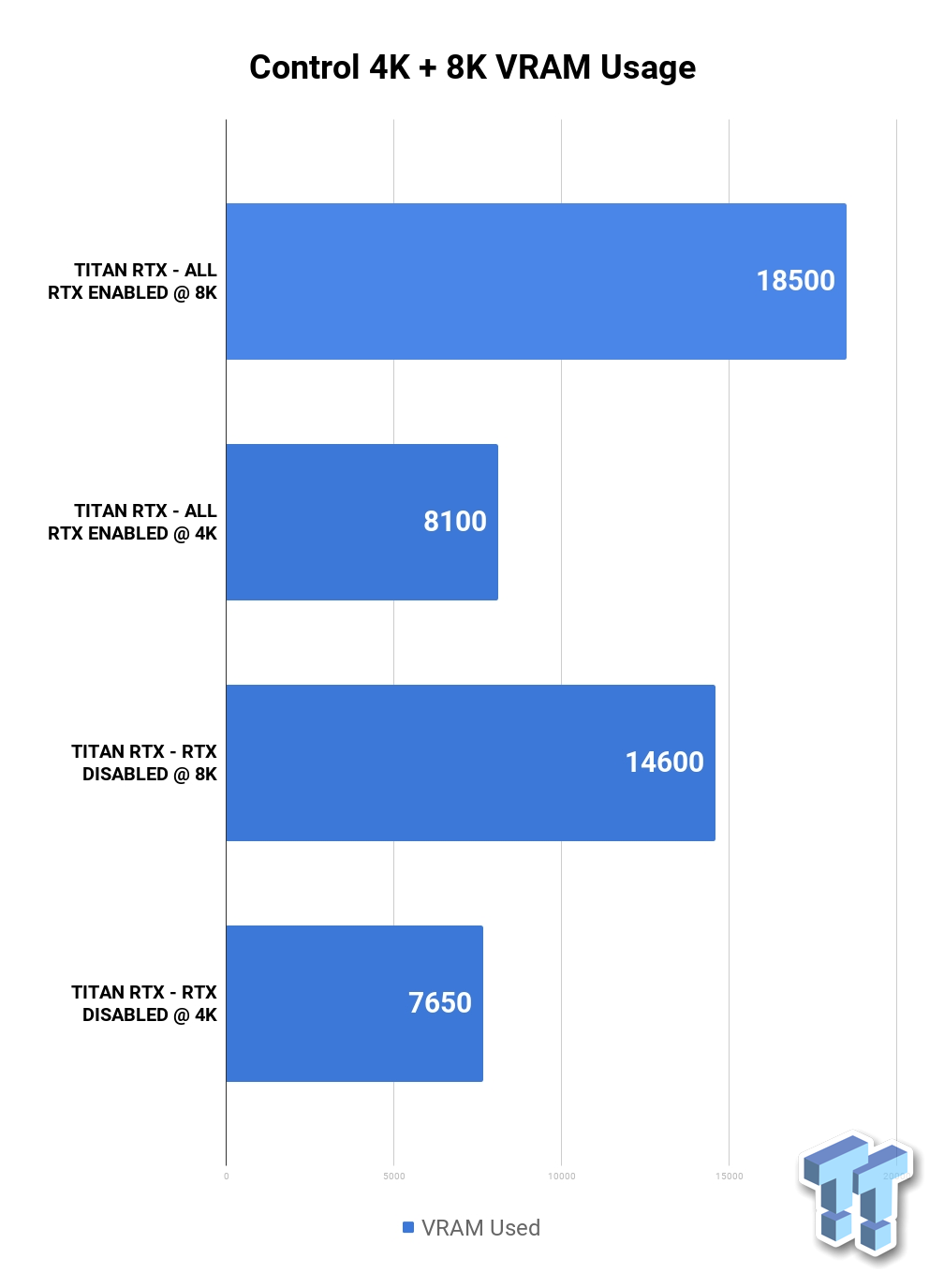

How much graphics memory does the game utilize versus your monitor resolution with different graphics cards and respective VRAM sizes? Well, let's have a look at the chart, which compares the three main tested resolutions. The listed MBs in the chart are the graphics memory utilization measured during our testing. Keep in mind that these are never absolute values. Graphics memory usage can fluctuate per game scene and activity in games. This game will consume graphics memory once you start to move around in-game; memory utilization is dynamic and can change at any time. Often, a denser and more complex scene (entering a scene with lots of buildings or vegetation, for example) results in higher utilization. With settings close to the max "High" quality, this game tries to stay at a 5~6 GB threshold. We noticed that 4GB cards, especially the Radeons, can have difficulties running the game at our settings.

With everything turned on at 8k, Control gets to 19GB of vRAM usage.

https://www.tweaktown.com/articles/9131/control-tested-8k-nvidia-titan-rtx-uses-18gb-vram/index.html

List of games at different resolutions including 4k. None going near 10GB.

https://www.tweaktown.com/tweakipedia/90/much-vram-need-1080p-1440p-4k-aa-enabled/index.html

We do not know how things will change with the next console generation. That's last gen. We are literally on the cusp of a new era for console gaming. The new consoles have double the memory compared to last generation.