
Home Server + 10G ethernet

- Thread starter Kei

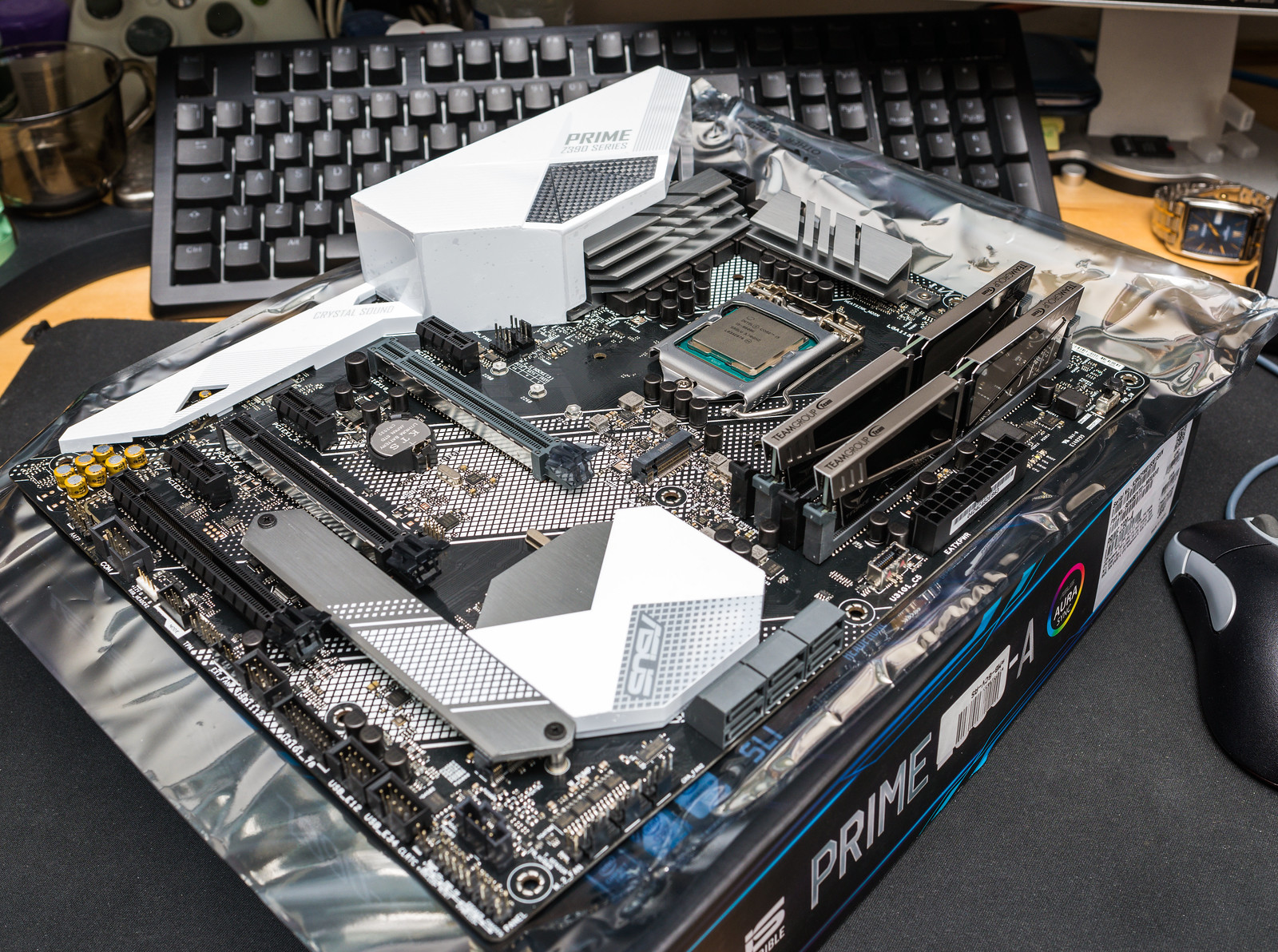

Started work on the bracket mods needed to make my original Megahalems (775/1366 only) cooler fit on 1151. Decided that the best approach would be to swipe a backplate from one of my other coolers and make it work with a mixture of mounting hardware: one Noctua backplate combined with some bolts with the same thread as the original Prolimatech parts, and the Noctua black plastic spacers filed down to match the thickness of the Prolimatech metal spacers. The only bolts I had with the correct thread and sufficient length had countersunk heads, so I found some suitable washers for them. The upper mounting plates then needed the 775 holes filing out towards the 1366 mounting holes, as 775 uses 72mm spacing, 115x is 75mm and 1366 is 80mm.
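A quick bit of geometry shows how far each 775 hole needs filing out. This is a sketch assuming the three patterns are concentric squares with the quoted centre-to-centre spacings, so every corner hole moves outward along the same diagonal; `SPACING_MM`, `corner_radius_mm` and `slot_length_mm` are illustrative names, not anything from the cooler's documentation.

```python
import math

# Centre-to-centre spacing (mm) of the square mounting-hole patterns quoted above.
SPACING_MM = {"LGA775": 72.0, "LGA115x": 75.0, "LGA1366": 80.0}


def corner_radius_mm(spacing_mm: float) -> float:
    """Distance from the socket centre to a corner hole of a square pattern.

    A corner hole sits at (s/2, s/2), so its distance is s/2 * sqrt(2) = s / sqrt(2).
    """
    return spacing_mm / math.sqrt(2)


def slot_length_mm(from_socket: str, to_socket: str) -> float:
    """How far a hole must be elongated along the diagonal to reach another pattern.

    Assumes concentric square patterns, so each corner hole moves outward
    along the same diagonal line from the socket centre.
    """
    return corner_radius_mm(SPACING_MM[to_socket]) - corner_radius_mm(SPACING_MM[from_socket])


if __name__ == "__main__":
    print(f"775 -> 115x: {slot_length_mm('LGA775', 'LGA115x'):.2f} mm per hole")
    print(f"775 -> 1366: {slot_length_mm('LGA775', 'LGA1366'):.2f} mm per hole")
```

Under those assumptions each hole only needs elongating by about 2.1mm (1.5mm in each axis), which is three-eighths of the roughly 5.7mm run between the 775 and 1366 holes.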

Backplate fitted.

Mounting plates fitted.

Test run with paste to see how the spread looked. IMO, pretty much as good as any stock mounting setup.

Built. Currently tested and working OK, although the CentOS install media is not playing ball. It locks up with a black screen after selecting "Install CentOS 7". The old openSUSE 42.3 install boots OK, but it has definitely got driver issues: the GUI is dog slow, suggesting no GPU acceleration, and again, no network interfaces are working.

In other news, the Mellanox ConnectX-3 that I picked up for the server is as dead as a doornail. I've tried it in 4 different systems in a variety of PCIe slots and it doesn't show up as a device in either Windows or Linux.

Had a hell of a job installing a new version of Linux. Some kind of issue with the integrated Intel GPU driver (i915) resulted in complete system freezes as soon as an install option was selected from the USB boot menu. After many hours of trying various kernel options via GRUB, I gave up and put the Quadro back in. That immediately fixed the installation issues. Once I had Fedora installed and set up using the Quadro, I shut it down, took the Quadro out, re-enabled the Intel iGPU and haven't had a single issue since.

So far I've managed to get everything except Samba, VNC and TFTP back to the way it was prior to the initial botched "upgrade". I've been so stumped by the Samba issue that I've had to post a question about it in the Linux section. At least I've now got Plex working properly again.

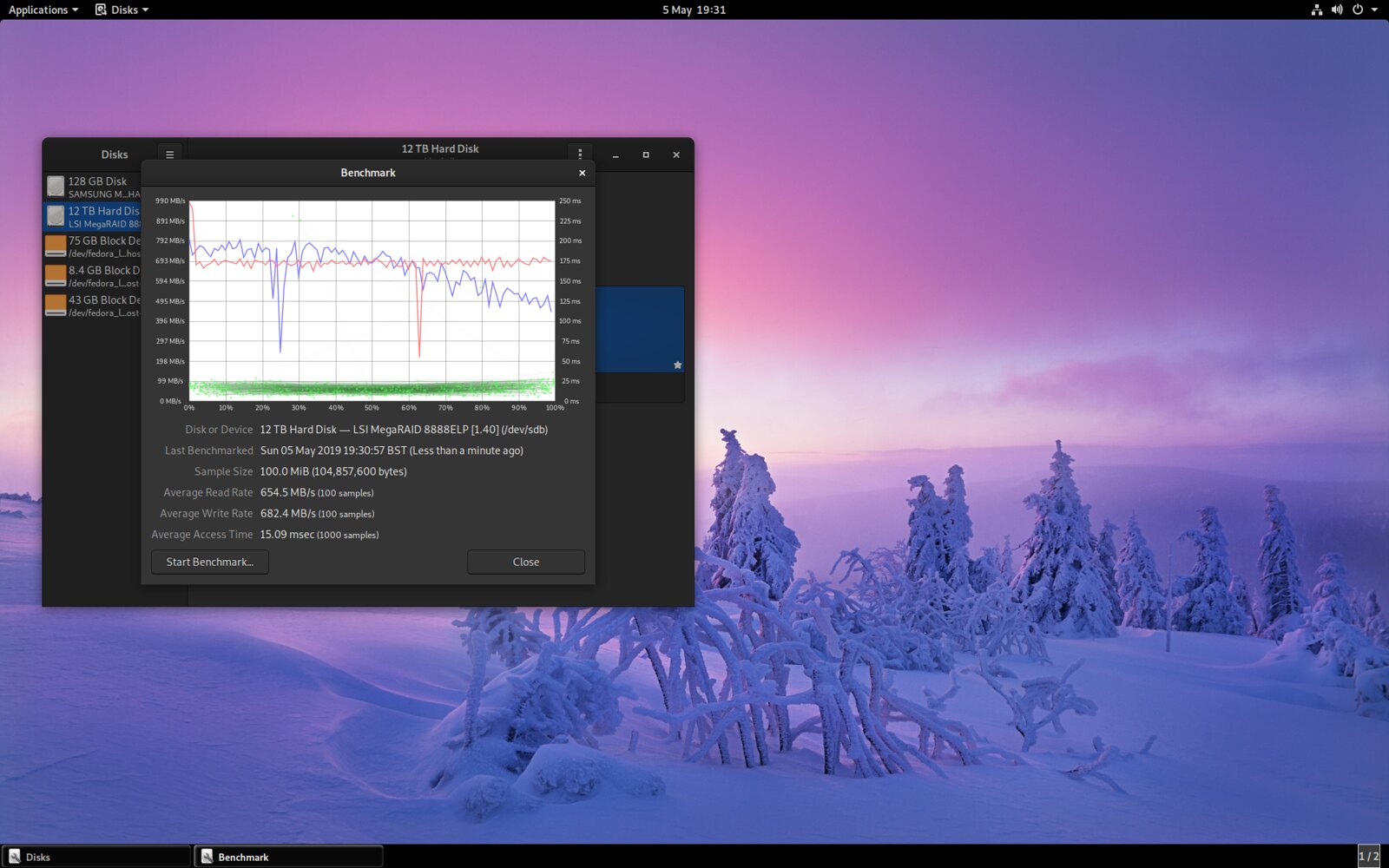

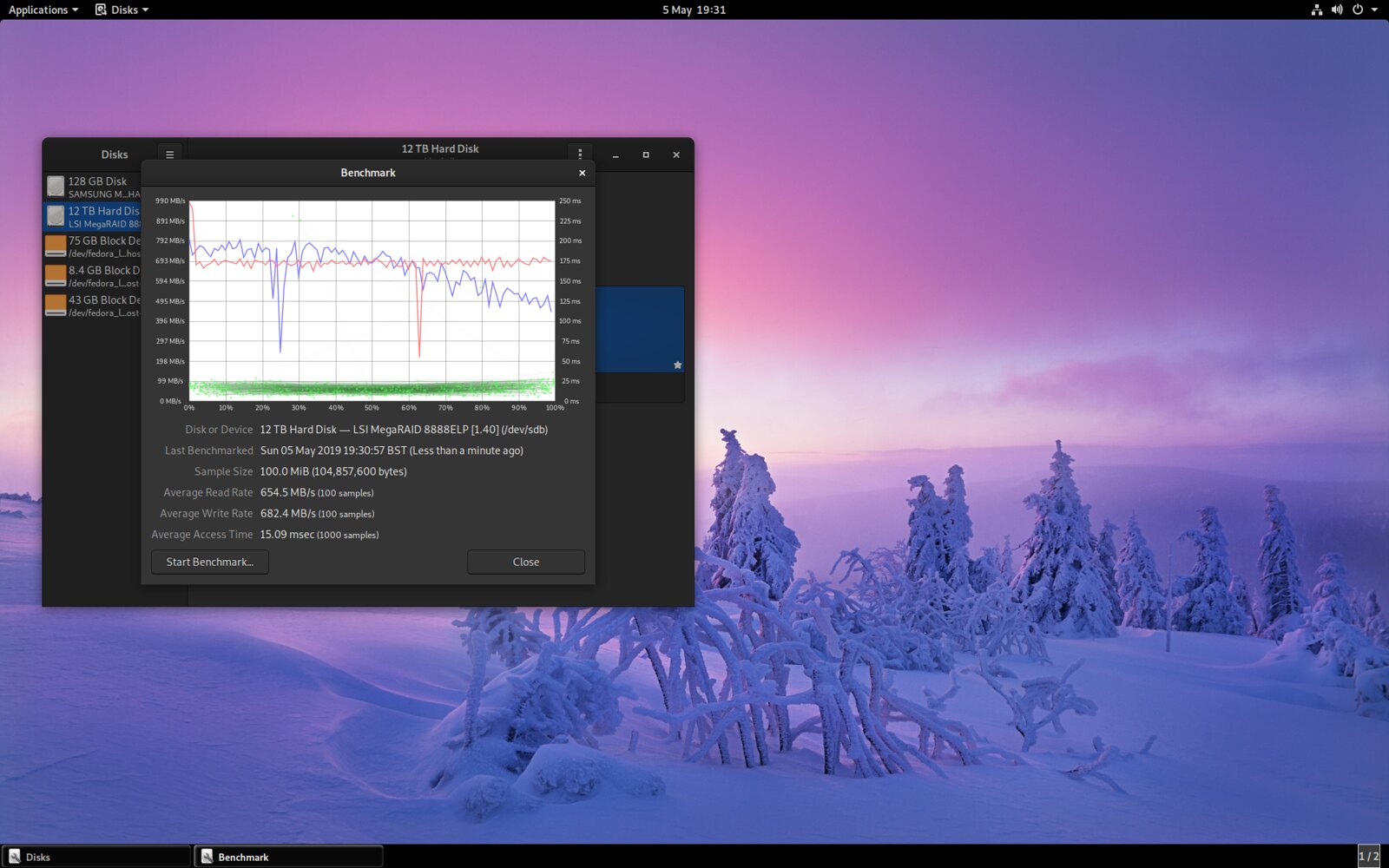

I also took the opportunity to run the disk benchmark on the array, as I've not run it since I built it nearly 5 years ago. Performance seems pretty decent considering the age of the RAID controller (2007).

Finally finished sorting out the OS. Had a hell of a job setting up vncserver due to PolicyKit issues, which made running GUI applications with elevated permissions difficult. The simple solution was to switch over to x0vncserver instead, which made a lot more sense as it means I'm not running a separate desktop. Still suffering from some Intel i915 driver issues. Weirdly, the problems returned when I changed screen. Turning off window compositing in Xfce stopped the shed load of errors, and it makes little difference to the usability of the OS.

Memory usage seems a tad high, but from what I can tell most of it is the LSI MegaRAID Storage Manager server (1.7GB being used by Java).

All I need to sort out now is finding a replacement SFP+ NIC for 10GbE and re-cabling to the loft and my room. I've been looking at Solarflare NICs, as Mellanox ConnectX-3s are still pretty expensive and having had one DOA makes me less keen on them. Another Intel X710 is a potential option, albeit the most expensive.

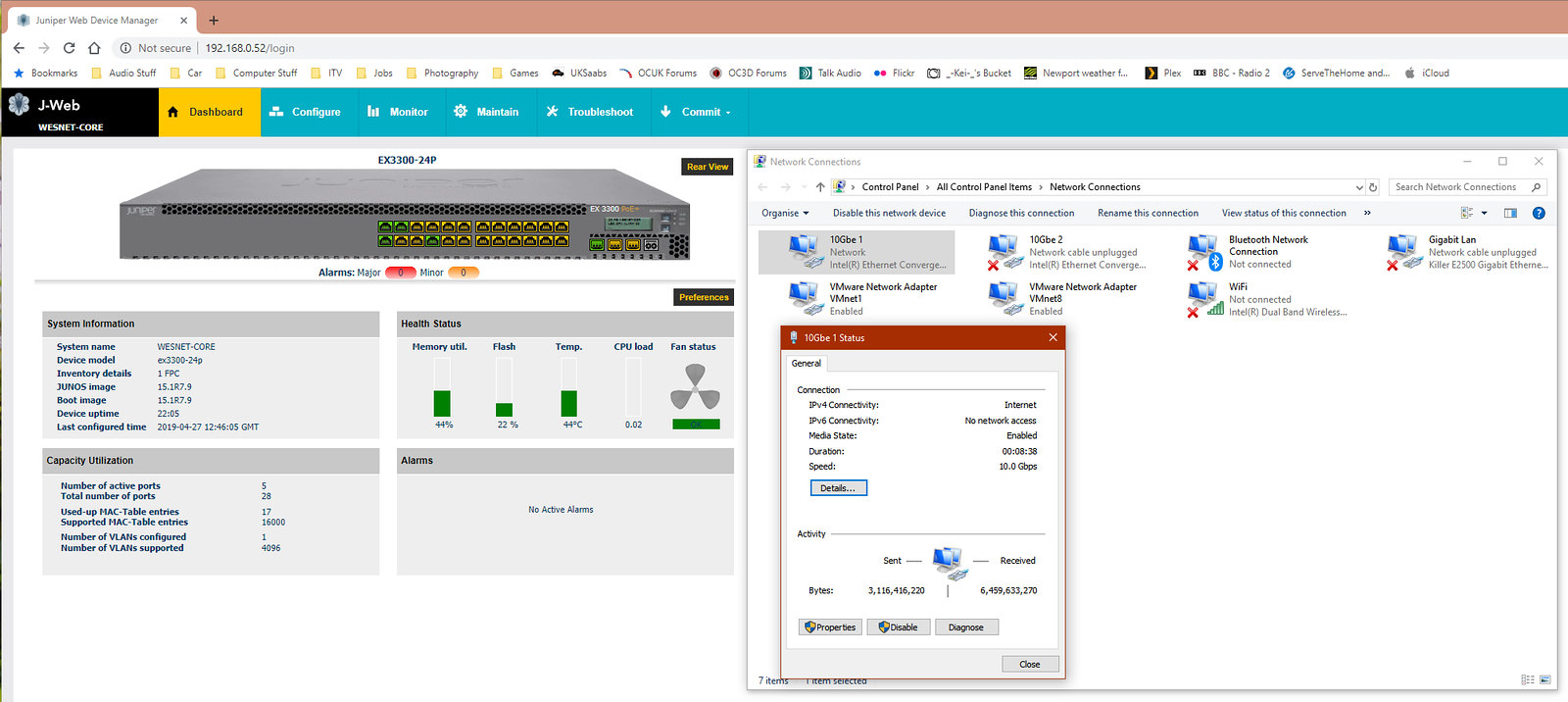

> Why are you using SFP+ rather than straight RJ45 cards? There are umpteen different SFP dongles and they need to be matched. Granted, this mainly applies to fibre links, but RJ45 keeps it simple and seems sufficient for your use case.

Simply put, the switch is the main reason why I went SFP+ instead of copper. I got this 24-port PoE+ / 4 SFP+ Juniper switch for a lot less than a 10GBASE-T Netgear or Ubiquiti.

The other issue is that my flood wiring is Cat 5, which was installed nearly 20 years ago when 100Mb was the norm. Fibre has the advantage that, in principle, it's less limited by speed and distance than copper, so it could potentially be reused in the distant future for a 40 or even 100Gb upgrade.

Replacement NIC bought. After some lengthy research, I decided to try Solarflare and picked up an SFN7122F card nice and cheap to replace the DOA Mellanox CX3.

Tested it on one of the Windows machines first and all signs were good, although the drivers would not install; they kept causing an NDIS SYSTEM_THREAD_EXCEPTION_NOT_HANDLED bluescreen. Not sure of the cause; possibly the firmware on the card could have done with being updated. But the card showed a link with my Intel SFP installed and Windows recognised the device, which was good enough for me. Swapped it back out for my X710 card, put it into the server and, surprisingly, it worked straight from the get-go: SFC9120 driver present and a 10Gb link. Yay. Now all I need to sort out is running some fibres.

Things have been running well so far. Ironed out the Vivaldi framework RAM issues, but the Plex DLNA server seems hell-bent on slowly chewing through RAM too.

Finally picked up a second OM3 fibre so I can do a test run at 10Gb. Performance in one direction looks pretty good, but not so good in the other direction.

Running my Threadripper workstation with the Intel X710 as the iperf server netted 7Gbps, with the 9600K/Solarflare NAS as the client. The other way around only got 3.5Gbps. So far I've set the Tx/Rx buffers on the Intel card to their maximums but haven't made any changes to the Solarflare, as I'm less familiar with Linux driver tweaks.
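To compare directions on a like-for-like basis, it can help to express each result as a fraction of the 10Gb line rate and to pull the figures straight from iperf's own report. This is a sketch assuming iperf3 (the post just says "iperf") run with `-J` for JSON output; for a TCP test iperf3 puts the receiver-side total under `end.sum_received.bits_per_second`, and its `-R` option reverses the direction without swapping client and server. The helper names are hypothetical.

```python
import json

LINK_GBPS = 10.0  # nominal 10GbE line rate


def received_gbps(iperf3_json: str) -> float:
    """Receiver-side TCP throughput from `iperf3 -J` output, in Gbit/s.

    Assumes a TCP test, where iperf3 reports totals under end.sum_received.
    """
    report = json.loads(iperf3_json)
    return report["end"]["sum_received"]["bits_per_second"] / 1e9


def link_utilisation(gbps: float, link_gbps: float = LINK_GBPS) -> float:
    """Fraction of the nominal line rate achieved."""
    return gbps / link_gbps


if __name__ == "__main__":
    # The two figures reported above, one per direction.
    for direction, gbps in [("X710 server, Solarflare client", 7.0),
                            ("reverse direction", 3.5)]:
        print(f"{direction}: {gbps} Gbps = {link_utilisation(gbps):.0%} of line rate")

    # Minimal stand-in for a real iperf3 JSON report (hypothetical number).
    sample = '{"end": {"sum_received": {"bits_per_second": 7.0e9}}}'
    print(f"parsed: {received_gbps(sample):.1f} Gbps")
```

On the numbers above that is roughly 70% of line rate one way and 35% the other.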

> Sorry to ask, but you've kind of done the upgrade that I am wanting to do.
>
> You've used Intel X710 cards in your machines apart from the server, which has a Solarflare. Any reason you didn't go with another Intel?
>
> I have my main PC, file server, ESXi box (with the VMs pulling from said file server) and backup machine all needing to be lashed together (thinking something MikroTik in the middle of them all), but have been really holding off as I don't know what hardware to put in or if it will work.
>
> Then I will run a 10Gb backbone from the MikroTik switch in the cave to my "core" switch in the attic to widen the pipe for futureproofing.
>
> So... Intel SFP+ cards and SFPs, what kind of pricing did they work out at?

I was seriously considering another Intel X710 for the server too, but it was 2.5x the cost of the Solarflare/Mellanox card. I tried the Mellanox ConnectX-3 first, but it was DOA and there were no others available at the time. Having got some info on Solarflare from STH, I took a punt which paid off, as it seems to perform very well. The X710s I bought cost £125 each and the Intel SFPs were £36 each. The Solarflare was £50 and the Avago transceivers were free, which is why I went that route. In hindsight, if I were doing this again, I'd probably go Solarflare for the whole lot, as I could have got some current-gen SFN8522 cards for £70-ish each, which would have been a bargain had I known at the time that they were good.
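The price difference above can be tallied per machine. This is a rough sketch using only the prices quoted in the reply (£125 per X710, £36 per Intel SFP, £50 for the SFN7122F, free Avago transceivers); how many transceivers each machine actually needs is an assumption you would plug in, and `NicOption` is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NicOption:
    name: str
    card_gbp: float          # price per card, as quoted in the post
    transceiver_gbp: float   # price per SFP+ transceiver (the Avago ones were free)

    def cost(self, transceivers: int = 1) -> float:
        """Total outlay for one card plus the given number of transceivers."""
        return self.card_gbp + transceivers * self.transceiver_gbp


INTEL = NicOption("Intel X710 + Intel SFP+", card_gbp=125, transceiver_gbp=36)
SOLARFLARE = NicOption("Solarflare SFN7122F + Avago", card_gbp=50, transceiver_gbp=0)

if __name__ == "__main__":
    print(f"card only: {INTEL.cost(0) / SOLARFLARE.cost(0):.1f}x")
    for n in (1, 2):
        print(f"{n} transceiver(s): £{INTEL.cost(n):.0f} vs £{SOLARFLARE.cost(n):.0f}")
```

The card-only ratio reproduces the 2.5x figure; once transceivers are included the gap widens to £161 vs £50 for a single link.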

The Windows 1903 update seems to have mostly resolved the issues I was seeing with iperf, though I'm still getting better speeds to the server than from it.

I bought some more OM3 fibres from FS along with some LC-LC links for the wall boxes I got from RS a few months back. The wall boxes should be pretty resilient, as the fibres will enter at the base of the boxes, keeping the connection virtually flush with the wall to avoid breaking them.

Since I now have sufficient fibres for a proper test run, it'd be rude not to. As a quick test to make sure all ports and fibres work OK, I connected the server with two links using the 3 Avago transceivers I have. All working great; the Juniper doesn't seem to mind the Avago transceiver I put in it.

Quick file transfer test from my PC to the server. This is off an NVMe SSD to avoid SATA bottlenecks. Normal transfers will sadly be a fair bit slower, but at least now I can have two PCs copying to the server and two TVs streaming from it without interruption.

Bought some new fans for the Juniper switch to try to quieten it down a bit without triggering a fan failure warning:

- 2x 40x40x28mm 12,500rpm San Ace fans instead of the 18,000rpm originals. The idle fan voltage is 4.5V, so something like a Noctua that maxes out at 5,000rpm would run too slowly at idle.
- A pack of Molex plug bodies and pins for fan headers (as the fans above come with bare ends)
- A pack of Molex ATX pins and sockets for making up custom PSU cables in the future
- A Molex pin extraction tool for the ATX pins
- Crimping dies for insulated and uninsulated terminals
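The reasoning about idle speed at 4.5V can be put into rough numbers. This sketch assumes the fans are rated at 12V and that speed scales linearly with voltage, which is only a ballpark approximation (real fans are not perfectly linear and have a minimum start voltage); the switch's actual fan-failure threshold isn't stated in the post, so the figures only show relative idle speeds.

```python
RATED_VOLTS = 12.0  # assumed nominal fan supply voltage
IDLE_VOLTS = 4.5    # idle fan voltage on the switch, as stated in the post


def estimated_rpm(max_rpm: float, volts: float, rated_volts: float = RATED_VOLTS) -> float:
    """Crude estimate: fan speed scales linearly with supply voltage.

    Real fans are not perfectly linear and may not start at all below a
    minimum voltage, so treat the result as a ballpark figure only.
    """
    return max_rpm * volts / rated_volts


if __name__ == "__main__":
    for label, max_rpm in [("original 18,000 rpm", 18_000),
                           ("San Ace 12,500 rpm", 12_500),
                           ("5,000 rpm Noctua-class", 5_000)]:
        print(f"{label}: ~{estimated_rpm(max_rpm, IDLE_VOLTS):,.0f} rpm at idle")
```

On that crude model the San Ace fans idle at roughly 4,700rpm against 6,750rpm for the originals, while a 5,000rpm fan would drop to under 1,900rpm.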

Fitted and now tested. All working ok, significantly quieter both at idle and full speed and no fan failure warnings. Best of all, it is still idling at 44 degrees.

Server CPU temps looking rather chilly at this time of year.

> Are the dies for the Molex Mini-Fit crimps? Been after a tool but the 'official' tools are super expensive.

Yes, the dies do fit the Mini-Fit crimps. The dies are for the more common crimping frames like a Paladin CrimpALL 8000. I have an Eclipse ergo lunar crimping frame (it used to be Pro'sKit). Sadly, the die isn't the best on the fan pins, as they are designed for 24-26AWG wire and the smallest section of the die is for 18-20AWG, which I hadn't realised at the time. Ideally, I need to find a die for 24-30AWG open barrel contacts. I think on the ATX-size pins with 18-20AWG cable, it'll do the job nicely.
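The size mismatch between the die and the fan-pin wire is easier to see as conductor diameters. This sketch uses the standard AWG definition, d = 0.127mm × 92^((36 − n)/39), for a solid conductor; stranded cable and the contact barrel itself are a bit larger, so treat it as a rough illustration rather than a die-selection rule.

```python
def awg_diameter_mm(gauge: int) -> float:
    """Nominal solid-conductor diameter for an AWG gauge.

    Standard AWG definition: d = 0.127 mm * 92 ** ((36 - n) / 39).
    """
    return 0.127 * 92 ** ((36 - gauge) / 39)


if __name__ == "__main__":
    for gauge in (18, 20, 24, 26, 30):
        print(f"{gauge} AWG: {awg_diameter_mm(gauge):.3f} mm")
```

Roughly every six gauges the diameter doubles, so 18AWG (about 1.02mm) is twice the diameter of 24AWG (about 0.51mm), which is why an 18-20AWG die section can't close down properly on fan-pin barrels.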

I got rather tired of buying a new crimping tool for every job, as it had already got to this:

- 1x bootlace ferrule crimper (16-26AWG)
- 1x bootlace ferrule crimper (4-16AWG)
- 1x insulated terminal crimper
- 1x coax crimper (RG58/59 etc.)
- 1x uninsulated lug crimper

Figured it out this morning: it looks like a disk has possibly died. It's simply disappeared, hence my not realising the issue. I have ordered two 2TB Ultrastars to replace the dead drive and provide a hot spare. Now I've got the joy of writing down all of the serial numbers and finding which drive has given up.

Can't moan too much as it's been running pretty much 24/7 for nearly 6 years
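Finding the missing drive boils down to a set difference: the serials written down from the drive labels, minus the serials the controller still reports. This is a minimal sketch assuming both lists are pasted in as plain text, one serial per line (the controller-side list would come from something like the MegaRAID Storage Manager's physical-drive view); the serials below are hypothetical. Note it only tells you which disk the controller can no longer see, not whether the disk or its backplane slot is at fault.

```python
def parse_serials(text: str) -> set[str]:
    """One serial per line; ignore blank lines, stray whitespace and case."""
    return {line.strip().upper() for line in text.splitlines() if line.strip()}


def missing_serials(recorded: str, reported: str) -> list[str]:
    """Serials written down from the drive labels but not seen by the controller."""
    return sorted(parse_serials(recorded) - parse_serials(reported))


if __name__ == "__main__":
    # Hypothetical serials, purely for illustration.
    on_labels = """
    SN-0001
    SN-0002
    SN-0003
    """
    seen_by_controller = """
    sn-0001
    SN-0003
    """
    print(missing_serials(on_labels, seen_by_controller))
```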

Replacement disk arrived plus an extra to run as a hot spare.

Rebuild started. I reckon the estimate is a tad optimistic. It'll still be going in 12 hours' time, let alone 6, probably longer than that.

Found the disk that has gone AWOL which is where I will put the hot spare once the rebuild finishes.

The rebuild completed successfully in around 7 hours, which was a nice surprise; I was really expecting it to take an eternity.
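The rebuild time translates into an average write rate. This sketch assumes the rebuild wrote the full 2TB of the replacement Ultrastar (if the array members are smaller, the real rate was lower) and uses decimal units as drive makers do; the 6-hour figure is the controller's estimate mentioned earlier.

```python
SECONDS_PER_HOUR = 3600


def average_rate_mb_s(capacity_tb: float, hours: float) -> float:
    """Average rebuild rate in MB/s (decimal units, as drive makers quote)."""
    return capacity_tb * 1e12 / (hours * SECONDS_PER_HOUR) / 1e6


def rebuild_hours(capacity_tb: float, rate_mb_s: float) -> float:
    """Time to write the whole disk at a steady rate."""
    return capacity_tb * 1e12 / (rate_mb_s * 1e6) / SECONDS_PER_HOUR


if __name__ == "__main__":
    print(f"actual (~7 h): {average_rate_mb_s(2, 7):.0f} MB/s")
    print(f"controller estimate (6 h): {average_rate_mb_s(2, 6):.0f} MB/s")
```

That works out at roughly 79MB/s sustained, not far off the roughly 93MB/s the 6-hour estimate implied.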

This morning I removed the old disk that I had assumed had failed and installed the replacement in that backplane slot in order to mark it as a hot spare. Strangely, I can't see the disk, which leads me to believe that the original disk may not have actually failed and it's simply that slot on the backplane that has failed. I'm going to test the disks to make sure. It's not the end of the world if the backplane has failed, as I'm not using all 12 bays yet. Replacing it may be a little difficult, as the two backplanes in the 5.25" bays were an extremely tight fit.

I decided that the old CM690 II case, with its limited drive support, was going to become a hurdle. The failed backplane didn't help matters, so I wanted to eliminate the backplanes from the build. I found that most cases that were large enough and supported the necessary number of disks had already been discontinued. The only standout options still available were the Corsair 750D and the Fractal Define XL. The drive cages for the Define were pretty much unobtainium, whereas the cages for the Corsair are still available. I prefer the Fractal side panel without the window, but c'est la vie.

New case bought. Went to order the drive cages from Corsair directly and, typically, they had gone out of stock.

I'm hoping to find a fan bracket that fits the PCIe slot brackets so I can get good airflow across the expansion cards, as the controller, expander and 10GbE card all get quite hot without direct airflow.

> That looks very nice, lovely case the 750D.
>
> I've got a couple of the Corsair 3-drive bracket/cages in my own system for similar reasons. The only problem I have with them is the pathetically bendy plastic Corsair have chosen for the tray; why they couldn't have spent a few pence more per unit I shall never understand!!!

They do seem a bit bendy. Compared to the CM690, the whole case feels a bit bendy, but I reckon that's down to the gauge of steel used in order to keep the weight and cost of production down.

I'm also looking at buying a second solid side panel to replace the window, as I really don't need or want the window, and it's much easier to drill steel than Plexiglas if I want to add some side panel fans to cool the expansion cards.

Three more hard drive cages purchased. All fitted snugly, giving me capacity for 18x 3.5" disks, 4x 2.5" disks and 3x 5.25" bays that can fit another 4x 3.5" drives with an adaptor. Not a lot of point in more than that, as the 550W supply doesn't have anywhere near enough connectors for it, and at full stretch I could only run 24 disks in an array without needing either an extra expander, a different RAID card or a bigger expander.

I'm trying to work out whether to replace the windowed side panel with a solid one by buying another metal panel from Corsair (pretty cheap at £9.99). I have no need or want for a window on a server, but could do with a side panel fan to blow cool air across the expansion cards, as they are all passively cooled server cards that get quite hot.

Started work on sorting out the server today. Odd place to start, but I looked at the keyboard first, as it was utterly filthy and the key action felt almost gritty. It is an antique PS/2 Acer mechanical from roughly 20 years ago. Having pulled all the keycaps off, I found the extent of the dirt. Most, if not all, of it originates from the loft, where the keyboard has spent the last decade. Incredibly, it still worked perfectly.

Having washed all of the keycaps and cleaned the body of the keyboard, I also cleaned all of the switches, washed them off, then applied some anti-corrosion lubricant (Kontakt 60, WL, then 61). The switches felt massively better.

No idea who made the switches, as they are branded as Acer. They feel similar to a Cherry MX brown/blue.

Finished. Sadly the yellowing of the keycaps couldn't be removed.

On to the case swap. The 750D is quite a bit bigger than the CM690. I've already put in a new 8TB drive, as that is going to be used as an rsync target for certain files from the main array.

Deconstructing the 690 was simple enough, but the last 6 years in the loft haven't been kind. That green cloth was clean to start with. All of the fans and filters are caked in a fine black dust.

The add-in cards are out. I really should upgrade the RAID controller, as it's the oldest item, but I can't be bothered finding a replacement.

All the drives are now transferred across. Just the PSU, motherboard and fans to put in.

> Looking good! I bet that weighs a ton!

It's not too bad. I estimate it's around 16-20kg. It's nowhere near as bad as my Enthoo Primo. That weighs a shade over 30kg and is so unwieldy that I can't carry it without help.

I got the board out quite quickly. This still looks pretty clean considering the black dust caked over most other parts.

Installed with all of the add-in cards. I had to swap the positions of the RAID controller and the 10GbE NIC, as the SAS cables wouldn't fit around the drive cage. Without changing the controller, I can't fit the 6th cage in (a good incentive to replace it).

Some of the wiring nightmare dealt with. Those Silverstone 4x SATA power extensions were a godsend and have made things far neater than I was expecting.

The SSD is installed and power is connected. I've been using wire ties instead of cable ties to test out routes before committing to single-use cable ties, as I have fitted cable ties and had to cut and replace them too many times. I've also realised that I forgot to connect the power connector to the last drive in the left stack, which I'll sort tomorrow. The SSD has no SATA data cable connected yet, as I need to find another cable. I also have the joy of fitting the rest of the fans and figuring out where to connect them.