I'm sure you have inside info.

YOU WASH YOUR MOUTH OUT!!!!

Let me know which brand I can hit on the 17th, Thursday at 2pm.

Please remember that any mention of competitors, hinting at competitors or offering to provide details of competitors will result in an account suspension. The full rules can be found under the 'Terms and Rules' link in the bottom right corner of your screen. Just don't mention competitors in any way, shape or form and you'll be OK.

Yup, PCIe support bracket. Also, no ventilation on the backplate like with the other companies.

RTX 3080 VS RTX 3090 4K ULTRA SETTINGS PERFORMANCE & BENCHMARK COMPARISON || TESTED IN 5 GAMES (the only one I have found so far)

(His typed overlay says 1080p, so I'm not sure if he made a typo or is actually running 1080p. Either way, it still made a difference; he must have a GOOD CPU, lol.)

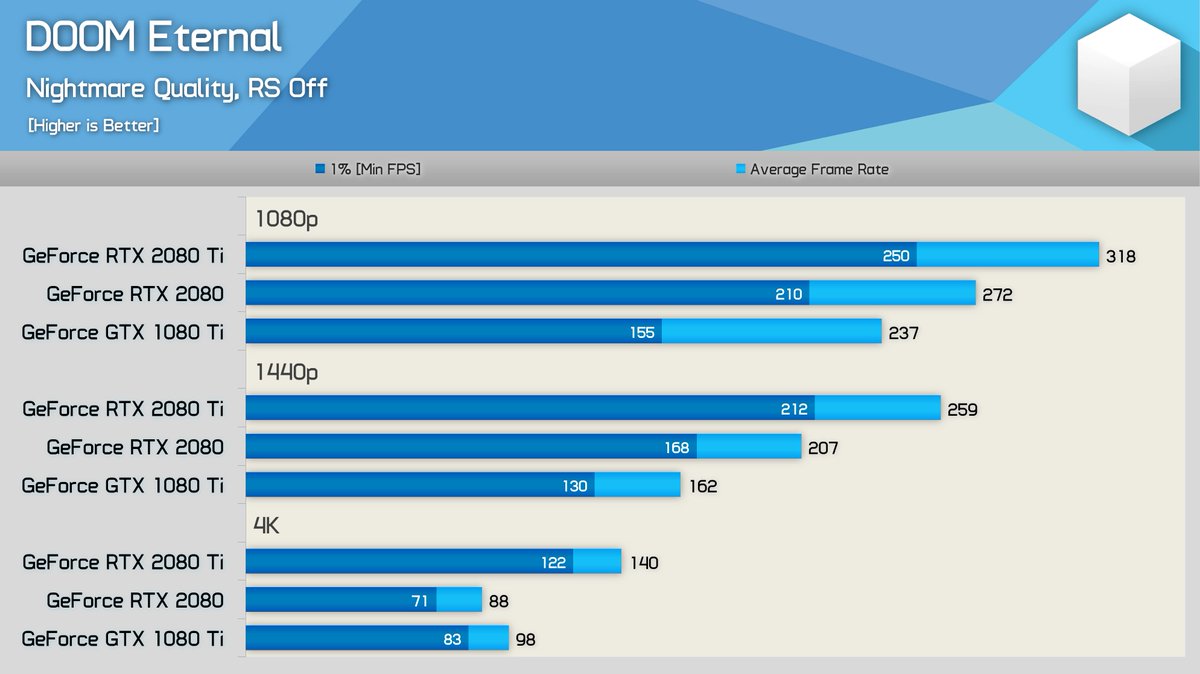

I think you're missing the point. It's not about the VRAM. The 3090 can be at most 20% faster than a 3080, and will likely be less, since even 20% would require perfect scaling of on-paper specs, which never happens. Let's look at the 2080 vs the 2080 Ti:

The 3080 Ti would just be a 3090 with a few SMs disabled, just like the 1080 Ti and 2080 Ti.

The 3080 has 68 SMs whilst the 3090 has 82 SMs.
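Those two SM counts are where the "at most 20%" figure in the thread comes from. A minimal sketch of the arithmetic, assuming the best case where performance scales perfectly (linearly) with SM count, which real games don't do; `max_speedup` is just an illustrative helper name:

```python
# SM counts quoted in the thread for the two cards.
RTX_3080_SMS = 68
RTX_3090_SMS = 82


def max_speedup(sms_fast: int, sms_slow: int) -> float:
    """Best-case speedup if performance scaled perfectly with SM count.

    Real games scale worse than this (clocks, memory bandwidth and CPU
    limits all get in the way), so treat the result as a ceiling.
    """
    return sms_fast / sms_slow


uplift = max_speedup(RTX_3090_SMS, RTX_3080_SMS) - 1
print(f"3090 over 3080, perfect scaling: +{uplift:.1%}")  # +20.6%
```

Anything measured in actual games should come in under that ceiling.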

For comparison:

- Titan RTX has 72 SMs and the 2080 Ti has 68 SMs.

- Titan Xp has 30 SMs and the 1080 Ti has 28 SMs.

So a 3080 Ti would be around 95% of the performance of the 3090.
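A rough sketch of the SM arithmetic behind that 95% guess, assuming a 3080 Ti would be cut down from the 3090 by about the same share as the 2080 Ti was from the Titan RTX and the 1080 Ti from the Titan Xp. It uses 30 SMs for the Titan Xp, which is its published count (the 28-SM Pascal part was the earlier Titan X). SM share is only a proxy; since performance usually scales less than linearly with SM count, a ~94% SM share landing near 95% of the performance is plausible. `PAIRS` and `sm_fraction` are illustrative names, not from any tool:

```python
# (full card, cut-down card, SMs on full card, SMs on cut-down card)
PAIRS = [
    ("Titan RTX", "2080 Ti", 72, 68),
    ("Titan Xp", "1080 Ti", 30, 28),
]

RTX_3090_SMS = 82  # SM count of the 3090, as quoted in the thread


def sm_fraction(full_sms: int, cut_sms: int) -> float:
    """Share of the full card's SMs that survive on the cut-down card."""
    return cut_sms / full_sms


for full, cut, full_sms, cut_sms in PAIRS:
    print(f"{cut} keeps {sm_fraction(full_sms, cut_sms):.1%} of the {full}'s SMs")

# Hypothetical 3080 Ti: apply the average historical cut to the 3090.
avg = sum(sm_fraction(f, c) for _, _, f, c in PAIRS) / len(PAIRS)
print(f"3080 Ti guess: ~{avg * RTX_3090_SMS:.0f} SMs ({avg:.0%} of a 3090 on paper)")
```

This prints 94.4% for the 2080 Ti and 93.3% for the 1080 Ti, then a guess of about 77 SMs (94% of a 3090 on paper).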

VRAM isn't a measure of performance. The 1070/1080 had 8GB, the 1080 Ti had 11GB and the Titan Xp had 12GB: not that different, yet completely different classes of card in price.

Hmm, interesting. I haven't seen these brackets on any of the others apart from the Galax, which doesn't seem to be listed anywhere in the UK.

I'd rather they just made it a proper 3-slot card than a 2 or 2.5-slot one that needs a bracket.

You know, I'm kinda hoping stocks are delayed. If AMD comes out with a GPU within spitting distance of the 3080 (which is being reported, but it's too early to tell), it will more than likely have 16GB of RAM and be 100-150 quid cheaper. I would jump on it simply because the market needs shaking up; everyone loves an underdog.

Sorry, I think I get it now: you're talking about cherry-picking results to show what you want?

Would anyone buy this... even if it were the last 3080 in stock?

True, I agree. I still think that no matter how many slots it is, it's the other end of the card that will sag.

It will probably use less power too, since AMD are on a much better process (TSMC 7nm, versus the Samsung 8nm process Nvidia uses for Ampere). Fingers crossed!

And when exactly is that likely to be?

But you can't do that if there's a 20GB 3080, which AIBs are privately saying there is.

Indeed. It's one of those situations where, once you see one thing wrong, you're suddenly on the lookout and notice lots of other things that were wrong all along but you never spotted. For me, the Vega reviews served as a great way to triage tech sites into those that had a clue and those that were pretending. Sadly, very few made the cut. At the end of the day there's no substitute for thinking for yourself. Someone's always gonna try to sell you (on) something, and likely not to your benefit.

Indeed. DF come across as just a paid-for preview outlet; I tend to just ignore them.

IIRC they did a preview for the Stadia and didn't even use the same displays in their latency test...

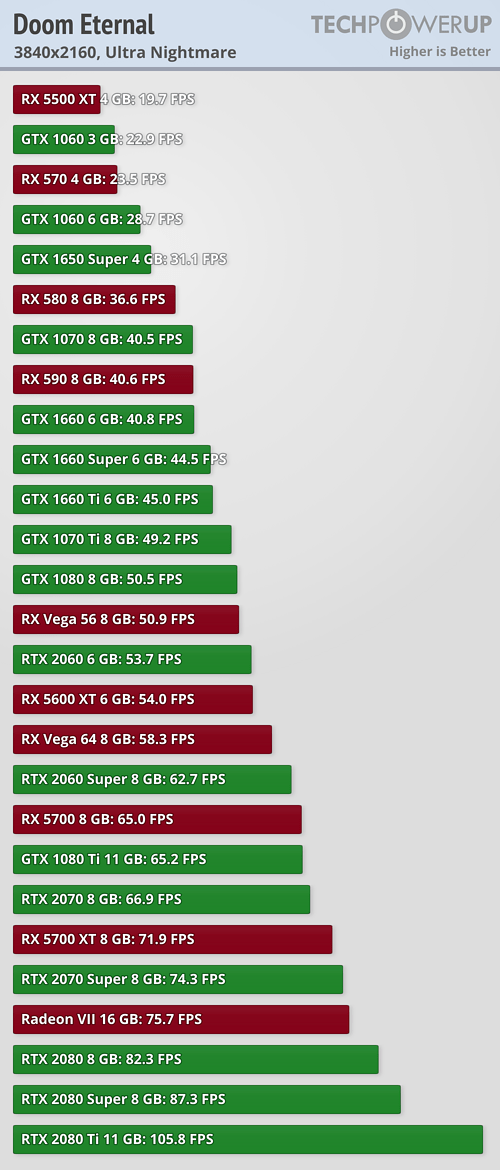

Really? This graph is ******. I've got a video of what happens when you run out of VRAM in Doom, after Steve's ****** video.

Really?...

I got quoted! Didn't say that, though.