It's as if people can't grasp something quite simple and fundamental. The way you get 4GB and 8GB versions of the same card is by using double-density memory chips, not more of them. You can't (sensibly) design a GPU that works with either 8 or 16 memory chips, e.g. one 290X with 8 memory chips for 4GB and another with 16 chips for 8GB. The number of memory connections has to be designed into the silicon itself.
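A rough back-of-the-envelope sketch of the same point, in Python. It assumes each GDDR5 chip takes a 32-bit slice of the memory bus and uses a 512-bit bus (the 290X's bus width) as the example. The function names are made up for illustration. Because the chip count falls straight out of the bus width, the only lever left for capacity is chip density.

```python
# Hypothetical sketch: memory capacity when the bus width is fixed in silicon.
# Assumption: each GDDR5 chip occupies a 32-bit slice of the memory bus.

CHIP_INTERFACE_BITS = 32


def chip_count(bus_width_bits: int) -> int:
    """Number of chips the memory controller can drive; fixed at design time."""
    if bus_width_bits % CHIP_INTERFACE_BITS != 0:
        raise ValueError("bus width must be a multiple of the chip interface width")
    return bus_width_bits // CHIP_INTERFACE_BITS


def capacity_gb(bus_width_bits: int, chip_density_gbit: int) -> float:
    """Total capacity in GB: fixed chip count times per-chip density (Gbit / 8 = GB)."""
    return chip_count(bus_width_bits) * chip_density_gbit / 8


if __name__ == "__main__":
    bus = 512  # assumed 512-bit bus, as on a 290X
    for density in (2, 4):
        print(f"{density} Gbit chips: {chip_count(bus)} chips -> {capacity_gb(bus, density):g} GB")
```

Run as-is, it prints 16 chips in both cases: 4 GB with 2 Gbit chips and 8 GB with 4 Gbit chips. Same chip count, double the density.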

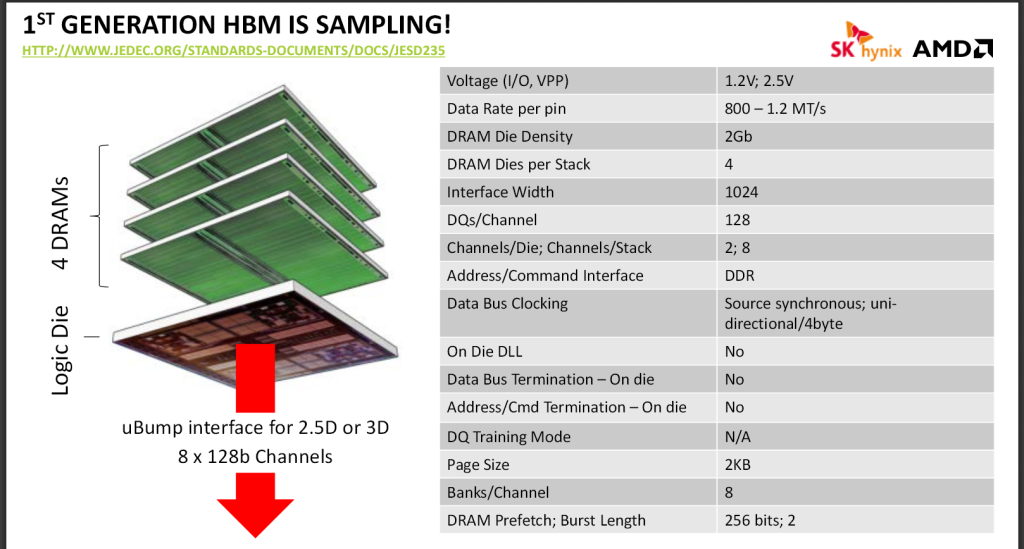

Thus you can't just make a GPU that can use either 4 or 8 stacks of HBM; it has to be designed up front for one or the other. Likewise, the 4GB maximum follows from the assumption that, for engineering reasons, the card will have only 4 stacks, combined with the supposed current maximum of 1GB per stack.
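The HBM arithmetic works the same way: capacity is the stack count (fixed by the GPU design) times the capacity of each stack. This sketch assumes each HBM stack has a 1024-bit interface and uses a 4096-bit, 4-stack design as the example. The 2GB-per-stack case is purely hypothetical, and the function names are illustrative.

```python
# Hypothetical sketch: HBM capacity = (stacks fixed by the GPU design) x (GB per stack).
# Assumptions: each HBM stack has a 1024-bit interface, and the GPU's total
# HBM interface width is decided when the silicon is designed.

HBM_STACK_INTERFACE_BITS = 1024


def stack_count(interface_bits: int) -> int:
    """Number of stacks the GPU can connect to; fixed once the die is designed."""
    if interface_bits % HBM_STACK_INTERFACE_BITS != 0:
        raise ValueError("interface width must be a whole number of stacks")
    return interface_bits // HBM_STACK_INTERFACE_BITS


def hbm_capacity_gb(interface_bits: int, gb_per_stack: int) -> int:
    """Total capacity: the fixed stack count times the capacity of each stack."""
    return stack_count(interface_bits) * gb_per_stack


if __name__ == "__main__":
    interface = 4096  # assumed 4-stack design (4 x 1024-bit)
    print(stack_count(interface), "stacks")
    print(hbm_capacity_gb(interface, 1), "GB with 1GB stacks")  # the supposed current maximum
    print(hbm_capacity_gb(interface, 2), "GB if 2GB stacks existed")  # hypothetical
```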

An AIB can't add 4 more stacks, and unless both 1GB and 2GB stacks are available, AMD CAN'T offer cards in two different capacities. Personally, I wouldn't want to pay significantly more for 8GB when I don't need it.
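To make the "two capacities need two stack sizes" point concrete, here is a small sketch. It assumes a fixed 4-stack design and that every stack on a card is the same size; the list of available stack sizes is just an input, not a claim about what Hynix actually sells. With the stack count locked, you get exactly one card capacity per stack size on offer.

```python
# Hypothetical sketch: card capacities possible when the stack count is fixed
# in silicon and the only variable is which stack sizes are available.
# Assumptions: a 4-stack GPU, and every stack on a card is the same size.

FIXED_STACK_COUNT = 4  # decided at design time; an AIB can't add stacks


def possible_capacities_gb(available_stack_sizes_gb):
    """Return the distinct card capacities, one per available stack size."""
    return sorted({FIXED_STACK_COUNT * size for size in available_stack_sizes_gb})


if __name__ == "__main__":
    print(possible_capacities_gb([1]))     # only 1GB stacks exist
    print(possible_capacities_gb([1, 2]))  # hypothetical: 1GB and 2GB stacks
```

With only 1GB stacks the result is a single 4GB option; adding a hypothetical 2GB stack is what opens up an 8GB card.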

It's not often that I agree with DM, but I do agree with him here.

I also assume that, unlike with previous graphics cards, the AIBs won't be able to change the memory configuration the way they can at the moment.

What I mean is that, as it stands, Gigabyte, for example, gets the GPU from AMD, the memory from Hynix, the PCB from PCBs R Us, etc. They then assemble it all however they want and sell it to us, be it 4GB or 8GB.

Now, a lot of the time the AIBs just go with AMD's reference design (yes, I know AMD gets Foxconn or some other Taiwanese company to make it for them), but will they now have to buy the GPU and memory as one packaged component? Or, though I can't see it happening, will they be assembling the GPU die, interposer and HBM stacks themselves?