Associate

- Joined

- 19 Oct 2009

- Posts

- 234

- Location

- Moray, Scotland

Last April I was looking for a new graphics card (well, add on another 5 years before that really, I think). I've held out thus far though, weathered the storm of the Bitcoin mining craze and waited till Black Friday. But alas, whilst tempted, the type of card I was looking at, the Nvidia GeForce GTX 1060 6 Gig, was and pretty much still is hovering around £230 when one adds up all the costs, including the postage to Scotland. The 3 Gig version didn't seem like a great leap memory wise from the 1 Gig card that I've been using for the past 9 years. It's been a great card and has been fine playing games like Battlefield 3, though Battlefield 4 not so much, at the 1080P which I'm still using.

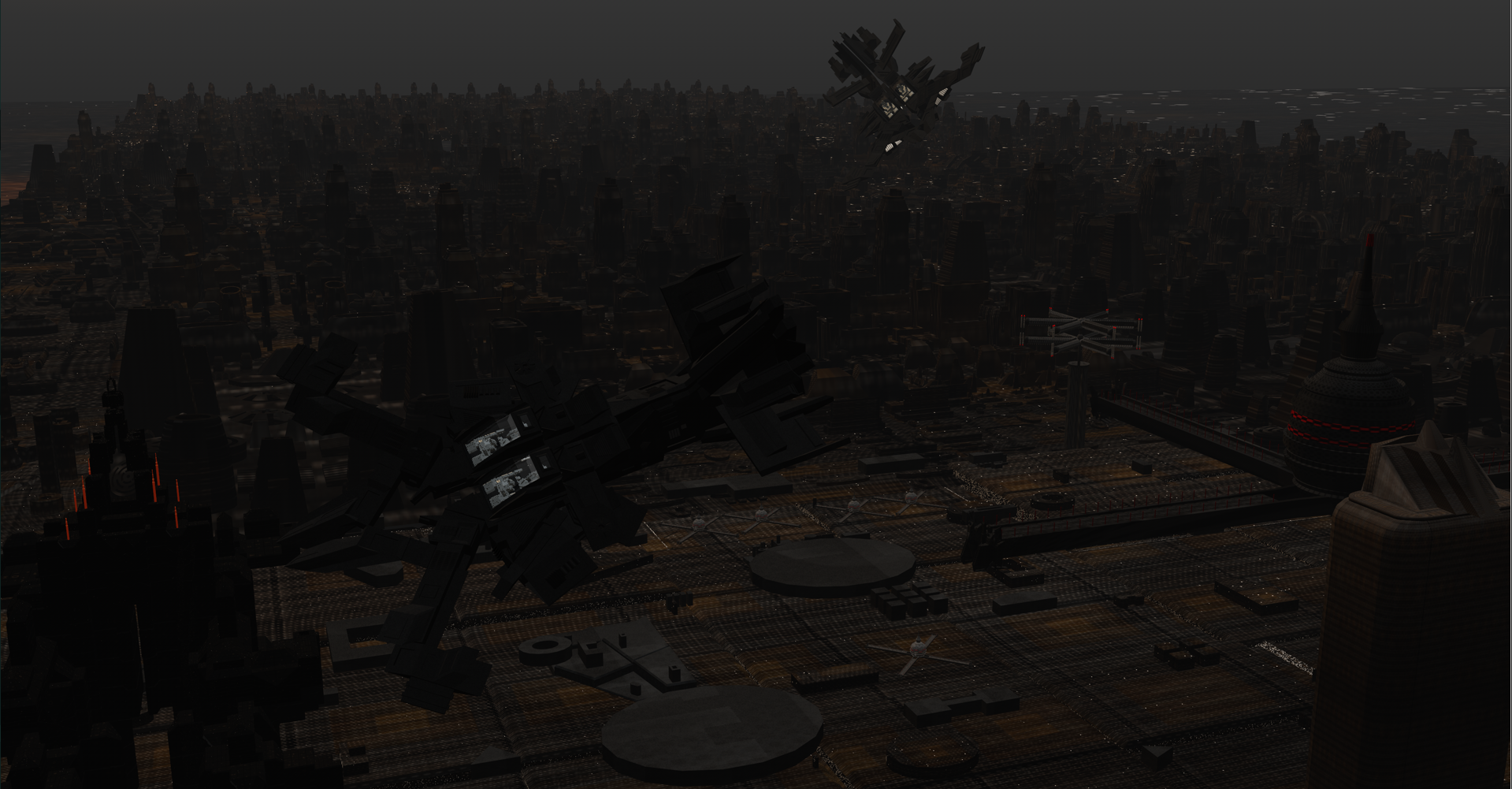

Gaming is really secondary to why I want to upgrade though. It's really because I've been working on big 3D rendering and graphics projects in Cinema 4D, such as futuristic cityscapes and flying spaceships. All this has also involved video editing with all manner of visual effects, but my current graphics card just can't cope with processing this movie, which is just over 10 minutes long: the output comes out corrupt, not properly rendering what is displayed in the viewport of Hitfilm. In Cinema 4D, displaying high poly objects is a bit of a nightmare, though rendering isn't so much of an issue with my quad-core i7 920 CPU.

For the past 19 years of using PCs (an AMD Athlon 750 with an ATI Rage 128 was my first), I have always stuck with Nvidia. I bought the last GeForce Gainward (the fastest AGP card, with 512MB of RAM) back in 2006 for my Athlon 64 3500 2.2 GHz PC, for like £240, which I consider pretty silly, even with about 3 and a half years out of it. The Palit 1 Gig cost just £95 by comparison, which I had expected to upgrade a few years later, but strangely.. that didn't happen.

Having always used what I've trusted, that being Nvidia, it's really not until the past few days that I've seriously considered switching to AMD (previously ATI, as I once knew it). This is really primarily down to the price / performance and value for money aspects. What does concern me though is the reliability and power consumption of AMD graphics cards when compared with Nvidia. I also produce music with Presonus Studio One 4 on my PC, so being relatively quiet is important at present.

I'm aware of 2 graphics cards as options here, one being the Sapphire Radeon RX 570 Pulse 8192MB and the other its Nitro sister, the latter having just a 54 MHz overclock, which doesn't seem much. Given what I do creatively and the amount of polygons / textured scenes and video editing I'm doing, when I look at Nvidia and their equivalent GTX 1060s with 3 Gig and 6 Gig, and the RX from AMD with 8 Gigs of built-in RAM, I'm curious about the application benefits the extra memory has, other than with games and higher resolution displays. Nvidia seems pretty mean, given the 40 - 60 price difference between similar spec cards out there.

I'm not looking to upgrade my CPU or move to a new PC etc... till, well.. it stops functioning as a working PC. It actually has 18 Gigs of RAM, but only Windows 10 (pre-release) and Linux Mint 18 are able to see all of it.

I think I'd also need a 6 pin PSU to 8 pin graphics card cable for these cards, as my current one for the Antec 750 Watt New Blue PSU only has a 6 pin.

My Setup. (Jan 2010 Build)

Coolermaster Scout Case.

Windows 7 64Bit Home Premium.

64 Bit Intel i7 920 CPU @ 3.57GHz (with a Zalman CNPS10X Extreme CPU Cooler)

Gigabyte EX58 UD5 Motherboard.

16 Gigs of DDR3 1600 Kingston Ram.

Nvidia GeForce Palit GTS 250 1 Gig Graphics Card. (9 years old and still kicking)

Antec 750 Watt New Blue PSU

Dual 1080P capable LED Monitors.

Western Digital 500Gig Hard Disk - 1 & 2 TB External Disks
