Soldato

- Joined: 8 Aug 2010
- Posts: 6,453
- Location: Oxfordshire

@necrontyr

Impressive. I particularly like "A dialectic in its truest form means you concede where concession is due, always. And work together to reach a consensus on a question/problem."

1. I currently think AMD's Cypress architecture is winning on most fronts of performance and power in the GPU market...

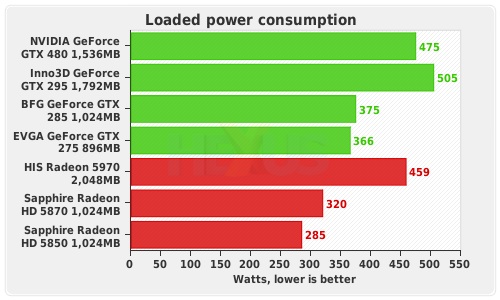

2. I believe its strongest benefit is the ability to deliver better FLOPS per watt than its main competitor at this time.

3. I don't think it has succeeded where Nvidia cannot; both companies can dominate if they play their cards right...

Below is my opinion on your points:

1) Correct

2) Correct

3) Correct if both companies play their cards right. However, to dominate you need a solid, economically viable foundation, which Fermi just doesn't have as it stands.

Also, I think it's important to acknowledge not just the issues affecting the architecture but also those affecting AMD and Nvidia as companies. The elephant in the room is that there will soon be no low-end market, and large revenue streams will be diverted into different hands.

I understand the approach.

i understand the approach.