ComputerBase has a new article on how much extra performance AMD and Nvidia have managed to get out of their drivers since the launch of the 6800XT and the 3080:

https://www.computerbase.de/2022-03/geforce-radeon-treiber-benchmark-test/

(https://www-computerbase-de.transla...uto&_x_tr_tl=en&_x_tr_hl=en-US&_x_tr_pto=wapp)

Some highlights:

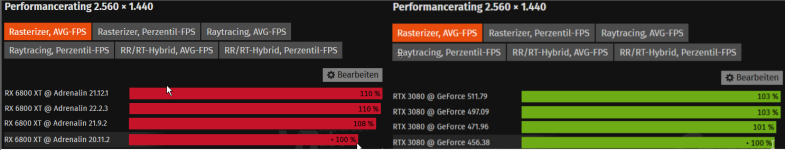

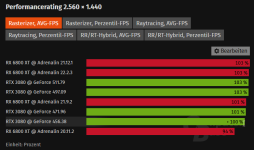

So for average FPS in raster, AMD managed to squeeze out another 10% compared to its launch drivers, versus 3% for Nvidia. Min FPS in raster (percentile FPS) shows the same.

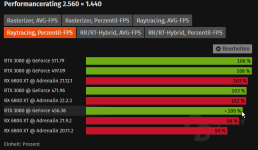

RT min FPS:

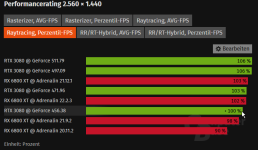

The figures are +15% for AMD and +6% for Nvidia.