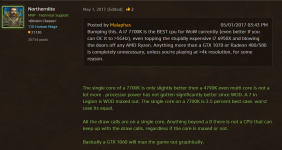

Caporegime

OFC, I am talking more about PC gaming.

Nvidia ATM seems to be forging ahead. Volta is out, and we are now starting to see Turing/Ampere prototypes. AMD, OTOH, is still finding it hard to match Nvidia on performance/watt in many segments, meaning they are losing out on laptops with regards to dGPUs. Vega got delayed and, if it were not for mining, would have been uneconomic to sell at RRP against cheaper-to-make Nvidia cards.

Rumours say Raja Koduri wasn't given as much funding as he wanted to make more gaming-specific GPUs, as R&D was pushed towards non-gaming segments.

It seems we won't see a new gaming GPU from AMD this year, meaning Nvidia will probably push ahead again. Navi is apparently only a midrange chip for release in 2019, which could mean a high-end chip only in 2020. Intel is also entering the market in 2020, meaning more competition.

So, what do you guys/gals think: will this turn out like after Bulldozer was released and Intel was reigning supreme, where competitors do the minimum to get sales and prices start to increase? Or do you think AMD might be able to pull something out of the bag (like they did with Ryzen)?
