I've been wanting to do end-to-end latency measurements for a long time, since it's easy to do. All it requires is one measurable signal when the input happens, and another when the monitor responds to that input.

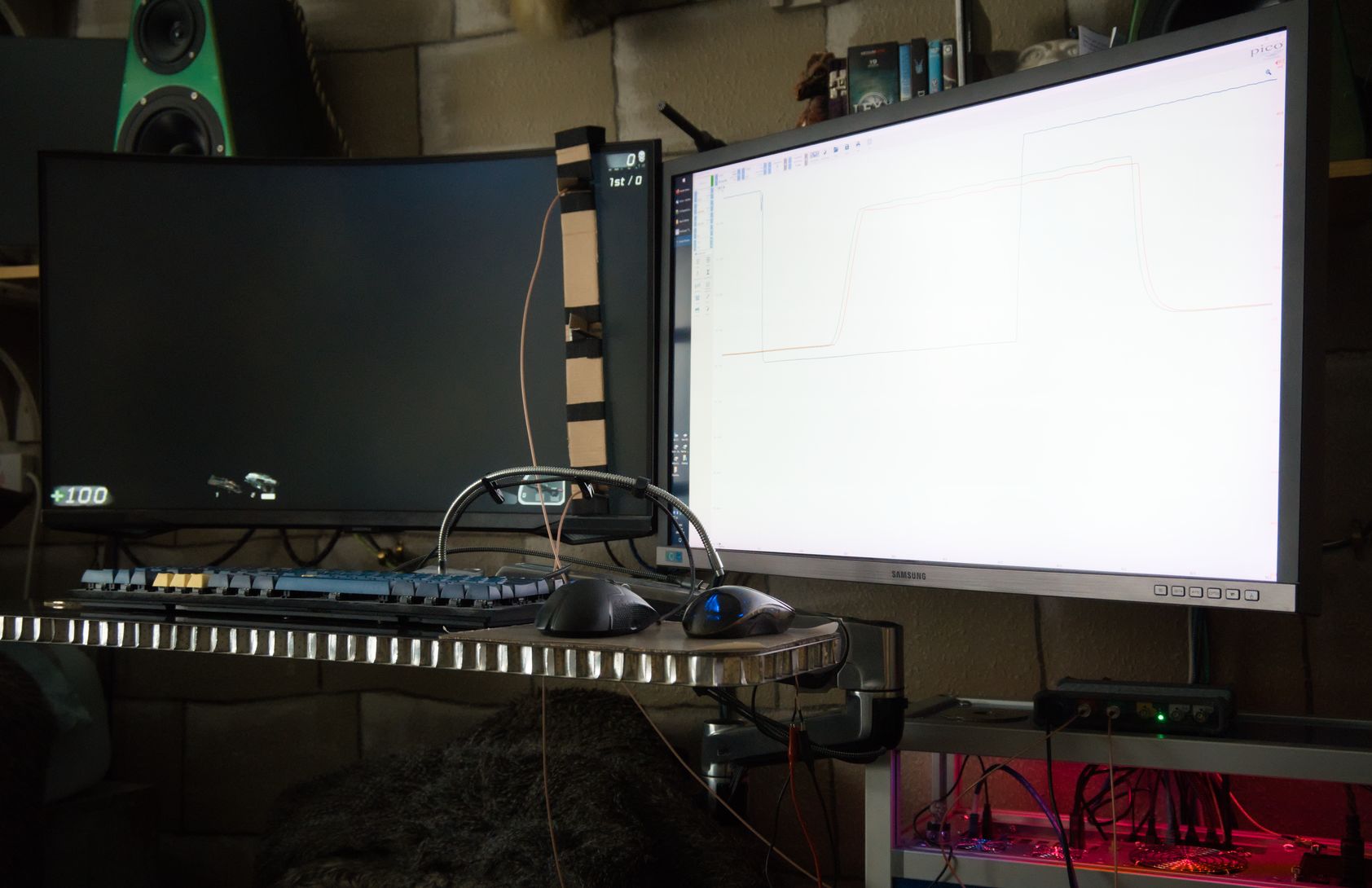

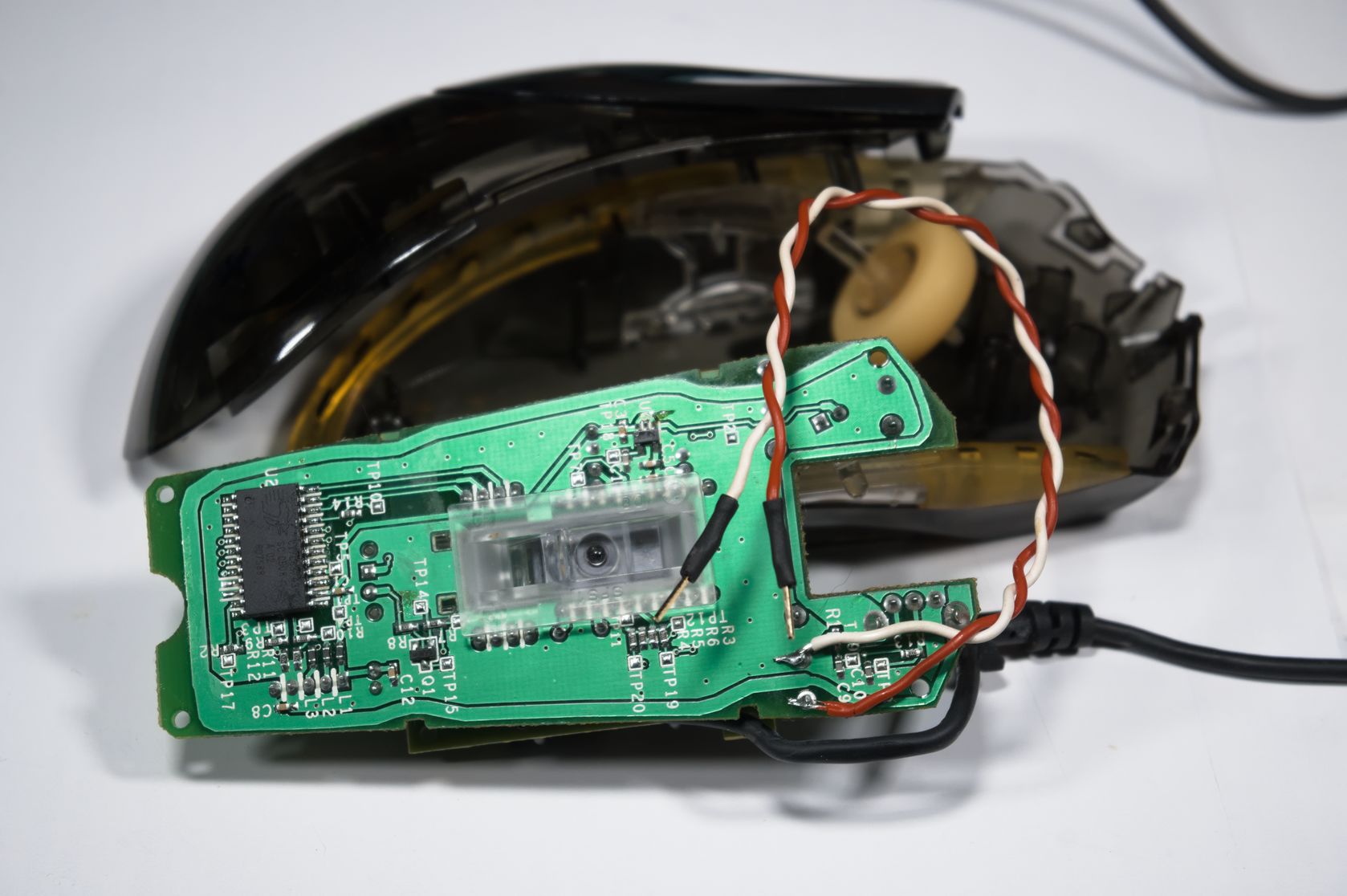

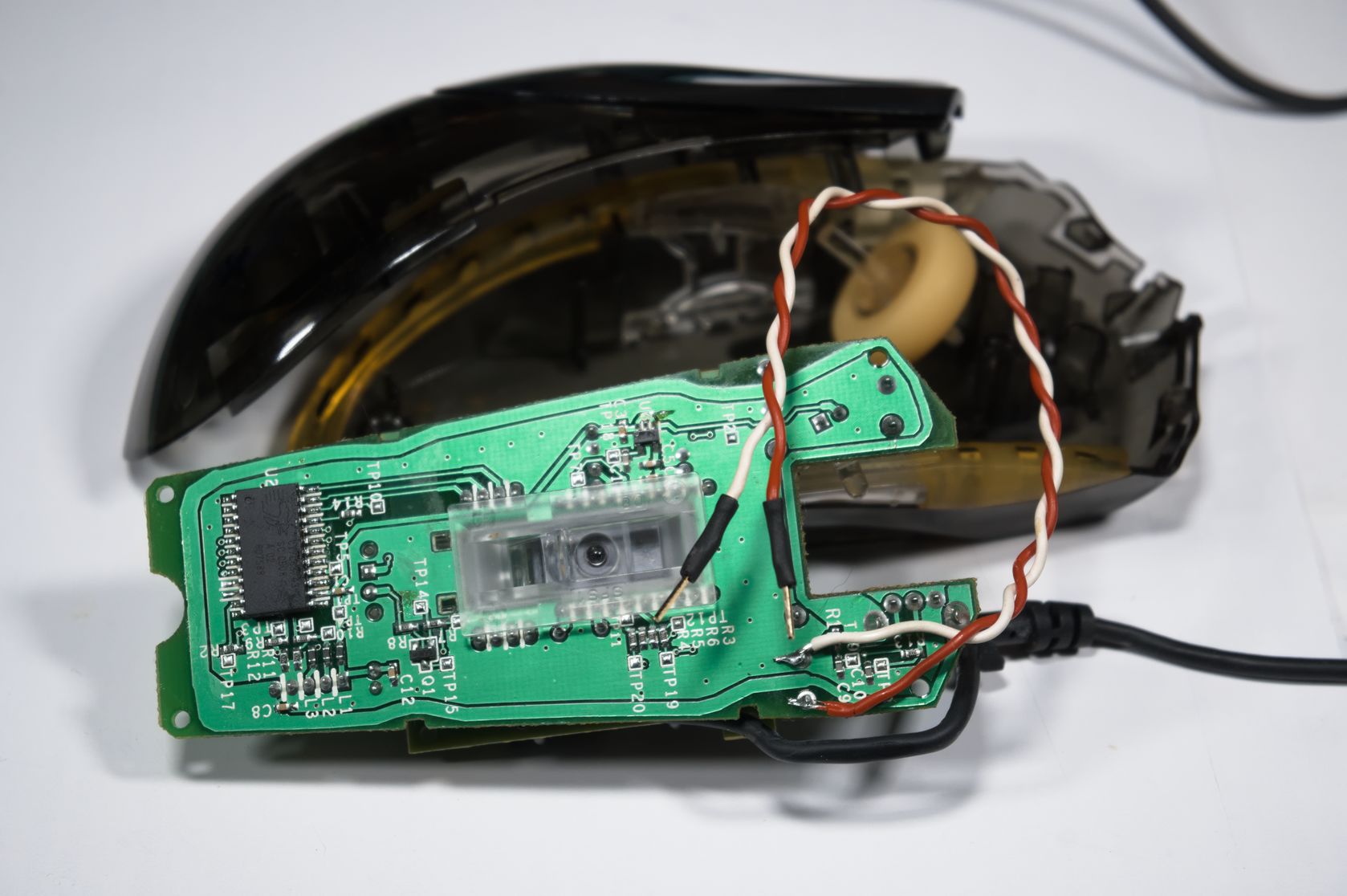


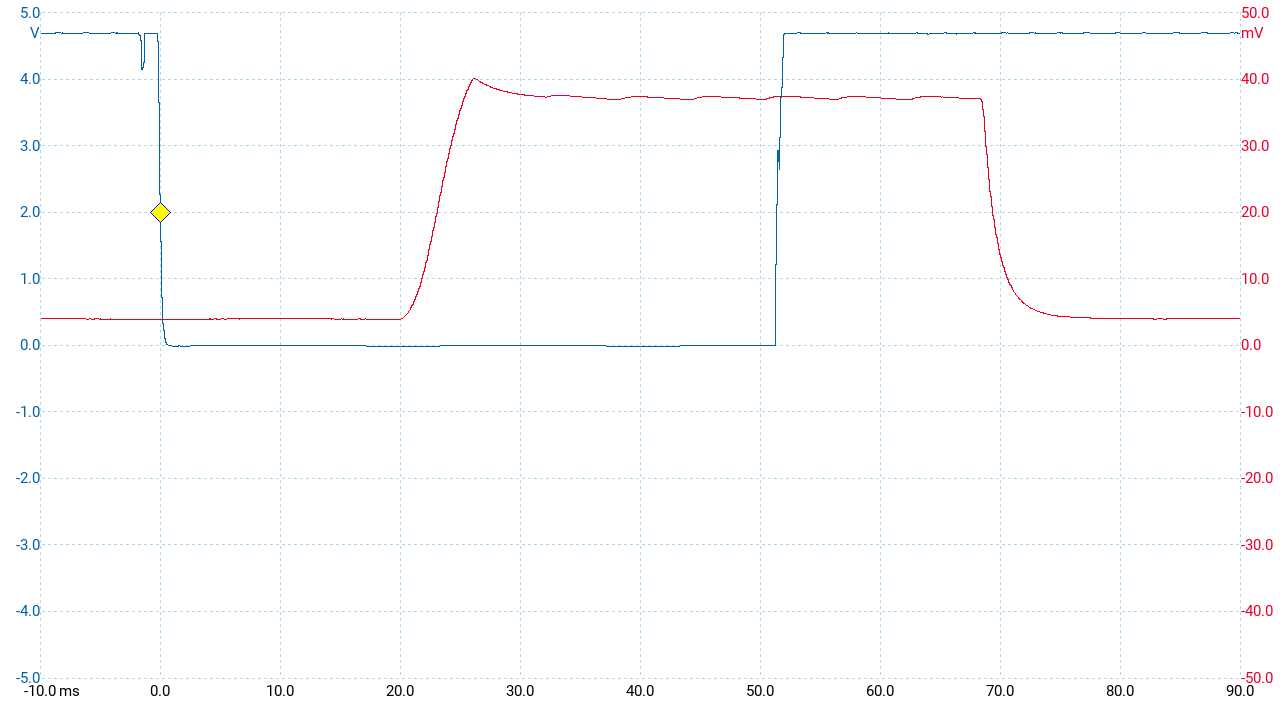

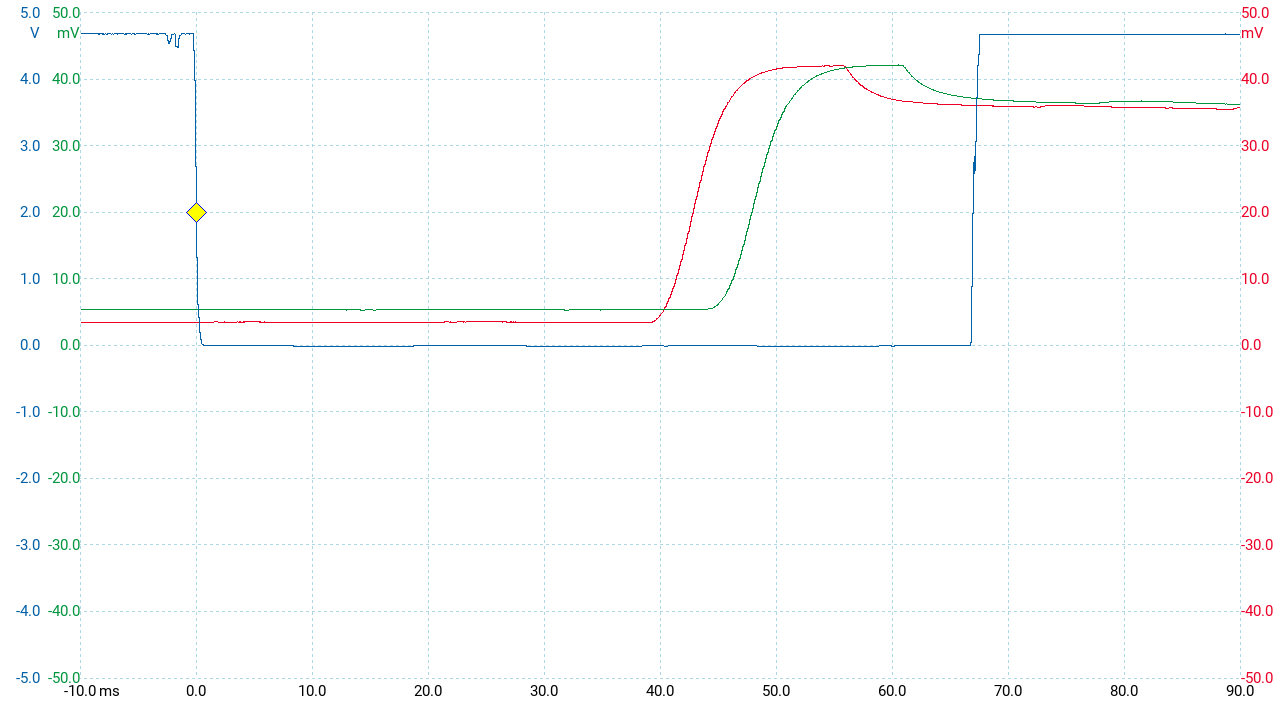

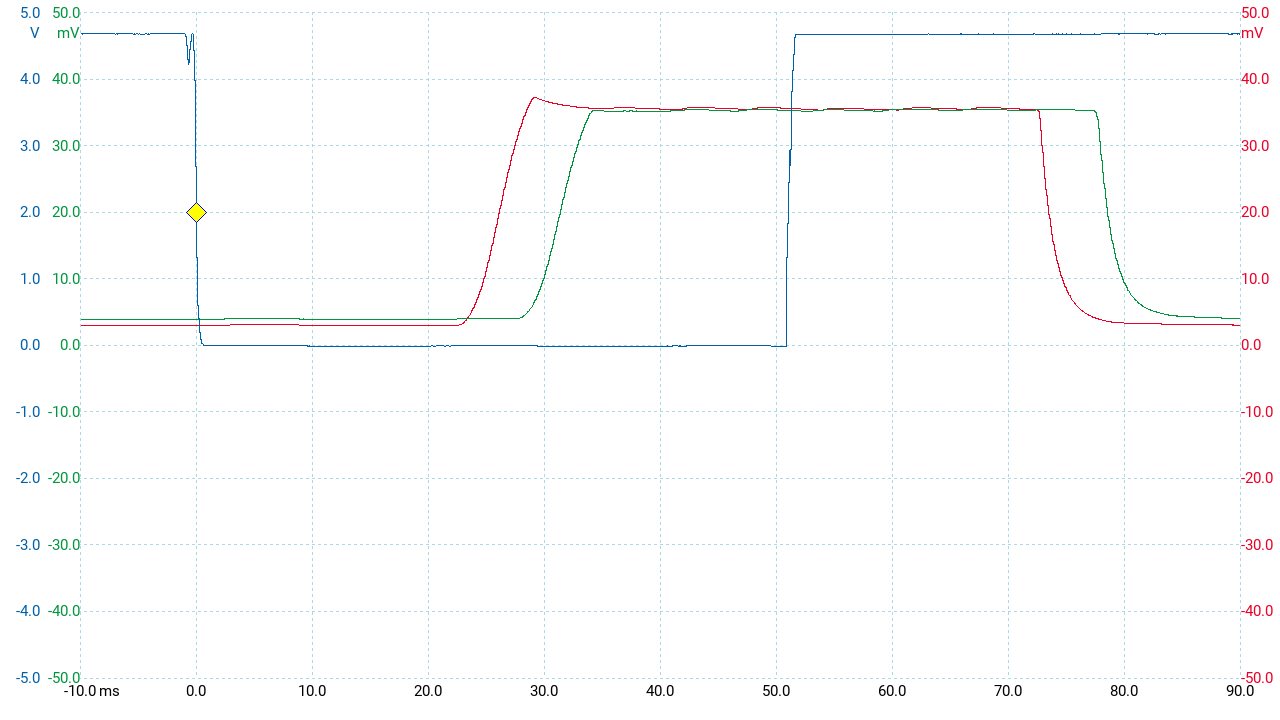

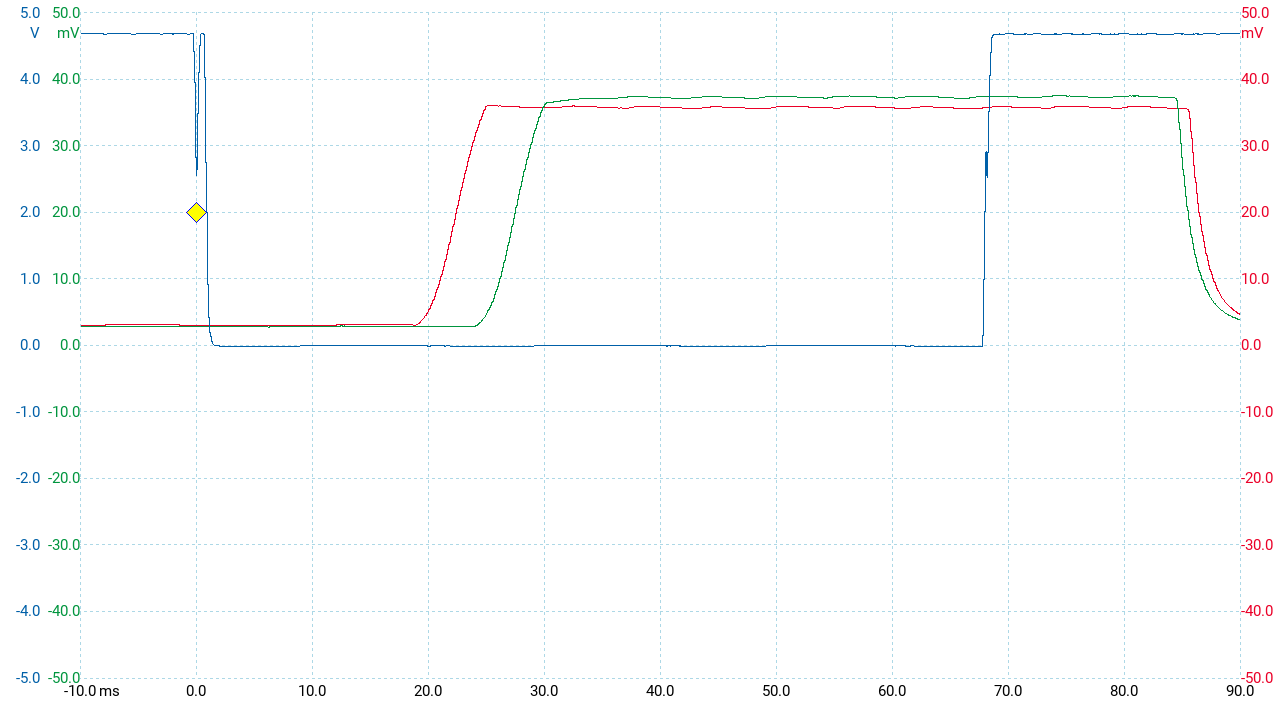

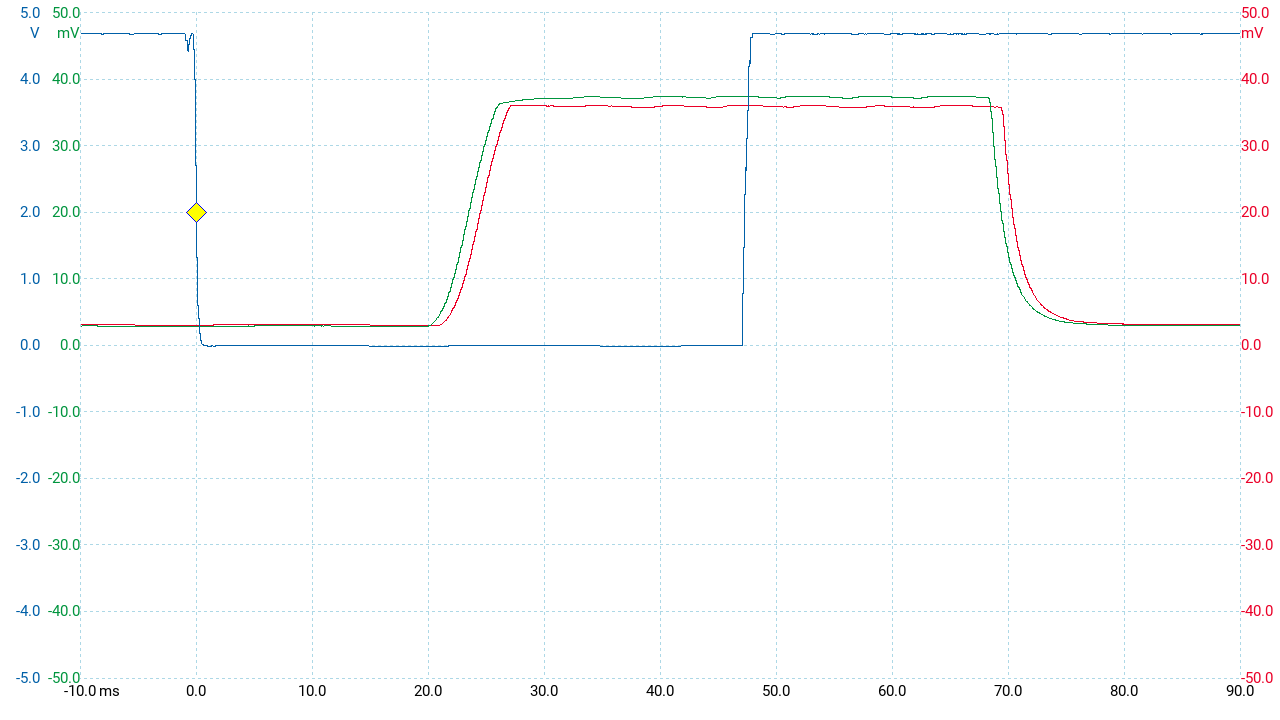

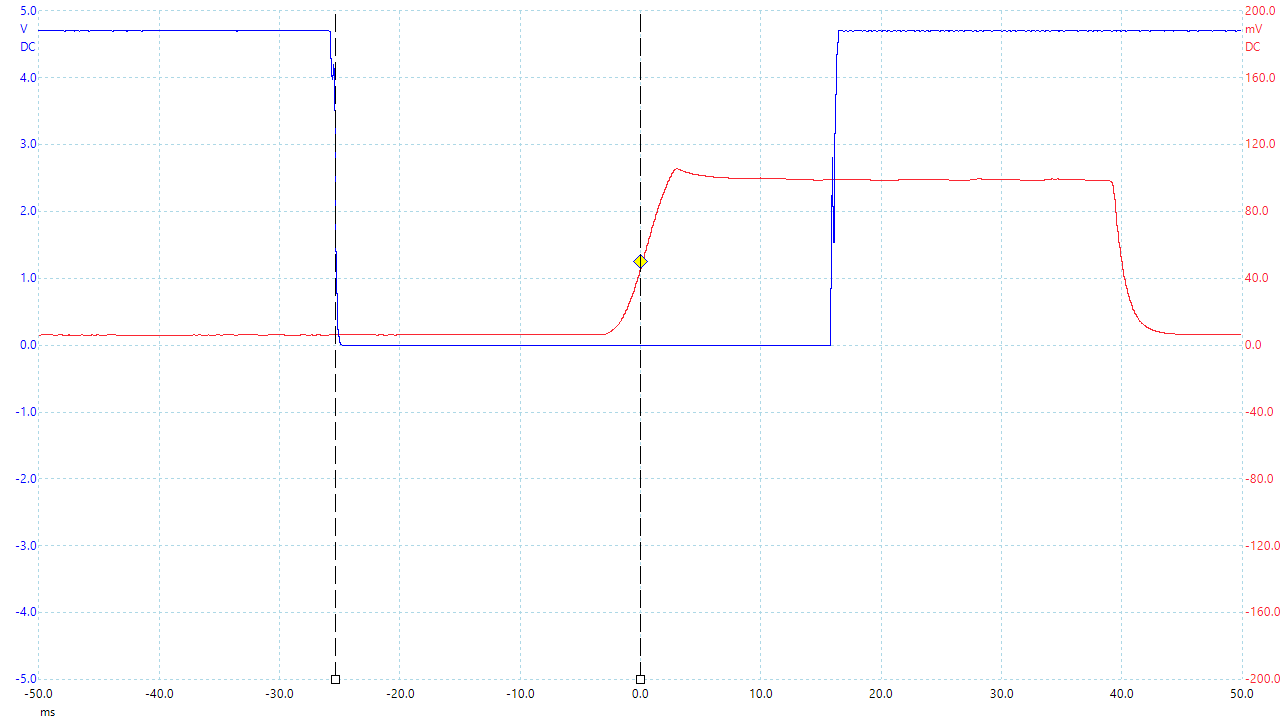


Sensing the input is trivial, since that's what mouse buttons inherently do. Here's a photo of an old mouse I modified by tacking a couple of wires across the left button, so that the click can be detected by an oscilloscope, like this:

Sensing the change in output can be achieved with some sort of light sensor, such as a photodiode. I have some of those lying around, already bodged together into a form suitable for connecting to an oscilloscope. The particular one I used was an SLD-70BG2, which is sensitive to visible light, which is exactly what a monitor produces. I added a 100kΩ resistor in parallel to improve the response time. This is what it looks like:

So what to measure? How about web browsers? People will choose browsers based on general performance, but I've never heard anyone consider input latency differences before. I wrote a simple script to flash the screen on left click for this test (full code below if anyone cares).

Code:

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8">

<title>Mouse Click Flasher</title>

</head>

<body>

<script>

(function () {

const toggleBackground = event => {

if (event.button === 0) {

document.body.style.background = event.buttons && 1 ? 'white' : 'black';

}

};

document.body.style.background = 'black';

document.addEventListener('mousedown', toggleBackground);

document.addEventListener('mouseup', toggleBackground);

}());

</script>

</body>

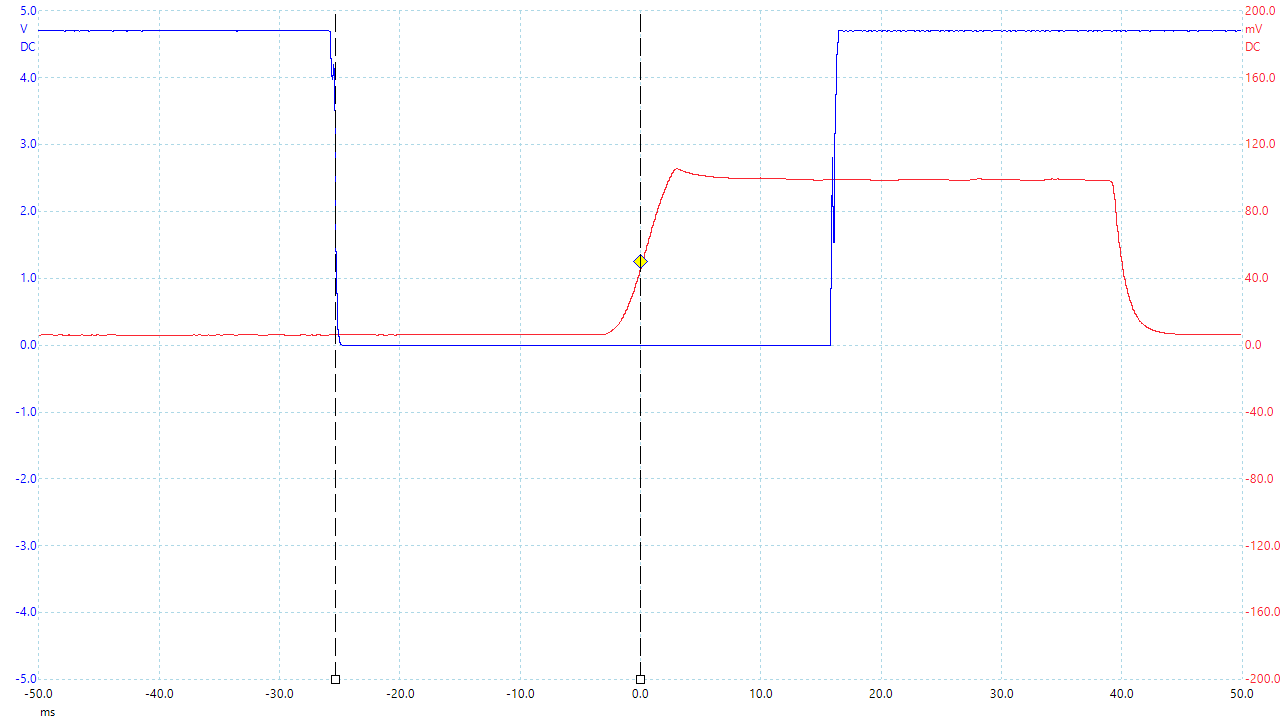

</html>Here's a pic of what it looks like on the oscilloscope:

The blue line is the mouse button, which is pulled up to 5V normally (actually 4.7V here), and shorted to ground when the button is pressed. The red line is from the photodiode. You can clearly see the monitor's response time. There are two latencies available here - one for mouse-down and one for mouse-up. I used only the mouse-down latency. I measured it as the time from mouse-down to when the monitor reached 50% (marked by vertical lines in the pic).
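The measurement above was read off the oscilloscope, but the same rule (button falling edge to the 50% point of the photodiode's black-to-white transition) could be applied to an exported trace. This is only a rough sketch: the sample format (`t` in ms, `button` and `light` in volts), the function names, and the idea of passing in the settled black and white levels are all assumptions made for illustration, not anything from the actual setup. Passing the settled levels explicitly keeps the 50% threshold independent of any overshoot.

```javascript
// Hypothetical trace format: [{ t: ms, button: volts, light: volts }, ...]

// Time at which samples[key] first crosses `level` in the given direction,
// linearly interpolated between the two neighbouring samples.
function firstCrossing(samples, key, level, direction) {
  for (let i = 1; i < samples.length; i++) {
    const a = samples[i - 1];
    const b = samples[i];
    const crossed = direction === 'falling'
      ? a[key] > level && b[key] <= level
      : a[key] < level && b[key] >= level;
    if (crossed) {
      const frac = (level - a[key]) / (b[key] - a[key]);
      return a.t + frac * (b.t - a.t);
    }
  }
  return null; // no crossing found
}

// Latency from mouse-down (button line pulled to ground) to the moment
// the photodiode reaches the midpoint between its black and white levels.
function clickToLightLatency(samples, { buttonHigh, lightLow, lightHigh }) {
  const tDown = firstCrossing(samples, 'button', buttonHigh / 2, 'falling');
  if (tDown === null) return null;
  const afterClick = samples.filter(s => s.t >= tDown);
  const mid = (lightLow + lightHigh) / 2;
  const tLight = firstCrossing(afterClick, 'light', mid, 'rising');
  return tLight === null ? null : tLight - tDown;
}

// Synthetic trace for demonstration only: click at 10 ms,
// screen ramps from black to white between 40 ms and 44 ms.
const demoTrace = [];
for (let t = 0; t <= 60; t++) {
  demoTrace.push({
    t,
    button: t < 10 ? 4.7 : 0,
    light: t <= 40 ? 0 : t >= 44 ? 1 : (t - 40) / 4,
  });
}
const demoLatency = clickToLightLatency(demoTrace, {
  buttonHigh: 4.7, lightLow: 0, lightHigh: 1,
});
console.log(demoLatency);
```
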

The monitor is a Samsung Neo G7, running at its default 165Hz, and with local dimming disabled for this test because it messes with the response time. It's in HDR mode, and the browsers are only outputting SDR, which is why it's possible for there to be overshoot on the transition from black to white.

Here's a summary of the results:

Code:

            Firefox   Chromium   Edge
    Min     24.14     24.61      20.18
    Max     45.15     42.82      49.98
    Mean    32.78     33.06      34.28

Raw data below:

Code:

    Firefox   Chromium   Edge
    26.57     36.72      33.53
    25.04     31.61      27.47
    32.54     24.61      23.97
    29.99     26.23      36.08
    32.80     26.10      41.20
    29.56     27.81      27.51
    29.69     30.07      34.00
    24.14     29.39      28.06
    25.25     29.18      20.18
    29.43     24.61      45.60
    40.95     40.69      34.25
    31.27     39.63      40.31
    38.62     25.08      26.91
    29.79     33.74      36.72
    35.32     37.66      39.33
    45.15     42.82      34.38
    42.21     39.07      36.26
    28.36     40.05      49.98
    37.60     41.50      39.70
    41.33     34.68      30.06

So... no difference. The range of values is quite wide, which is not surprising given that it depends on the JavaScript event loop, which isn't designed for low and consistent latency like a game engine is.
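For anyone who wants to check the summary rows, here is a small sketch that recomputes min, max and mean from the raw data above. The sample standard deviation is an extra that isn't in the original summary; it's included only because comparing the spread within each browser against the gap between the means is one rough way to judge the "no difference" reading. The `summarize` helper and the object layout are made up for this example.

```javascript
// Raw measurements copied from the table above, one array per browser.
const raw = {
  Firefox: [26.57, 25.04, 32.54, 29.99, 32.80, 29.56, 29.69, 24.14, 25.25, 29.43,
            40.95, 31.27, 38.62, 29.79, 35.32, 45.15, 42.21, 28.36, 37.60, 41.33],
  Chromium: [36.72, 31.61, 24.61, 26.23, 26.10, 27.81, 30.07, 29.39, 29.18, 24.61,
             40.69, 39.63, 25.08, 33.74, 37.66, 42.82, 39.07, 40.05, 41.50, 34.68],
  Edge: [33.53, 27.47, 23.97, 36.08, 41.20, 27.51, 34.00, 28.06, 20.18, 45.60,
         34.25, 40.31, 26.91, 36.72, 39.33, 34.38, 36.26, 49.98, 39.70, 30.06],
};

// Min, max, mean and sample standard deviation (n - 1 denominator).
function summarize(values) {
  const n = values.length;
  const mean = values.reduce((sum, v) => sum + v, 0) / n;
  const variance = values.reduce((sum, v) => sum + (v - mean) ** 2, 0) / (n - 1);
  return {
    n,
    min: Math.min(...values),
    max: Math.max(...values),
    mean,
    sd: Math.sqrt(variance),
  };
}

for (const [browser, values] of Object.entries(raw)) {
  const s = summarize(values);
  console.log(
    browser.padEnd(9),
    'min', s.min.toFixed(2),
    'max', s.max.toFixed(2),
    'mean', s.mean.toFixed(2),
    'sd', s.sd.toFixed(2)
  );
}
```
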

It's not possible to work out what makes up the total latency, apart from the monitor's response time, which the photodiode measures directly. Since the measured latency covers the entire chain end-to-end, it includes all sorts of latencies that aren't normally measured, like the time the mouse takes to debounce the switch.
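One piece that can at least be put in context is the refresh interval. The sketch below converts latencies into refresh periods at 165Hz. It assumes the figures in the tables are milliseconds (the unit isn't stated) and that the panel was refreshing at a fixed 165Hz (whether variable refresh was active isn't stated either). The names are invented for the example.

```javascript
// Refresh rate taken from the post; everything else here is illustrative.
const REFRESH_HZ = 165;

// Duration of one refresh at the given rate, in milliseconds (about 6.06 ms at 165Hz).
function framePeriodMs(refreshHz = REFRESH_HZ) {
  return 1000 / refreshHz;
}

// Express a latency (assumed to be in ms) as a number of refresh periods.
function latencyInFrames(latencyMs, refreshHz = REFRESH_HZ) {
  return latencyMs / framePeriodMs(refreshHz);
}

// Overall fastest and slowest samples from the raw data (both from Edge).
const fastestMs = 20.18;
const slowestMs = 49.98;

console.log('frame period (ms):', framePeriodMs().toFixed(2));
console.log('fastest sample in frames:', latencyInFrames(fastestMs).toFixed(1));
console.log('slowest sample in frames:', latencyInFrames(slowestMs).toFixed(1));
```

Under those assumptions every sample spans several refresh periods, so waiting for the next refresh can only account for a small part of the total.
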