Caporegime

Pretty comprehensive list of benchmarks run by ComputerBase on the fastest CPU (Ryzen 5000 series) and a PCIe 4.0 motherboard platform (AM4).

Plenty of rasterization, memory utilisation tests and some RT benchmarks too. Something for everyone.

I'll post a few results here; there are more in the link below for you to peruse at your convenience.

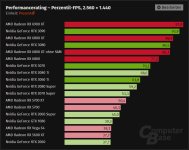

1080P

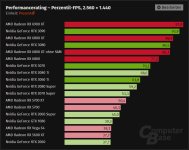

1440P

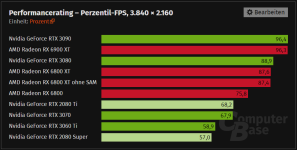

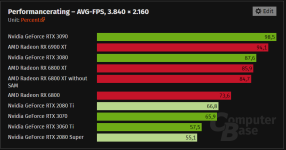

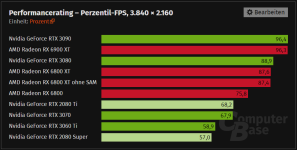

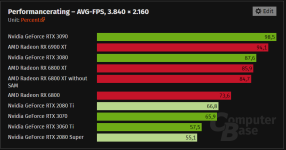

2160P

Couple of things that stand out to me. Does anyone remember when it was proclaimed that the 3080 was faster than the 6900 XT at 4K? That aged badly.

Also worth noting that the benchmark clearly shows, and comments on the fact, that Big Navi scales well at 4K; it is the other GPUs that scale poorly below 4K.

Two common myths busted and put to bed.

Link: AMD Radeon & Nvidia GeForce im Benchmark-Vergleich 2021: Testergebnisse im Detail - ComputerBase

) but it should never get faster. Stay the same if CPU limited, sure, but that's quite a notable difference.
