
Have CPUs reached the limit?

- Thread starter fraps


Soldato

- Joined

- 15 Oct 2003

- Posts

- 16,667

- Location

- Chengdu

Definitely not near the limit yet.

Even if there are limits on feature sizes, such as going smaller than the current 3nm processes, no doubt the boffins at the various manufacturers will work out how to increase performance. The techniques used on Apple Silicon might be the way forward?

The node limits are mostly economic.

Sure, TSMC will reach their 2nm node, but each wafer will cost around $30k, so the cost per transistor will be stagnant. Meanwhile the cost of designing, validating, and making the masks for each 2nm design will be astronomical.
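To put rough numbers on that: cost per transistor is just the wafer price divided by the number of working transistors that come off the wafer. Here is a minimal Python sketch of the arithmetic. Only the $30k wafer figure comes from the post; the older-node wafer price, die count, yield and transistor counts are made-up placeholders, not real TSMC data.

```python
def cost_per_billion_transistors(wafer_cost_usd, dies_per_wafer,
                                 yield_fraction, transistors_per_die):
    """USD per billion working transistors from one wafer."""
    good_dies = dies_per_wafer * yield_fraction
    working_transistors = good_dies * transistors_per_die
    return wafer_cost_usd / working_transistors * 1e9


# Hypothetical older node: cheaper wafer, fewer transistors per die.
old_node = cost_per_billion_transistors(20_000, 300, 0.8, 20e9)

# Hypothetical 2nm node: $30k wafer (figure from the post), 1.5x the
# transistors per die (assumed), same die count and yield (assumed).
new_node = cost_per_billion_transistors(30_000, 300, 0.8, 30e9)

print(f"old node: ${old_node:.2f} per billion transistors")
print(f"new node: ${new_node:.2f} per billion transistors")
```

With these assumed inputs the wafer price and the density both go up by 1.5x, so the two figures come out the same. That is what "stagnant" means here: the shrink buys more transistors per chip, but not cheaper ones.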

Chiplets and tiles will be required even if monolithic would perform better (and monolithic should perform better, all else being equal, which sort of highlights how far behind Intel's huge P cores were, since Zen chiplets outperformed them despite being chiplets).

Other tricks taken from phone SoCs and things like Apple Silicon will also be required.

Things like on-package memory, ASIC accelerators, NVMe drives directly connected to the CPU's memory system/cache, etc.

All things which will probably make upgrading and DIY impossible. Apple started soldering memory because they are greedy and anti-consumer, but there are now technical reasons too.


Soldato

- Joined

- 6 Sep 2016

- Posts

- 15,081

Heard this question dozens of times over the last 20 years.

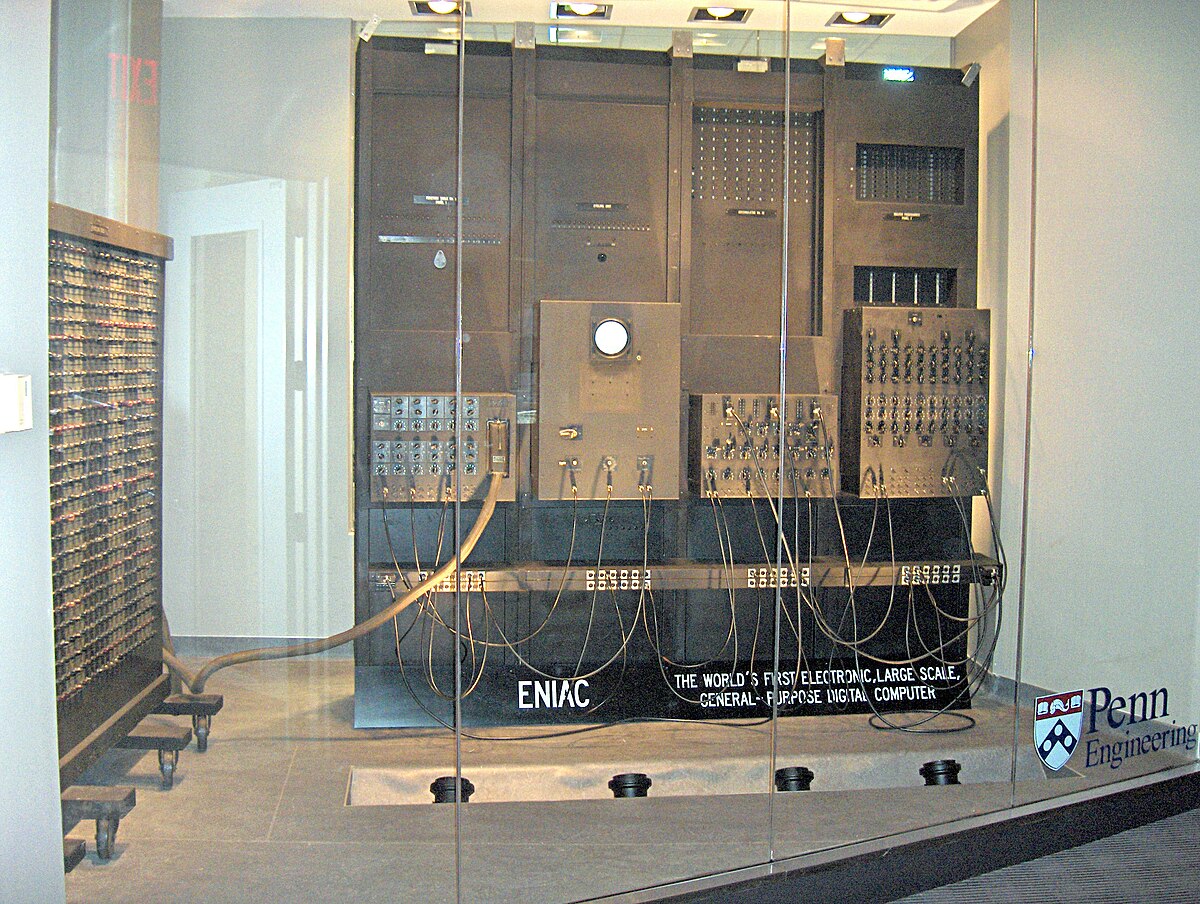

In the far, far future, a computer will be faster than this. Impossible, you say...

ENIAC - Wikipedia

Man of Honour

- Joined

- 23 Mar 2011

- Posts

- 19,221

- Location

- West Side

Look how far we've come in technology, it's astonishing. Slightly off topic, but a single gram of DNA can store up to 215 million gigabytes of data. Once they tap into that, no more mechanical hard drives.

My wife said I was full of useless information


There will never be a limit, just more cores and more distribution. I guess the only factors will be cost and how work gets distributed over ever-increasing bandwidth and faster networks.

This.

We might be reaching a limit on process node size. 10 years ago Intel CPUs were at 22nm; now we're approaching 2-3nm processes. Are we likely to be at 0.2nm in 10 years' time? Obviously not. But we can just make the die bigger to fit more transistors and more logical cores, and boom, that's a faster processor. This is already happening: the flagship desktop Intel CPU has less raw multi-threaded power than a Threadripper from a couple of years ago. How? The Threadrippers are huge and have dozens of cores. The only reason the entire domestic computing market hasn't gone that way is that videogames and a few other tasks still scale with single-core performance. Once developers and development frameworks catch up, that should no longer be a factor.

Also there are huge gains to be made in efficiency. Performance-per-core or performance-per-die matters less than performance-per-watt in many applications and that trend will only accelerate.
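The point about games still scaling with single-core performance is basically Amdahl's law: the part of a program that can't be split across cores caps the total speedup. A rough Python sketch follows. The 50% and 95% parallel fractions are illustrative guesses, not measurements of any real game or encoder.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Ideal speedup on `cores` cores when only `parallel_fraction`
    of the work can be spread across them (Amdahl's law)."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Assumed workloads: the parallel fractions are made up for illustration.
workloads = [
    ("game-like, 50% parallel", 0.50),
    ("encoder-like, 95% parallel", 0.95),
]

for label, fraction in workloads:
    for cores in (8, 64):
        speedup = amdahl_speedup(fraction, cores)
        print(f"{label}: {cores} cores -> {speedup:.1f}x")
```

Under these assumptions the half-parallel workload can never get past 2x no matter how many cores you add, while the mostly-parallel one keeps gaining. That is why core-heavy chips shine in rendering and encoding long before they help games.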


Yep, exactly. Also, for anyone thinking dies can't keep increasing: yes they can, just vertically.

When will the CPU equivalent of NAND QLC come, and what major caveats will it bring?

Soldato

- Joined

- 31 Jan 2022

- Posts

- 3,648

- Location

- UK

Some would argue that they reached a limit years ago!

It depends what you call a limit. Gone are the days when a new CPU would be twice the power of the previous generation! For a long time, generational increases have been relatively small.

We have reached a limit on size. You just can't make them much smaller because quantum physics says so. There is a thing called quantum tunnelling, where electrons can jump straight through barriers that are supposed to stop them. The smaller the transistor, the larger the problem. That's part of why chips generate so much heat these days: electrons are literally leaking out of the transistors because they are so small, doing nothing other than generating heat. Packaging is a limit too, to the point where they can't make the chip itself occupy a larger area because the substrate deforms. This very problem has held back the 5000 series GPUs from NVIDIA. Glass substrates are the next breakthrough, apparently.

The problems will be solved over time, but it's getting more and more difficult to squeeze more hardware power out for the gamer. NVIDIA has been building new features into the hardware, because it knows that's the only way it can really improve on the previous generation. Well, that's my opinion anyway.


Permabanned

- Joined

- 28 Sep 2018

- Posts

- 0

not yet it seems

I imagine cooling is going to be the biggest issue. I read somewhere that we may end up with some clever liquid cooling, much like the coolant passages in an internal combustion engine. Bit above my knowledge though. Interesting times, given AMD's progress with 3D cache etc.

Soldato

- Joined

- 6 Sep 2016

- Posts

- 15,081

Do all programs even use all the cores and threads yet?

Generally yes, programs will use the cores.

E.g. when encoding music, I can observe all cores being used to a high percentage, and the encoder encodes 8 tracks at once. Some games, like War Thunder, have core 0 at 95% and the rest at 30%, so that's a poorly coded game.