Just been watching this video and, to me, there seems to be a clear difference in all of the footage Bryan compares... and yes, I do realise there are slight differences in position which could account for these differences... all I'm doing here is highlighting what I can see in his video. It then made me think: I wish reviews included a direct picture-to-picture comparison, not just FPS... we need image quality reviewed as well!!! What's the point of one card getting 200fps if its picture isn't as detailed as the one getting 150fps?

Or do you guys think that, given the slight differences in where the images are, there is NO DIFFERENCE in PQ between any of these cards? Could it be YouTube... could it be a combination of all of these? Just thought it might be an interesting discussion...

THE QUESTION IS: DO WE THINK WE'RE LOOKING AT EXACTLY THE SAME IMAGE WHEN WE'RE BENCHMARKING, WHETHER OR NOT YOU THINK THE SCREENSHOTS BELOW ARE VALID?

That's really what the discussion is here. Do AMD and nVidia actually render and output apples to apples? If not, then benchmarks are only an indicator and we also need picture-quality analysis. Or do people simply want fps nowadays? That does seem to be how a card is measured, with quality coming second.

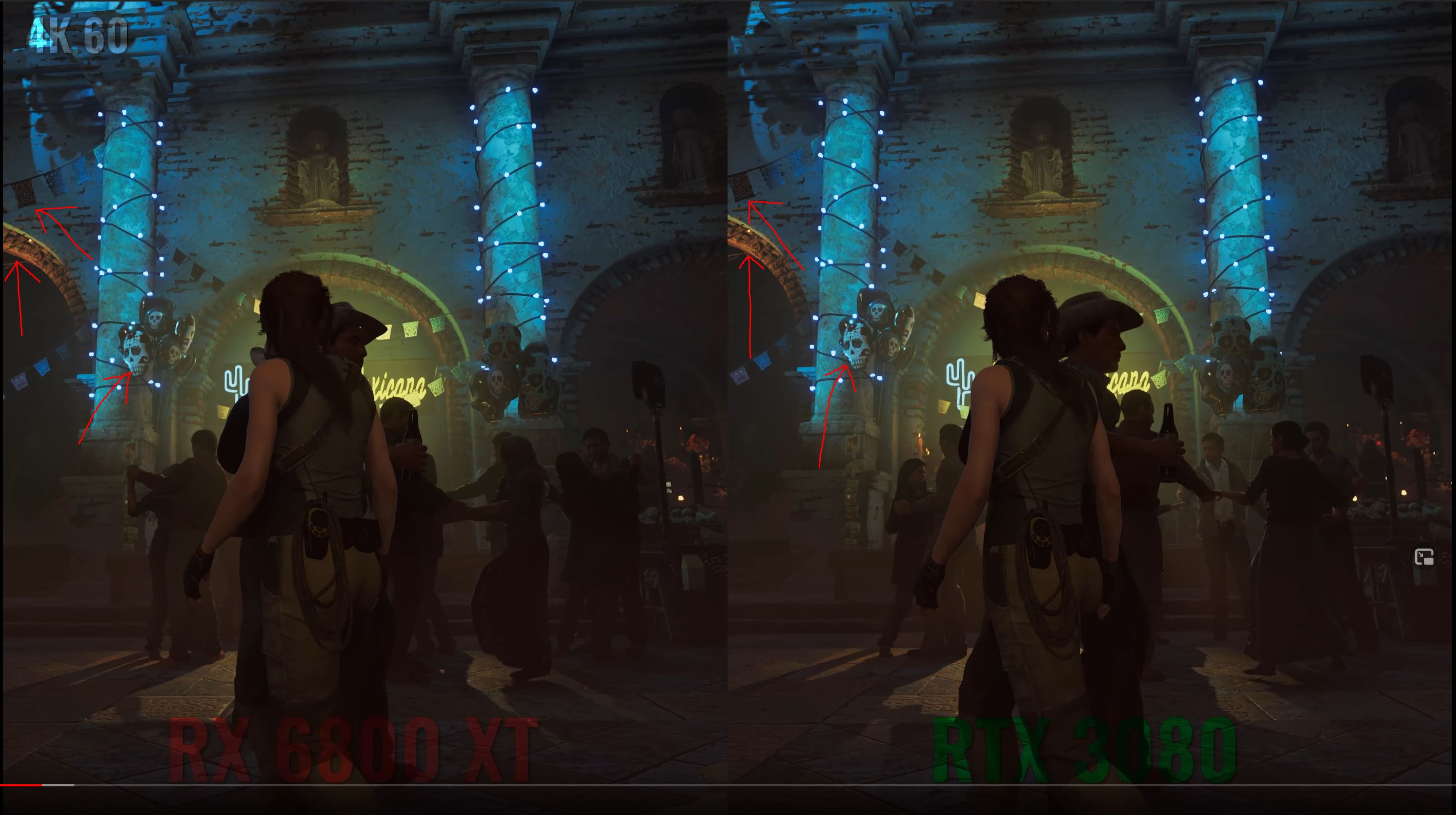

So, for example, I'd say Shadow of the Tomb Raider looks brighter on the 3080 than on the 6800XT... more punchy somehow, the whites are brighter, etc. And I'd say the two areas I've pointed at look slightly more defined on the 3080, which could come down to the encoders... who knows. This raises the question: assuming the encoders Bryan is using here are exactly the same, does this mean we're suddenly not comparing apples to apples between the two cards? In Shadow I think the 3080 has the better picture: it's slightly brighter, and I think there is more detail in it. Watch the video in 4K and see what you think.

Dirt 5 looks like a different game between the two... at some points it's like it's not even the same game. All the shadows are different, the definition on each card is different... I suppose there is RT in play here now... but look at this screenshot. I suppose the problem with this one is that we haven't got all three techs being employed in this title... but I'd say in the screenie below the 6800 is the worst and the 5700XT is the cleanest. Also, does this invalidate the benchmark if the cards are showing different things? I'd say it does...

Obviously tons of discussion could ensue here, and I'm not trying to start a war between the two, as I think they're both amazing cards. I'm not saying one card is better than the other here, BUT with all the tech these cards have, to be seeing differences as blatant as this when benchmarking, I'm not convinced we're always seeing a fair comparison. I've also just looked closely at Horizon Zero Dawn, and here are my two examples where I feel the 3080 just isn't as defined as the 6800XT... I can see more detail in the 6800XT screen with my own eyes. However, the position in the scene is ever so slightly different; could that be causing this in the first picture below? The second picture is also slightly out of sync... so could that be the explanation?

So listen, have a watch of the video, see what you guys think, and maybe do some checks yourselves... anyhow, thought I'd raise this again and have a discussion on it...