In another thread, the topic of frame rate limiting came up, and whether FRTC or Chill was better. I didn't know, so I decided to do some Science™.

I connected the left button of a mouse and a photodiode to an oscilloscope, with the photodiode pointed at my monitor. With that, I can use the oscilloscope to measure the time between pressing the mouse button and the screen changing brightness. That's the total end-to-end latency, including anything added by any frame rate limiting.

To make the screen change brightness when the mouse button is pressed, the muzzle flash of a gun in a game is handy. I used the game Second Sun for this, as it has nice bright muzzle flashes, and I happen to have it installed right now. It uses Unity as its engine, and DX11. I set it to 1080p with all the settings turned up to maximum (except with AA off), which runs at 190 fps without any limiting in the particular location I stood in for testing.

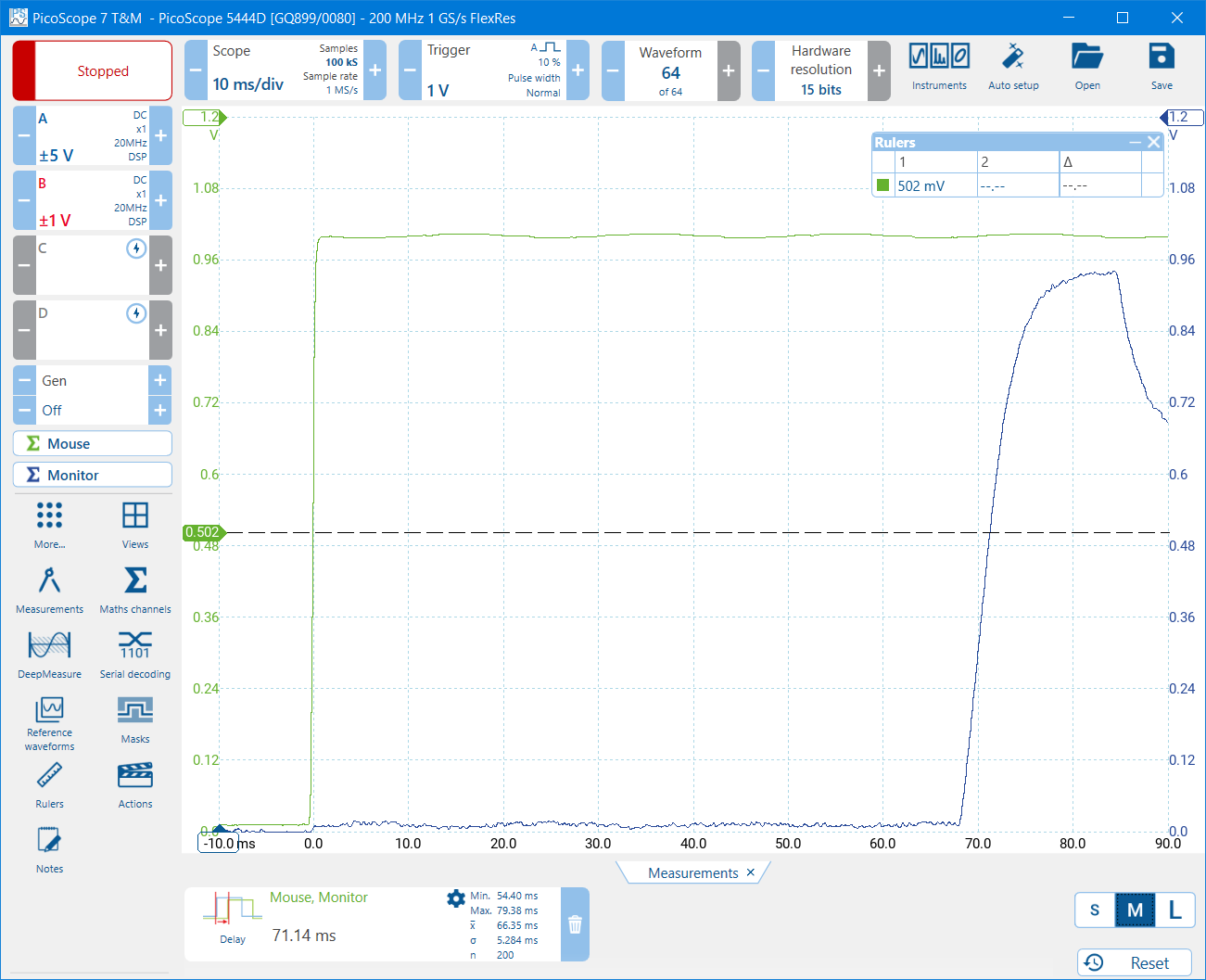

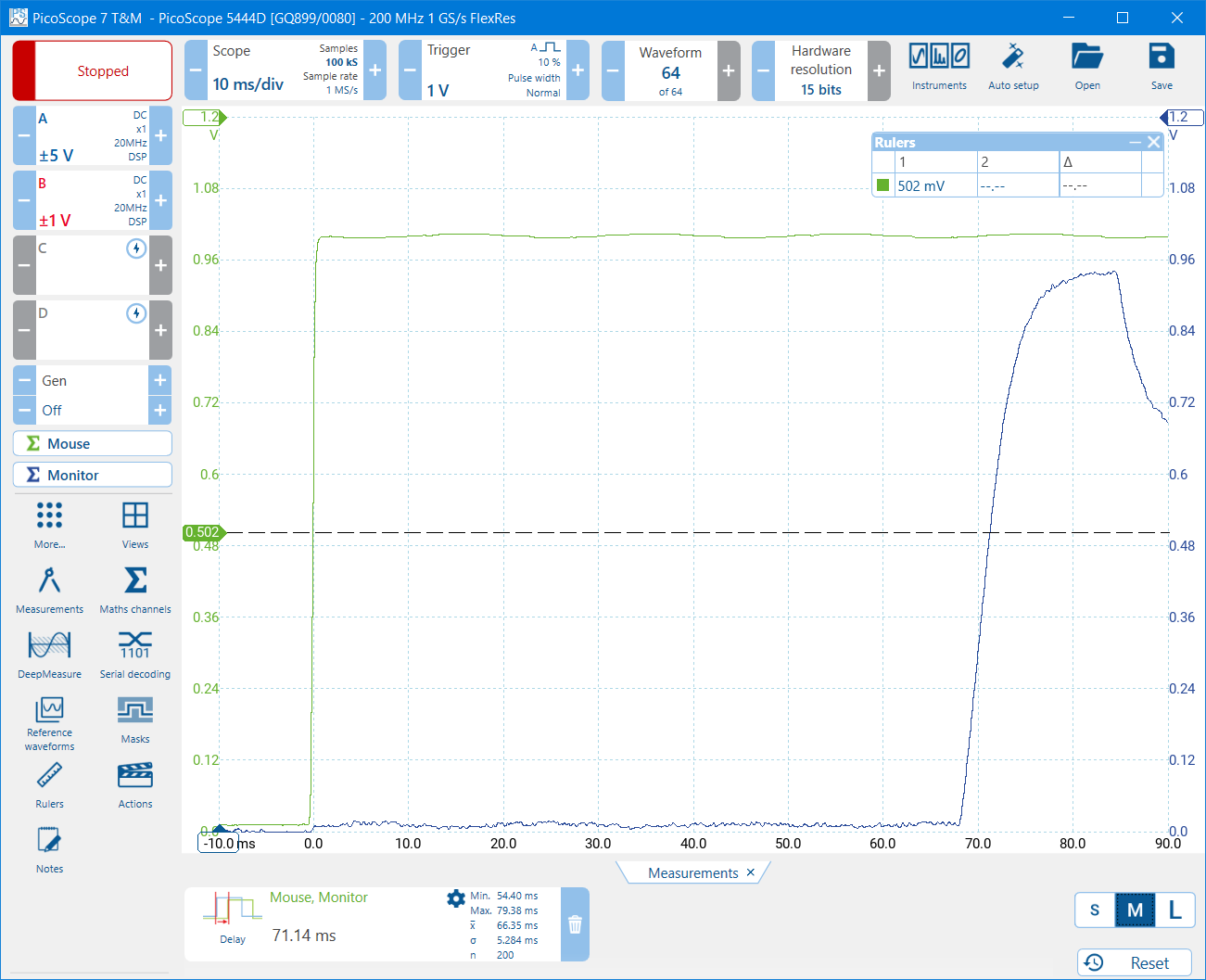

This is what it looks like on the oscilloscope. The green trace is the mouse button, the blue trace is the muzzle flash, and the latency is conveniently calculated automatically and shown underneath:

I clicked the mouse button 200 times with no frame rate limiting, then again limited to 60 fps using the game's own limiter, FRTC, and Chill. Then I created a cumulative frequency chart of the latencies:

"Cumulative frequency" means that a given point on the chart represents the proportion of clicks that were at that latency or quicker. So the median is exactly halfway up the y-axis, a line further to the left means lower latency, and a steeper line means more consistent latency.
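For anyone who wants to build the same kind of chart from their own measurements, here's a minimal sketch of the calculation in Python. The function names and the example numbers are mine and purely illustrative; the values are placeholders, not readings from this test.

```python
def cumulative_frequency(latencies_ms):
    """Return (sorted latencies, proportions) for a cumulative frequency curve.

    proportions[i] is the fraction of clicks that were at
    sorted_latencies[i] or quicker.
    """
    ordered = sorted(latencies_ms)
    n = len(ordered)
    proportions = [(i + 1) / n for i in range(n)]
    return ordered, proportions


def latency_at(latencies_ms, proportion):
    """Smallest latency that at least `proportion` of clicks met or beat."""
    ordered, proportions = cumulative_frequency(latencies_ms)
    for value, p in zip(ordered, proportions):
        if p >= proportion:
            return value
    return ordered[-1]


# Placeholder values for illustration only, NOT measurements from the test.
example_ms = [31.0, 28.5, 35.2, 30.1, 29.4, 33.3]

xs, ys = cumulative_frequency(example_ms)  # x = latency, y = proportion
median_ms = latency_at(example_ms, 0.5)    # the point halfway up the y-axis
```

Plotting `xs` against `ys` as a step line, one line per limiter, gives a chart like the one above: a line further left is quicker, and a steeper line is more consistent.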

The results aren't what I expected. I did expect "none" to be the fastest, because the frame rate is higher, and I expected the game's own limiter to be the best of the three limiters, but I didn't expect FRTC to be so much slower. It's possible that games using different graphics APIs would perform differently, but I haven't tested that.
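The arithmetic behind expecting "none" to be fastest is just the time between frames at each frame rate. Here's a quick sketch, where the function name is my own; it only covers the frame interval, which is one part of the end-to-end latency measured above, so it isn't a model of the results.

```python
def frame_interval_ms(fps):
    """Time between consecutive frames, in milliseconds."""
    return 1000.0 / fps


unlimited_ms = frame_interval_ms(190)  # roughly 5.3 ms per frame
limited_ms = frame_interval_ms(60)     # roughly 16.7 ms per frame
```

So at 60 fps a click can wait up to about 11 ms longer for the next frame to start than at 190 fps, before any extra delay from the limiter itself.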

