Microsoft Seemingly Looking to Develop AI-based Upscaling Tech via DirectML

https://www.techpowerup.com/284265/...-develop-ai-based-upscaling-tech-via-directml

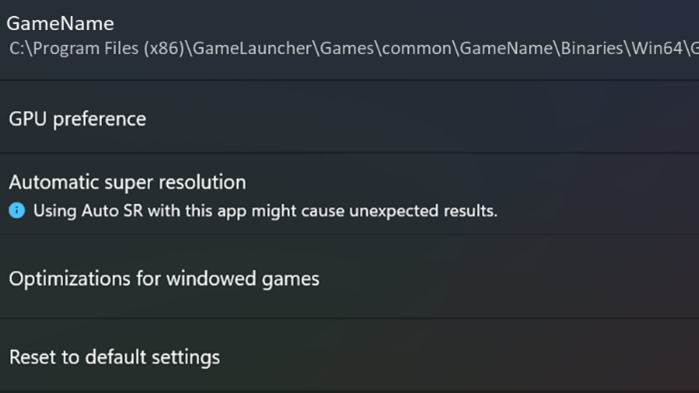

Microsoft seems to be throwing its hat into the image-upscaling battle currently raging between NVIDIA and AMD. The company has added two new job openings to its careers page: one for a Senior Software Engineer and another for a Principal Software Engineer for Graphics. Those job openings would be quite innocent by themselves; however, once we cut through the chaff, it becomes clear that the Senior Software Engineer is expected to "implement machine learning algorithms in graphics software to delight millions of gamers," while working closely with "partners" to develop software for "future machine learning hardware." Partners here could be first-party titles or even the hardware providers themselves (read: AMD). AMD itself touted a DirectML upscaling solution back when it first introduced its FidelityFX program, and FSR clearly isn't it.

It is interesting that Microsoft posted these job openings on June 30th, a few days after AMD's reveal of its FidelityFX Super Resolution (FSR) solution for all graphics cards, which Microsoft itself confirmed would be implemented in the Xbox product stack where applicable. Of course, the fact that one solution is already available does not mean companies should rest on their laurels: AMD is surely working on improving its FSR tech as we speak, and NVIDIA's DLSS has shown Microsoft the advantages of a pure ML-powered image upscaling solution. Whether Microsoft's DirectML-based solution will improve on DLSS as it exists at the time of its launch (if it ever launches) is, of course, unknowable at this point.