I have been trying to set up a proxy for some services on my server via Tailscale so I can use a nice DNS name and not deal with ports. I got one to work, but everything else is ignoring the port.

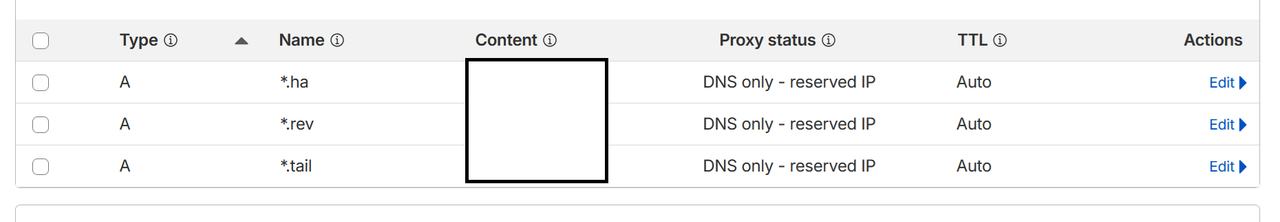

I am running Nginx in Docker Compose on OMV. All my services are also in Docker on OMV, except Home Assistant, which is in a VM on the same server, and another service on a different server that also uses Docker Compose and OMV. I can access them on the local network and via the Tailscale DNS name or IP, using the correct port. The DNS I use is from Cloudflare. I created an SSL cert with their token, but it doesn't matter whether I use it or not; I still have the same problem.

For example, I created a proxy host that forwards domain.com to localhost on port 81. When I access domain.com, I am redirected to localhost instead of localhost:port. I also tried setting the forward host to the Docker container name instead of localhost, since they are on the same network, but I still get directed to localhost.

As of right now, I have to use domain.com:port to access the correct service, which is not ideal.

I hope this explains it well since I am a networking noob.
