NVIDIA RTX 50 SERIES - Technical/General Discussion

- Thread starter scubes


On raw rasterization performance it definitely won't beat it, but with the full DLSS benefits exclusive to the 5000 series it would most likely go to the 5080. So I'd say games released going forward will probably run better on the 5080, as most devs will make more and more use of DLSS: it lets them ship games that aren't fully optimised, knowing DLSS will help cover the performance drop anyway.

Not so fast, you are falling for Nvidia marketing. Only MFG is a 50 series exclusive, and since a 5080 is obviously not going to come close to a 4090 as a base performance comparison, and we already know that MFG has the same input latency "feel" as regular FG based on what reviewers witnessed at CES, we know that a weaker card than a 4090 will still feel like it has latency even if the MFG 5080 is showing 240fps vs the 4090's otherwise let's say 120fps.

You will have a worse gaming experience in that sort of scenario because a strong BASE performance matters more for a good frame generated experience, which is why people with weaker 40 series cards tend to hate using frame gen on modern heavy titles.

Associate

- Joined

- 11 Jul 2020

- Posts

- 111

On raw rasterization I said 4090 all day, but from a performance standpoint for games going forward the 5080 will be the better-performing card when making use of all the features available to it. I do agree that if you are running these new DLSS features without a good base fps to begin with you will be in for a bad time, but realistically a 5080 will still be able to achieve a good base fps in most titles out there today. The only game off the top of my head that would bring the fps down is Cyberpunk, and even there the 4090 only manages 20ish fps with all the bells and whistles on at raw rasterization.

That makes sense to me.

If a 4090 is pulling 120fps native and a 5080 is pulling something like 100fps, once you’re at the point when you’re ok with turning on frame gen then that difference might become totally redundant in some use cases.

In fact, if you can even hit 80 fps native with the 5080 then you're potentially in the realms of tripling that with frame gen. It's not going to be quite as smooth as the 4090 starting from the higher base fps, but is it material? Not sure, but I am sure there will be opinions all over the place on it for some time.
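A rough way to put numbers on the posts above, as a sketch only. It assumes frame generation simply multiplies the displayed frame rate at no cost, while responsiveness still follows the base (rendered) frame time. The function names and the 2x/3x multipliers are illustrative assumptions; real frame gen adds overhead and extra latency that this ignores.

```python
def displayed_fps(base_fps: float, multiplier: int) -> float:
    """Displayed frame rate, assuming frame generation outputs
    `multiplier` frames per rendered frame with no overhead."""
    return base_fps * multiplier


def base_frame_time_ms(base_fps: float) -> float:
    """Time between rendered frames, which input response tracks."""
    return 1000.0 / base_fps


# Base frame rates come from the posts above; the multipliers are
# assumptions (2x for regular frame gen, 3x for the "tripling" case).
scenarios = [
    ("4090, 120 fps base, 2x FG", 120, 2),
    ("5080, 100 fps base, 3x MFG", 100, 3),
    ("5080, 80 fps base, 3x MFG", 80, 3),
]

for label, base, mult in scenarios:
    print(f"{label}: {displayed_fps(base, mult):.0f} fps shown, "
          f"{base_frame_time_ms(base):.1f} ms per rendered frame")
```

On this simplified model the 80 fps base shows 240 fps but still renders a real frame every 12.5 ms, against roughly 8.3 ms for the 120 fps base, which is the gap both sides of the argument are describing.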


Soldato

- Joined

- 21 Jul 2005

- Posts

- 21,025

- Location

- Officially least sunny location -Ronskistats

That is my point, it won't. It only has MFG as the exclusive RTX 50 tech to make use of; the rest comes to RTX 40 too. If the base performance isn't high enough to yield good results at frame-generated framerates then yeah, bad times ahead at 300fps MFG. Have you played a game with regular FG that has input lag? You'd know what I mean if you have.

Even going by the Nvidia PR videos, Cyberpunk is shown at 120fps with a 5080 using MFG, whilst a 4090 gets around that with regular FG at 4K. There is about 7ms extra added with MFG as per DF's video so you can expect more noticeable input latency in that pipeline with the 5080 than with the 4090, though how much Reflex 2 controls that down is not yet known, but keep in mind Reflex 2 comes to all RTX cards. Though bear in mind that enhanced frame gen also comes to RTX 40 series so latency is lower and framerate is higher still. You can still play path traced Cyberpunk without frame gen on a 4090 and get 75fps at 4K, I know because that is what I can do right now.

There is a reason why at no point have NV compared 5080 to 4090 in any material, aside from Jensen's claim a 5070 is 4090 "performance" lol. And there's a reason why review embargo is literally on the wire.


It looks like something you’d find in your cereal box in the 90s.

Don't diss 90s cereal box freebies! I remember getting cool stuff in there, like game CDs and all sorts. There was a football game that ran on a crappy Intel Pentium with a few hundred megabytes of RAM and like 16 or 32MB VRAM. Nowadays, the only freebie we get is the privilege of paying more for stuff.

I feel like I'm going to get home from work tomorrow and this thread will have grown 50 more pages full of folks arguing whether paying 30+% more money is worth the 25% performance improvements in 24 hours and 17 mins from now

Long story short... only for those rich folks in their $10000 PC gaming command centres

Associate

- Joined

- 11 Jul 2020

- Posts

- 111

I currently own the 4090 myself, so it's not as if I'm trying to talk the card down. It is a fantastic card and will continue to be, but my point still stands that the 5090 will be a better-supported card going forward thanks to the extra DLSS 4 feature it has, and having GDDR7 memory is also a nice benefit. I have used my 4090 both with and without any form of DLSS, and I can appreciate it without as well as the benefit of having DLSS to squeeze out that extra performance.

If you are one of those who play a game and stop at certain sections to study it and look for issues, you are always going to find something. But if you normally activate DLSS, appreciate the good upscaling job it does and simply game, it is really good at what it does. A newer version from Nvidia isn't going to make that experience any worse; it is most likely going to improve on what is already the best upscaling tech out there.

I thought we were on about the 5080, not the 5090. Obviously the 5090 is the better card, at twice the cost! GDDR7 isn't going to make any difference when you hit the 16GB limit in the newest games with no sign of devs being more sparing with VRAM usage. Remember the new texture compression feature only applies to games that support it, of which there is only one, the new CoD.


Associate

- Joined

- 11 Jul 2020

- Posts

- 111

Sorry, that was a typo by me, I do mean the 5080 lol

Associate

- Joined

- 11 Jul 2020

- Posts

- 111

DLSS has also already been shown to use less VRAM, which helps with that issue.


No, that's only frame gen. Before frame gen is used we have games that use 14GB VRAM or higher at times, Indiana Jones being a prime and recent example. The 5080 should have been a 20GB card at least, not 16. It makes no sense other than NV planning a 5080 Super card in the summer.


I think you may have the same monitor as me, the Samsung G9 57 inch; when you said 2x4K screens it made me think so, and it is the reason why I plan to upgrade from my 4090 as well. You have to remember that the higher the resolution, the more workload you are normally putting on the GPU, so I think this card is more beneficial to those of us who game above the 4K threshold. It allows me for the first time to unlock 7680x2160 at 240Hz, as I am currently locked to 120Hz at that resolution due to the DisplayPort version on the 4000 series. If you are a below-4K gamer and already have a 4080 or 4090, then I really don't think going for a 5000 series card makes much sense.

Indeed the same monitor!
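The 120Hz versus 240Hz point can be sanity-checked with a back-of-envelope bandwidth estimate. This is a sketch under stated assumptions: 10-bit colour (30 bits per pixel), a 3:1 DSC compression ratio, no blanking overhead, and a DisplayPort 2.1 UHBR20 link on the newer card. None of those figures come from the thread, and the function name is hypothetical.

```python
# Approximate usable link rates in Gbit/s after line coding.
DP14_HBR3_GBPS = 25.92    # DisplayPort 1.4, 4 lanes HBR3, 8b/10b coding
DP21_UHBR20_GBPS = 77.37  # DisplayPort 2.1, 4 lanes UHBR20, 128b/132b coding


def video_gbps(width, height, hz, bits_per_pixel=30, dsc_ratio=1.0):
    """Pixel data rate in Gbit/s, ignoring blanking intervals.

    bits_per_pixel=30 (10-bit RGB) and dsc_ratio are assumptions."""
    return width * height * hz * bits_per_pixel / dsc_ratio / 1e9


for hz in (120, 240):
    rate = video_gbps(7680, 2160, hz, dsc_ratio=3.0)  # assumed 3:1 DSC
    print(f"{hz} Hz: ~{rate:.1f} Gbit/s | "
          f"fits DP 1.4: {rate <= DP14_HBR3_GBPS} | "
          f"fits DP 2.1 UHBR20: {rate <= DP21_UHBR20_GBPS}")
```

Under these assumptions 7680x2160 at 120Hz squeezes into a DisplayPort 1.4 link while 240Hz does not, which is consistent with the limit described in the post.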

Associate

- Joined

- 11 Jul 2020

- Posts

- 111

Yes, but why would you limit the card in that situation? I'm saying the 5080, while making use of all its available resources, is going to be the better card going forward, and even 14GB of VRAM still falls within the 5080's capability anyway.

14GB VRAM use by a game doesn't account for system VRAM use: DWM uses a chunk of VRAM, background apps use VRAM, and the total can hit and easily exceed 16GB in particular games once system use is included. In those situations the game is caching assets to system RAM, which hurts 1% lows or causes stutters.
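The budget argument above is simple addition, sketched here for illustration. Only the 14GB game figure and the 16GB capacity come from the thread; the DWM and background-app values are made-up placeholders, not measurements, and the function name is hypothetical.

```python
CARD_VRAM_GB = 16.0  # 5080 capacity discussed in the thread


def vram_overcommit_gb(game_gb: float, other_gb: list[float],
                       capacity_gb: float = CARD_VRAM_GB) -> float:
    """Return how many GB total demand exceeds capacity by (0 if it fits)."""
    total = game_gb + sum(other_gb)
    return max(0.0, total - capacity_gb)


# 14 GB is the game figure from the post; the other values are
# hypothetical placeholders for DWM and background apps.
hypothetical_other = [1.0, 1.5]
spill = vram_overcommit_gb(14.0, hypothetical_other)
print(f"Overcommitted by {spill:.1f} GB")
```

Anything above zero here is what would have to spill into system RAM, which is the stutter scenario the post describes.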

Associate

- Joined

- 11 Jul 2020

- Posts

- 111

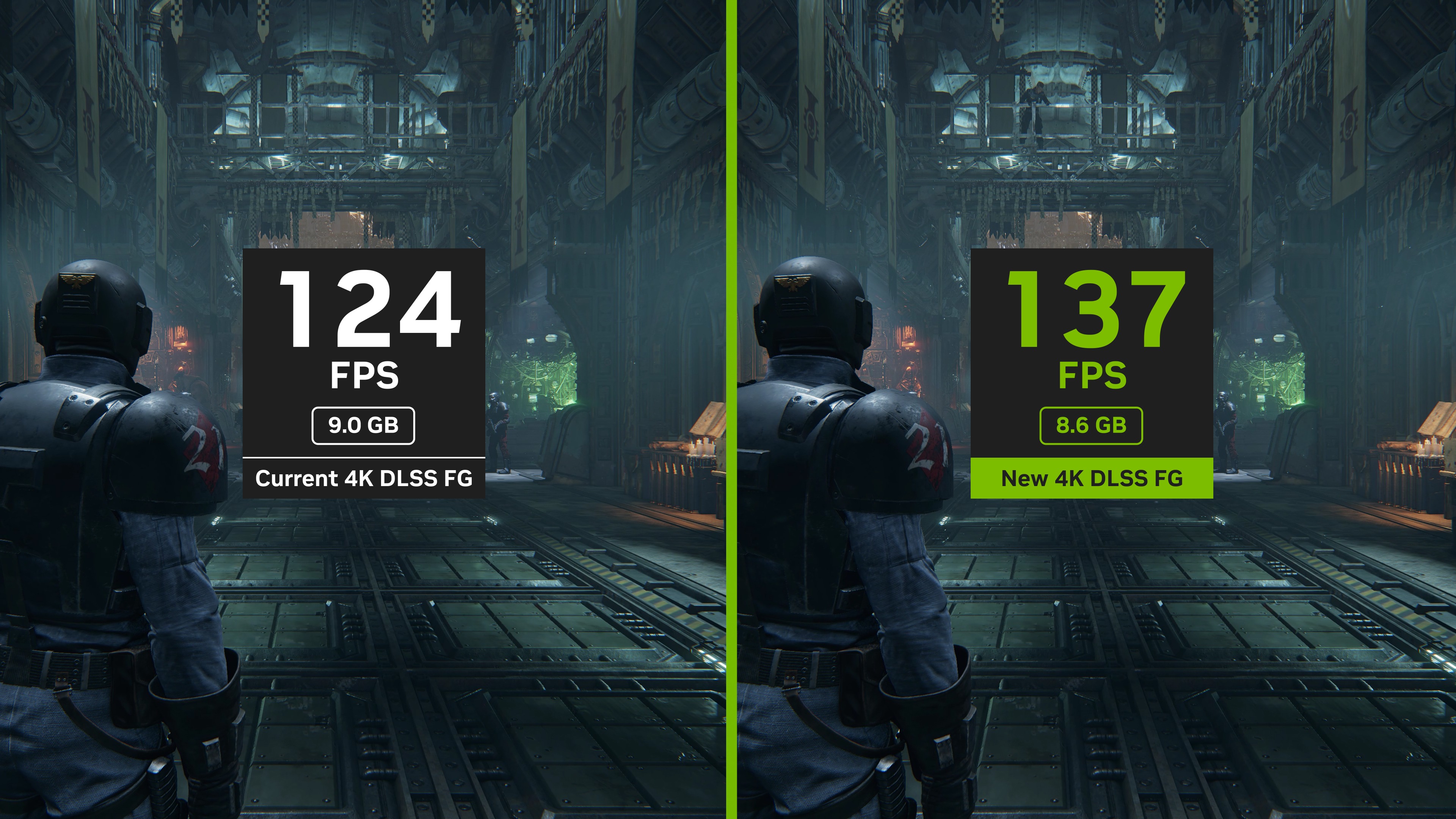

But again, games going forward are mostly going to support frame gen, so not using it when it is beneficial to you would be silly IMO. I do agree about Nvidia skimping on the RAM; I think it should have matched the VRAM of the 4090. On the flip side, they have brought out texture compression to help alleviate that. The picture I included above is a Warhammer game set to 4K with all the bells and whistles on. Game companies now know they have compression software available to help with VRAM usage, so of course they are going to use it to their benefit.

Nvidia - Designing the Founders series video

Interesting to see a visualisation of that 4 slot prototype with the rotated PCB that pictures appeared of a while ago. What a beast that would have been!


Same here. 240Hz here we come!

Interesting to see a visualisation of that 4 slot prototype with the rotated PCB that pictures appeared of a while ago. What a beast that would have been!

Gamers Nexus had a teardown video of it a while ago.