
This is not news to me but it may be to some.

Source

Full article here, it's a very good read.

http://www.brightsideofnews.com/new...rketing-offensive-ahead-of-hawaii-launch.aspx

Nvidia Launches "AMD Has Issues" Marketing Offensive ahead of Hawaii Launch

Recently I read an article on one of the popular technology websites which drew a comparison between multi-monitor gaming and 4K displays, joining the fray in claiming that Nvidia offers a superior 4K experience to AMD. Our experience, however, was exactly the opposite.

First, a bit of background: here at BSN we have more than five years of experience working with 4K equipment, including displays. As a video enthusiast and 4K gaming proponent, I have to state that while gaming on a 4K panel is more immersive than AMD's Eyefinity or Nvidia (3D) Surround, the biggest problem lies within the displays themselves.

...

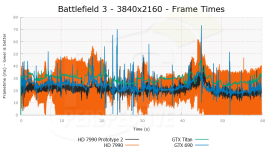

When running on GeForce cards (tested with GTX 580 / 590 / 680 / Titan), games would show a vertical tear (not a horizontal one), regardless of whether Vsync was on or off. Furthermore, Nvidia cards would extend the taskbar across only 50% of the screen, and the wallpaper was duplicated.

Our test setup: we use HD 7970 boards from PowerColor and GTX Titan cards from Nvidia.

On the other hand, AMD Radeon hardware (tested on HD 7970) was simple: just select Eyefinity mode and voilà, the taskbar would fill the screen, there was a single wallpaper and, most importantly, there was no vertical tear in the middle.

The reason for these issues was simple: the display required two Dual-Link DVI cables, and Nvidia consumer cards experienced issues when running in a dual-display setup. The story was exactly the opposite on the Quadro cards, as we saw when running the Quadro K5000: no tearing whatsoever, and a unified display (single wallpaper). After we worked with Nvidia to explain the exact nature of the problems, the company released a driver that fixed all of the issues we encountered.

At the time, AMD offered a better gaming experience, as you can read in our detailed 4K Shootout article. Bear in mind that we tested scaling across single-, dual- and triple-GPU configurations on both AMD and Nvidia.

...

And this brings us to the most important part of the whole story. Why would someone write an article mentioning or criticizing the 4K gaming experience a week ahead of AMD's launch of 4K-optimized drivers and the Hawaii GPU architecture in (you guessed it) Hawaii?