
Nvidia preparing new Geforce with GK110

- Thread starter Boomstick777


Soldato

I guess it's something that's going to have to be seen to fully understand the difference it will make, at least for me. My fps is never that low, so I guess it will generally benefit a slower GPU more than the more powerful ones. I have to admit, though, I don't really have a problem with lag or stutter when using vsync. There is some input lag, granted, but I can remove that by either turning vsync off or using an fps limit. Obviously if the fps drops it's noticeable, but personally I don't start to notice it unless fps drops below, say, 50. Tearing doesn't always occur with vsync off either; that seems to vary on a game-by-game basis for me. So it sounds like a nice feature, but not one I'd personally describe as game-changing, because there's not really an issue there in the first place, except in a few circumstances. For those situations I'm sure it will help. I can see the benefit of it a bit more now, but I don't think it would change too much for me personally.

The difference between letting the monitor refresh on its own terms and G-Sync obviously has to be seen, so I can't comment. But I can easily see why there would be a huge benefit in reducing frame timings by as much as 20ms. Forget screen tearing; that was just the most obvious benefit for them to touch on. Synchronising the monitor's refresh with the GPU gives the effect of a higher frame rate, because the display never has to catch up. That much I can safely say is a really good thing. Take situations in Battlefield where the GPU is forced to render a lot of detail (smoke, fire, claret, etc.): when the frame rate drops to 30-40fps, the loss is still very apparent for that short period, even with vsync enabled. They're essentially giving you free performance by keeping both devices almost completely in step. I suppose the eventual outcome might be that GPU-demanding games will run more smoothly with it. Much like Mantle, you could say, but without the engine limitations. Both of these technologies working together would be awesome; I jiggle at the thought. Oh well...
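To make the frame-timing point concrete, here is a minimal Python sketch, assuming a fixed 60 Hz panel with vsync versus an idealised variable-refresh panel that refreshes the moment a frame is ready. It deliberately ignores GPU stalls from double buffering and any panel minimum/maximum refresh limits, and the function names are hypothetical. With a steady 28 ms per frame (about 36 fps, inside the 30-40fps Battlefield case), vsync puts frames on screen at uneven 33/33/17 ms gaps, while variable refresh keeps every gap at 28 ms.

```python
import math
from itertools import accumulate

REFRESH_HZ = 60.0
TICK_MS = 1000.0 / REFRESH_HZ  # ~16.67 ms between fixed refreshes


def vsync_display_time(ready_ms: float) -> float:
    """Fixed refresh + vsync: a finished frame waits for the next refresh tick."""
    return math.ceil(ready_ms / TICK_MS) * TICK_MS


def variable_refresh_display_time(ready_ms: float) -> float:
    """Idealised variable refresh: the panel refreshes as soon as the frame is ready."""
    return ready_ms


def display_gaps(frame_times_ms, policy):
    """Time between successive frames actually appearing on screen."""
    ready = list(accumulate(frame_times_ms))  # when each frame finishes rendering
    shown = [policy(t) for t in ready]        # when each frame reaches the screen
    return [later - earlier for earlier, later in zip(shown, shown[1:])]


if __name__ == "__main__":
    frames = [28.0] * 6  # steady ~36 fps render rate
    print([round(g, 1) for g in display_gaps(frames, vsync_display_time)])
    # [33.3, 33.3, 16.7, 33.3, 33.3]
    print([round(g, 1) for g in display_gaps(frames, variable_refresh_display_time)])
    # [28.0, 28.0, 28.0, 28.0, 28.0]
```

The uneven vsync pattern is the judder people notice when the frame rate dips below the refresh rate, even though the GPU is rendering at a perfectly steady pace.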

Boomstick, that is just wild speculation, but I'm really curious as to what this card is. If it is fully unlocked at those clocks, it doesn't make a whole lot of sense, as the Titan has the memory to back it up.


Caporegime

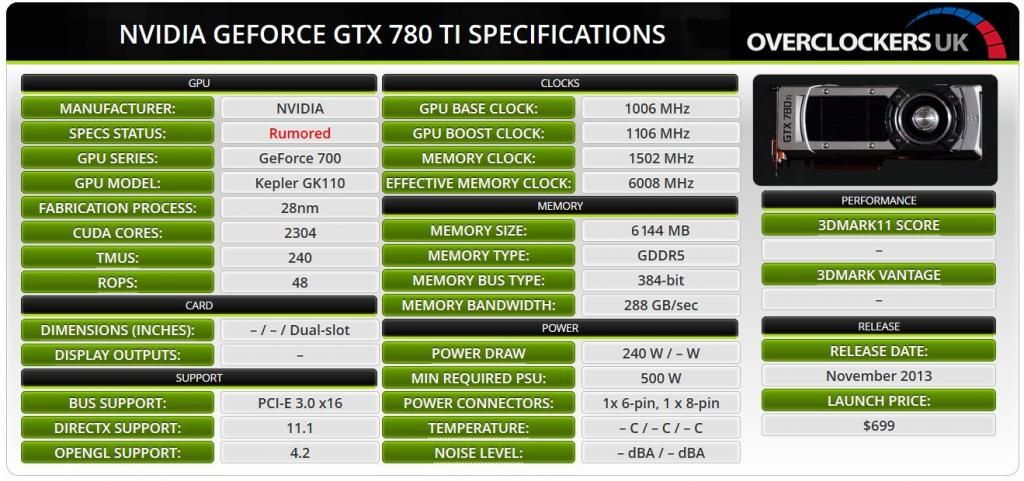

Thought I would have a play with what I think the specs will be

Soldato

Yeah, I'd tend to agree with Greg. Next week needs to hurry up so we can find out.

Man of Honour

Very unlikely

It could come in many variations, but not one with 2880 cores. Even if Nvidia wanted to throw their money away, I don't think they would be able to produce enough full-fat GK110 chips to keep up with demand.


I think you might be a bit low on the cores. The Quadro K6000 has 2880 @ 902 MHz to deliver 5.2 TFLOPS. Surely Nvidia will want to match/beat the 290X in the numbers game?

Possibly 4.5 GB of memory to keep costs down.

2 x 6-pin like the K6000.
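The K6000 figure can be checked with the usual theoretical peak single-precision estimate, assuming each CUDA core retires one fused multiply-add (2 FLOPs) per clock: 2880 × 902 MHz × 2 ≈ 5.2 TFLOPS. Applying the same formula to the R9 290X's 2816 stream processors at up to 1000 MHz gives about 5.6 TFLOPS, so a 2880-core part would need roughly 978 MHz just to match it on paper. A minimal sketch (helper names are hypothetical; these are paper peaks, not game performance):

```python
def peak_fp32_tflops(cores: int, clock_mhz: float, flops_per_clock: int = 2) -> float:
    """Theoretical peak FP32 throughput; assumes one FMA (2 FLOPs) per core per clock."""
    return cores * clock_mhz * 1e6 * flops_per_clock / 1e12


def clock_to_match_mhz(cores: int, target_tflops: float, flops_per_clock: int = 2) -> float:
    """Clock (MHz) a chip with `cores` shaders would need to reach `target_tflops`."""
    return target_tflops * 1e12 / (cores * flops_per_clock * 1e6)


if __name__ == "__main__":
    k6000 = peak_fp32_tflops(2880, 902)   # Quadro K6000 figures from the post
    r290x = peak_fp32_tflops(2816, 1000)  # R9 290X: 2816 SPs at up to 1 GHz
    print(f"K6000 ~{k6000:.2f} TFLOPS, 290X ~{r290x:.2f} TFLOPS")
    print(f"2880 cores need ~{clock_to_match_mhz(2880, r290x):.0f} MHz to match")
```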

Caporegime


Maybe, but I called 6GB on a 780 with higher stock and boost clocks to make it the fastest GPU Nvidia had made. If they had called it a Titan Ti, I would have put higher clocks on it with 2880 cores, but as it is a 780 Ti... Complete guesswork and probably miles off.

Soldato

Aye, it's anyone's guess. REALLY annoying

Caporegime

Hope this is true; I'm saving my pennies. Can't resist 2880 cores!!

At $600 (£450) that would be fantastic; it would mean an equally fast R9 290X would be $500 (£370).

I'm remaining cautiously optimistic for more cores than Titan, and 3GB and 6GB variants. I have a feeling the 780 Ti will be a beast; Nvidia will want to properly take on the 290X with more than just an overclocked 780.

Bring on the 780 Ti and 290X benchmarks!!


Soldato


Make that GOOD GDDR5 memory at 1700MHz out of the box, and all of it overclockable to 2000MHz on average as well. That would make the bandwidth match the 290X's at 1250MHz to 1500MHz on its 512-bit bus.
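The bandwidth claim works out if we assume the new card keeps GK110's 384-bit bus (as on Titan and the 780) and that the quoted clocks follow the common GDDR5 convention where the effective per-pin data rate is four times the quoted memory clock (1500 MHz meaning 6 Gbps). Under those assumptions, 1700MHz on 384-bit gives about 326 GB/s against 320 GB/s for the 290X at 1250MHz, and 2000MHz gives 384 GB/s, the same as a 290X at 1500MHz. A minimal sketch (the function name is hypothetical):

```python
def gddr5_bandwidth_gbs(memory_clock_mhz: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s.

    Assumes the GDDR5 convention where the effective data rate per pin
    is 4x the quoted memory clock (e.g. 1500 MHz -> 6 Gbps).
    """
    data_rate_gbps = memory_clock_mhz * 4 / 1000  # per-pin data rate in Gbps
    return data_rate_gbps * bus_width_bits / 8     # all pins, bits -> bytes


if __name__ == "__main__":
    for label, clock, bus in [
        ("GK110 card @ 1700 MHz", 1700, 384),  # 384-bit bus is an assumption
        ("GK110 card @ 2000 MHz", 2000, 384),
        ("290X @ 1250 MHz", 1250, 512),
        ("290X @ 1500 MHz", 1500, 512),
    ]:
        print(f"{label}: {gddr5_bandwidth_gbs(clock, bus):.1f} GB/s")
```

The wider 512-bit bus is why the 290X can get away with slower memory: each extra 128 bits of bus is worth a third more bandwidth at the same clock.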


Soldato


Mantle is a low-level API. G-Sync is monitor synchronisation. If anything, the two technologies combined would be brilliant, although it is unlikely to happen. Not sure how this in any way, shape or form makes Mantle seem any less of a good thing.

because Mantle is vendor AND game specific (and the vendor with the lowest market share, so increasingly unlikely to be used in the majority of games)

whereas Gsync gives you a higher EFFECTIVE frame rate and requires no extra work from the devs, so 100% of titles will support it

people chase minimum 60FPS in games because if you vary from that you get artifacts... mantle gives you a small performance boost so that you can enable that higher setting and still keep 60fps... with gsync you can completely ignore 60fps as being a requirement, yet still not suffer from artifacts... the amount of performance these two technologies make up for is much larger with Gsync
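The "60fps or artifacts" point is easiest to see with classic double-buffered vsync, where the GPU waits for the next refresh before it can start the following frame, so any frame that takes even slightly longer than one refresh interval is held for two. A rough Python sketch, assuming a constant render rate, no triple buffering and illustrative function names: a game rendering at 55fps on a 60 Hz panel drops to 30fps under vsync, whereas an idealised variable-refresh display would simply show 55.

```python
import math


def double_buffered_vsync_fps(render_fps: float, refresh_hz: float = 60.0) -> float:
    """Displayed frame rate under double-buffered vsync at a constant render rate.

    Each frame must occupy a whole number of refresh intervals, so the
    result snaps down to refresh_hz / n (60, 30, 20, 15, ... on a 60 Hz panel).
    """
    intervals_per_frame = math.ceil(refresh_hz / render_fps)
    return refresh_hz / intervals_per_frame


def variable_refresh_fps(render_fps: float, max_refresh_hz: float = 60.0) -> float:
    """Idealised variable refresh: the panel follows the GPU up to its maximum rate."""
    return min(render_fps, max_refresh_hz)


if __name__ == "__main__":
    for fps in (60, 55, 45, 35, 25):
        print(fps, double_buffered_vsync_fps(fps), variable_refresh_fps(fps))
```

Triple buffering softens the 60-to-30 cliff at the cost of extra latency, and real variable-refresh panels also have a minimum refresh rate that this sketch ignores.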

basically, people with AMD cards will be stuck using lower settings in order to maintain artifact free gameplay, even in mantle games

nvidia have also hinted that they have something a bit more like mantle on the way... they already have NVAPI which does include some GPU specific enhancements

Linus Torvalds hates nvidia enough that he's publicly stuck the finger up at them... so for him to say that this is a total game changer, it must be significant

someone commented to me on another forum, why not just use a 2nd GPU that will cost about the same as buying a new monitor... but that is a short term solution and the vast majority of end users shy away from Crossfire / SLI, and particularly if you are in the market for a new monitor anyway, this does become very attractive

Soldato

You're assuming an awful lot in that post, mate. Nobody apart from DICE can currently comment on how much of a performance gain is to be had from Mantle. You simply can't compare the two technologies, and as much as G-Sync will help with smoother transitions between scenes and the annoyance of varying frame rates, there is no real performance gain. G-Sync doesn't in any way render Mantle moot, nor does it overcome the drawbacks of DirectX.

As I said last week, a new low-level API combined with G-Sync would be optimal; the two aren't substitutes for one another.



Caporegime

If GSync is that great, expect AMD to bring their own version out. That's probably one reason why it's not yet proprietary. I'm not sure the monitor companies would want to lock out 40% or so of the user base from a unique selling point of their monitor. If an AMD version does come out, expect it to be cheaper as well.

Soldato


Gsync will be patented, so if AMD want to use it they'll need to licence it, and nvidia make money every time AMD sell a GPU

plenty of 3D Vision monitors are out already, so not sure we need to worry about manufacturer support; Asus, BenQ, ViewSonic and Philips are already on board, with multiple articles saying they are just the first

nvidia don't need to answer Mantle because they are sticking an ARM core on Maxwell, which among other things will allow vastly more draw calls, regardless of API or developer support


Soldato

If GSync is that great, expect AMD to bring their own version out. That's probably one reason why it's not yet proprietary. I'm not sure the monitor companies would want to lock out 40% or so of the user base from a unique selling point of their monitor. If an AMD version does come out, expect it to be cheaper as well.

Panel manufacturers have enough products on the market to safely cover themselves on that Matt. Besides there isn't any stopping you using a G-Sync enabled monitor on AMD hardware. Potentially completely pointless but still true

Caporegime


You really think Nvidia Gsync will continue to work as soon as an AMD GPU is detected? Think PhysX and arbitrary lockouts.

Associate



Agreed. I'm not risking my AMD card getting neutered by some nVidia chip.