6800XT 16GB Red Devil Review

Youtube "Breakfast Review" short review

Introduction

Ah, hello everyone, how’s 2021 going for you so far? If you happen to be in the UK then don’t worry about answering that question, it’s more rhetorical; living in the UK myself I know exactly how bad it is. Today we will be looking at one of the latest generation GPUs. They aren’t exactly easy to come by right now, with both AMD and Nvidia coming up short on supply, which makes reviews of the new gen GPUs a little redundant as potential buyers can’t really do more than window shop. No doubt everyone out there would still like some more details on the options that will, eventually, be available. To that end, today we have a bit of a rarity to review: the Powercolor 6800XT Red Devil. Powercolor, aka TUL, established themselves in 1997, so they have been around almost as long as I have, my humble tech beginnings starting in 1993 at the tender age of nine. With all those years under their belt it is no overstatement to say Powercolor are well established, with a presence in such places as Taiwan, the Netherlands, and the US, and a rich history and experience behind them. So let us dive in to this latest people’s review and find out what this 6800XT Red Devil has to offer.

Gallery

Let’s get to it and see what this card looks like.

Well, first impressions are quite positive. The packaging feels top shelf, with a design that somewhat reminds me of the days of old before AMD bought ATi, when you could always find some seriously cool artwork on ATi products. The accessories aren’t anything substantial, but they never are unless there is a game bundled. You aren’t imagining things here either: there was no driver DVD in the package, which I assume isn’t the normal practice and more an indication of being sent a press sample. Protection offered by the packaging is also superb, with no worries about anything getting damaged. As we all know, some firms will not ship anything out to you in more than the retail packaging, so protection from the retail packaging is paramount to help avoid those damaged in transit situations. Lastly, at a glance we can see that this card has the potential to draw up to 375w from the combined power of the PCIe slot and twin 8 pin PCIe connections, and that it has a dual vBIOS switch for silent and OC modes.

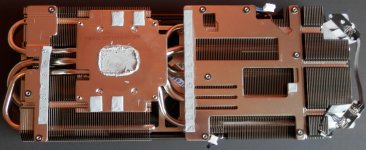

Moving on to a closer inspection, it looks like Powercolor have got anything that generates heat attached in some way to the heatsink, which itself outwardly appears to be the best designed heatsink I’ve seen in a long time, but we shall take a closer look at that later.

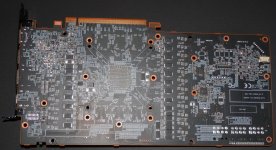

As for the backplate and overall appearance, the card is certainly a nice looking one, but if I’m to be totally honest I liked the design of the Powercolor 5700XT Red Devil better, and the 5700XT Red Dragon was simply sublime. I will point a finger at that warranty sticker though, Powercolor. If you want to put a warranty sticker on that’s absolutely fine, just don’t put it right over one of the screw heads. In the US those stickers are flat out illegal now, as you must surely know, and in the UK such things, while more of a grey area, are heavily frowned upon under statutory rights laws when put in places that prevent basic maintenance.

Meanwhile, taking a look at the rear IO, I’m pleased to see that Powercolor have given the plate some ventilation to help get heat out of the case as fast as possible, something the AMD reference design doesn’t do, and that’s not cool (pun intended, heh). Connectivity on the rear is a somewhat standard affair with 3x DisplayPort connections and 1x HDMI. Nothing really wrong here, but I would have much rather seen a configuration of 2x each of HDMI and DisplayPort connections for a little more flexibility.

For the last part of this showcase we will move to the RGB features of the card and to do that it means firing up the Powercolor Devil Zone utility... why that name Powercolor? There are so many other cooler names you could have gone with that also more clearly describe what the software does, Devil RGB, RGB Devil, or even just RGB Zone to name a few examples off the top of my head.

Here is Devil Zone, or as I’m going to call it to make it sound cooler, DZ. As far as RGB software goes it’s pretty good, but it is definitely a bit buggy and could do with some work. At one point the software seemed to flat out forget that any colour had been selected and applied, which led to the LEDs on the card appearing to be off. Even turning the LEDs off in the software, then supposedly turning them back on with one of the profiles and clicking “Apply”, did not flip the switch, so to speak. I had to manually select a colour and profile effect then hit “Apply”, and then, and only then, did the LEDs on the card flick back into life.

Some adjustment with DZ appears necessary as well due to some colours being not even close to what they are supposed to be, selecting orange for example resulted in more of an off-white colour so it was necessary to manually adjust the RGB values to get an actual orange colour.

Overall DZ has some nice potential but the glitchy nature of it is disappointing.

Laid to Bare

We know the shuffle here, time to tear down the card and see what we have underneath that colossal epic sized heatsink assembly. As always this phase is done last after benchmarking and temperature measurements so results are as representative as possible for what you will get out of the box.

Ok, so we have a few minor violations to talk about here. Firstly, the thermal pads are not large enough to fully cover the GDDR memory. That’s a moderate no-no, Powercolor, and at the price this card is likely to be it’s not something that should be happening. The second thing is the amount of thermal interface material used on the GPU. Being generous is one thing, but it is in such abundance here that it is likely going to be hurting thermals a bit; we will test that later. The third and final point is the thermal pads used on the VRMs. Taking the HSF off as delicately as you can, there is no avoiding the pads literally just falling apart like fluff, and this really shouldn’t be happening. I have no doubt the thermal pads are of extremely high quality, but the pads disintegrating as they appear to isn’t good.

Moving on to technical details, we can see the PCB looks like a slightly cut down 6900XT, which shouldn’t really be much of a surprise. The memory used is Samsung K4ZAF325BM HC16, and the MOSFETs used are International Rectifier TDA21472 rated for 70A with a 1500kHz switching frequency.

Flipping the card over and moving to the voltage regulators, we have the Infineon XDPE132G5D switching regulator and the International Rectifier 35217. I wasn’t able to get hold of a spec sheet for the 35217 so there isn’t much I can tell you about it, but functionally it is likely similar to the 35201. Overall the choice of hardware on this PCB is superb, I have no issues at all here.

Taking a look at the heatsink, we see that this goliath is immense and not a single corner has been cut here. The plate is soldered, not just screwed, fin density is ideal, and airflow should meet less resistance thanks to the fins being vertical. The fans used on the cooler are the 100mm FD10015H12D and the 90mm FD9015U12D, and I have no issues here either. The rear plate was a bit of a different story though: it is there for looks alone and not functionality. I’d have at least liked to have seen it taking some of the thermal load off the primary heatsink by having some thermal pads on the rear side of the memory.

I will use this space to also mention that I noted the screw heads appeared on the slightly worn side... some screwdriver heads could need changing on that manufacturing line, Powercolor. I’m the son of a specialist watchmaker and have done that line of work myself, nothing goes by me unnoticed, I just don’t mention everything if I consider it minor enough, and you guys know me, if it’s more than a faint blip on the radar I’m likely to mention it.

Technical Specifications

Now we have taken a look at the cooler and card itself it is time to have a peek at what this 6800XT is packing under the hood.

Nothing out of the ordinary to see here folks, it’s your everyday 6800XT with a healthy 2090MHz engine clock and a boost frequency of up to 2340MHz. Something that does really irk me though, and a lot of manufacturers do it: what in the blue blazes is wrong with a nice rounded 2100MHz/2350MHz?

Test Setup

We should all be familiar with the test system now but here we go, it would have been nice to do testing on an X570 board but alas due to the global ******* the X570 board that is inbound did not arrive in time for this review.

CPU: AMD Zen 2700X @ 4.1GHz 1.3v

Mainboard: MSI X470 Gaming Pro Carbon

RAM: 2x16GB Crucial Ballistix RGB 3200MHz CL16, optimised timings

GPU: Powercolor 16GB 6800XT Red Devil

Storage: 250GB Hynix SL301 SATA SSD, 250GB & 500GB Asgard NVME PCI-E 3.0 SSDs, 2TB Seagate Barracuda

Opticals: 24x Lite-On iHAS324 DVD-RW, 16x HP BH40N Blu-Ray

Sound: Xonar DX 7.1, Realtek ALC1220

PSU: EVGA 1000w Supernova G2

OS: Windows 10 Pro x64 (latest ISO) and all updates

Case: NZXT Phantom 530

Radeon Settings

Radeon Settings really doesn’t get enough attention or even a mention in reviews, and it should if you ask me, as you can’t have good hardware without good software; the two need to exist in a symbiotic relationship. For all the bugs and glitches Radeon Settings has, it is still a fully featured, comprehensive piece of software, so let’s have a couple of screenshots of it to show it off a little.

The first thing to note is that with the 2020 edition drivers AMD revamped the UI (again), and instead of the wonderful, compact design of the 2019 edition drivers we now have this over inflated and bloated monstrosity of an interface. It is unpleasant and at the very least needs a proper scaling option so people who want a more compact UI have that choice. One thing that is a blessing though is the ability to directly report bugs and issues from the software. This option hasn’t been in the drivers that long, but I can already tell that it does appear to be making a difference: remembering old bugs and glitches and looking for them during testing for this review, a good number of them do seem to have been fixed. There is still a long way to go to get everything ironed out for the architectures the Radeon drivers currently support, but early signs are promising.

Benchmarks

No detail has been spared today for the benchmarks. A lot of you wanted more tests, and more tests you will get... and then some. All results are from running the Adrenalin 20.12.1 drivers, which is why you will never see a massive long list of comparison GPUs from me. Aside from too many comparison GPUs taking focus away from the review hardware in question, I would rather the data I use be current and relevant, not results that are several months or years out of date (unless I need to highlight something) and thus useless anyway, because GPU performance can and does change based on the driver you are using.

So let’s start, synthetic tests first.

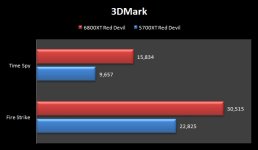

Without question the 6800XT Red Devil is fast. You can see that AMD’s best of last generation is beaten by about 33% even at 1080p in the venerable Fire Strike yardstick test, which is quite some uplift. Moving to the much more GPU dependent Time Spy shows the 6800XT absolutely destroying the 5700XT with a result that is about 65% faster.

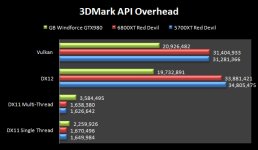

At first look you could be forgiven for thinking these synthetic results have been mixed up, but I assure you they have not: the 5700XT really has returned comparable Vulkan and DX12 results to the 6800XT. DX11 Multi-Thread results in particular are, as always, abysmal, and to highlight that I included results from the now ancient GTX980. There is something wrong here with the DX11 Multi-Thread test, most likely with how AMD’s driver is handling dynamic batching if I had to hazard an explanation, and if this synthetic test carries any weight you would see real world improvements from AMD addressing this.

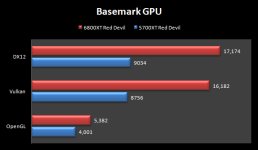

Now we come to a test that certainly divides opinion but Basemark GPU does the same thing as the 3DMark API overhead tests do but also includes a test for OpenGL performance.

I really can’t say there is anything surprising here. The 5700XT looks decidedly midrange (1440p60) while the 6800XT again displays its superiority and the evolutionary gains it has. The OpenGL results are also not surprising to me. AMD have been terrible at OpenGL for years now, or rather I should say AMD’s Windows driver team has been terrible at OpenGL performance for years. Whatever is going on here, they need to take pointers from the Mesa coders, who quite frankly rather badly show up whoever is doing the Windows OpenGL portion of the driver.

Our final paper test introduces something new to the table in Raytracing. Now, games using Raytracing are thin on the ground and benchmarks for RT are even scarcer. Add to that the conundrum that everything is currently optimised and built for Nvidia’s RT and we have a real problem. In the end I settled on what I consider to be the fairest possible RT benchmark to run at this time, as it is hardware agnostic and will run on both AMD and Nvidia hardware: Crytek’s Neon Noir. It’s still not ideal, but for the time being it is the best we have in a non-biased RT benchmark.

Performance here isn’t bad for either GPU. With AMD’s version of Raytracing being Compute Unit based, if we make the (relatively safe, but not guaranteed) assumption that a switch can be flipped for RDNA 1.0 cards, we get to see through the window a bit at potential Raytracing performance for both RDNA 1.0 and 2.0. The takeaway here would be the 5700XT being a solid choice for Raytracing at medium and the 6800XT being a banger for Raytracing at high settings. Depending on the game, game engine, and RT implementation, however, I’d be much more cautious about expecting to max out Raytracing at 4K with the current cards on offer. Another generation or two down the line though and we should be well on our way in this regard.

Overall you can be suitably impressed by either card’s performance if the Neon Noir bench ends up being an accurate gauge of RT performance for AMD, but only time will tell on that front. Probably the first game in which you will get to experience AMD’s Raytracing, dare I say it, will be Cyberpunk 2077 when it gets the proper next generation version released, which is meant to be sometime in 2021.

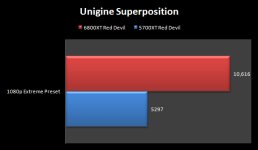

As is the trend the 6800XT flexes the muscles it has here chewing through even Superposition’s Extreme preset like it is nothing more than a minor inconvenience. I’m not easily impressed but the 6800XT impressed me here.

Taking a break from gaming specific tests, I decided Indigo would be interesting to run, and just look at that compute performance. Look at it, until the image is burned in your brain. It has been an extremely long time since GPU generations have shown performance jumps like this.

Alright, we’re all done with synthetic and what if scenarios, now it is time to bust out some real games. Unless otherwise stated, all games are run with their maximum in-game quality options.

Say what you will of AMD and the Radeon Technologies Group, I think you would have to be a special kind of denier not to agree that AMD RTG have climbed a mountain and made great strides in an extremely short timeframe, and these results right here back that up. This is at least RTX3080 levels of performance, ladies and gentlemen, coming from the 6800XT.

Starting with the older Metro Last Light Redux, there is a dual purpose to including it. Firstly, back when it came out it was a real system strainer to run, so it makes for a good baseline and a bit of a legacy check to see how older DX11 titles are running on new hardware and drivers. Secondly, it highlights a point about Metro Exodus, but we will get to that shortly. As the results show, it is smooth sailing in Metro LL without MSAA, but even a small amount of AA would begin to present a challenge even for modern hardware. Fortunately though, AA is something you would likely only consider at 1080p and 1440p, where there is performance to spare in spades.

Moving to Shadow of the Tomb Raider, performance is very solid, but with a GPU such as the 6800XT which numbers you look at for your average FPS can heavily influence results. In my testing at 1080p, for example, the primary numbers reported by the benchmark said 123FPS average, while if you looked further down to the table itself 233FPS average was reported. I opted to go with the conservative number rather than one that was more likely to misrepresent performance. I don’t know why there would be such a wild difference reported, it is just something to bear in mind.

Now for Metro Exodus... performance is about as good as you could expect, which is to say that regardless of whether you have an AMD or Nvidia GPU that framerate isn’t exactly going to blow anyone away. Compare Exodus on Ultra to Metro Last Light Redux at Very High settings and you won’t see that much of a visual improvement in Exodus over LL Redux, yet Exodus runs about 60% worse at 4K with no good technical reason (aside from bad optimisation) for such a performance impact. Add to this that if you go into the configuration file for Exodus and disable TAA, you’ll see that somehow this completely breaks the lighting in the game. It very quickly becomes obvious 4A made a complete botch up of this title from a technical perspective, and it isn’t just limited to AMD hardware, it runs pretty badly on Nvidia hardware as well. The game also takes away all of that graphical flexibility given to you in LL Redux, making it a lazy console port to PC at best.

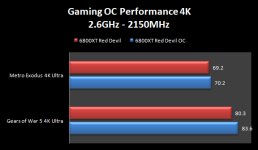

Gears 5 next then. Performance mostly looks good here, but considering the age of the title I had expected to see better framerates at 4K. You can’t call these results bad either, and there is certainly nothing to really talk about: performance is fine even if it is disappointing at 4K, which is probably a driver issue.

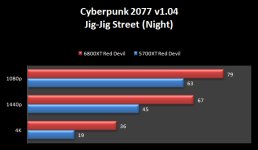

The final game test, getting a chart of its very own because the game is a mess yet everyone is still curious about it, is Cyberpunk 2077. These results were taken with the hex mod on the exe file and all in-game settings at maximum except for screenspace reflections, which are set to “High” as any setting above this just obliterates framerate without any image quality improvement I can tell.

Up to 1440p you can say the game is playable on both the 5700XT and 6800XT, but there is no way you should be trying to run the game at 4K, it just won’t be a nice experience. This is by no means any fault of the GPUs or drivers, this is purely on CDPR. Frankly speaking, CP2077 is not a particularly good looking game, so the fact you can’t even run it at 1440p with a solid 60FPS on anything less than a 6800XT or RTX3080 is a joke. The thought of a next gen version of the game coming makes you shudder with the current gen version running this badly.

Power, Thermals, Noise, & Overclocking

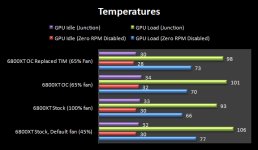

So up first we have thermals. As always for GPUs, temperature results have been taken before stripping the card down, so what you see here is what to expect from manufacturer construction. Where possible I use my trusty DT8380 temperature gun, and where that’s not possible, HWinfo64.

Once again using Cyberpunk 2077 at Jig-Jig street at 1440p, in an extremely well ventilated Phantom 530 fitted with 6x 140mm fans and a 200mm fan, GPU temperatures with the default fan profile remained at a slightly warm 77c at stock frequencies. Idle junction temperatures were also good once that horrible Zero RPM “feature” was disabled. Prior to this the idle temps were around the 45c mark, which is just too high for my liking when even a low RPM value would drop idle temperatures a lot without adding any noise. As things stand, if you choose to disable Zero RPM (and you should) the fans will spin at about 1000RPM, which is inaudible but could do with being fine tuned to about 700RPM to strike a balance of the lowest possible idle temperatures at the lowest possible RPM for those people who are especially... particular, about noise. These sorts of things used to be thought about and considered, and if AMD aren’t going to then AIBs need to start doing it.

Moving to the results despite the overall reasonably good temperatures with default values the junction temperature of 106c is a bit disconcerting; it’s not something to get alarmed by though.

Dialling the fan up to 100%, GPU and edge temperatures dropped considerably, but noise levels also increased substantially. At the maximum 3200RPM the card was certainly audible, but it wasn’t an unpleasant screech or whine coming from the fans.

Next the fans were set to a practical maximum to balance cooling with noise. At 65%, which is about 2000RPM, the fans really were not intrusive. Reducing the fans by 35% did not impact temperatures like you might think either, they really weren’t all that much higher compared to the results at 100%.
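As a quick sanity check on those fan figures, a 65% setting against the 3200RPM maximum lands close to the 2000RPM mark. The sketch below assumes fan speed scales linearly with the percentage set, which real fan curves only approximate, and the function name is made up for illustration.

```python
MAX_FAN_RPM = 3200  # maximum fan speed reported in the review


def rpm_at_duty(duty_percent, max_rpm=MAX_FAN_RPM):
    """Estimate fan RPM, ASSUMING speed scales linearly with the duty setting."""
    if not 0 <= duty_percent <= 100:
        raise ValueError("duty_percent must be between 0 and 100")
    return max_rpm * duty_percent / 100


print(rpm_at_duty(65))  # 2080.0, i.e. "about 2000RPM"
```

The same assumption puts the suggested 700RPM idle speed at roughly a 22% setting.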

For the final thermals test the abundance of TIM was cleaned off and replaced with Halnziye HY-A9, which has the ludicrously high rating of 11W/m·K. With the new TIM application temperatures did not change too much, and if I’m honest my TIM application on this occasion was probably a little thin. Nonetheless, idle and junction temperatures did drop a bit, which in my book is worth the new TIM application alone.

Sound levels have been measured with a digital sound meter here. As you can see, noise levels with the 6800XT Red Devil were not all that intrusive, and if you are a gamer who uses headphones you could likely turn those fans almost right up and not hear anything intrusive. 60% is probably going to be the practical limit for most people who don’t game with headphones.

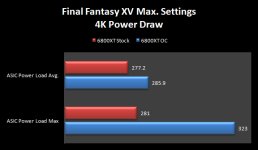

On to the OC and power draw results.

Moving to power draw, we can see that a 277w average at stock is very good. On the other hand, with only a +15% allowance in Wattman the card is capped at a restrictive 323w and throttles far too quickly. This card would fly with the power limit increased to 360w, and I am still at a loss to explain why it doesn’t have that limit.
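To put rough numbers on that, the arithmetic below works backwards from the 323w ceiling and the +15% slider to the implied default power limit, then asks how much slider a 360w limit would need. It is only a sketch: it assumes the slider is a simple percentage on top of the default limit, and the variable names are made up for illustration.

```python
MAX_LIMIT_W = 323        # ceiling with the slider maxed out, from the review
SLIDER_MAX_PERCENT = 15  # largest power limit increase Wattman allows
TARGET_LIMIT_W = 360     # the limit the review argues the card should have

# ASSUMPTION: the slider adds a straight percentage to the default limit.
default_limit_w = MAX_LIMIT_W / (1 + SLIDER_MAX_PERCENT / 100)
slider_needed = (TARGET_LIMIT_W / default_limit_w - 1) * 100

print(f"Implied default limit: {default_limit_w:.1f}w")        # about 280.9w
print(f"Slider needed for 360w: +{slider_needed:.1f}%")        # about +28.2%
```

In other words the slider would need to go to nearly double its current +15% to reach 360w.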

GPU overclocking... has changed. Encrypted vBIOSes lock everything down to the point of absolute control. No more can you increase the power limits of artificially gimped cards even with elaborate registry hacks, as most are locked on a hardware level, GPU clock speeds as good as regulate themselves, and it’s not even possible to optimise stock memory timings anymore without decoding and re-encoding timing straps...

So for "overclocking" then as you can see from the above results gains are so marginal overclocking with Wattman is a total non-starter.

I chose to stick to using Wattman rather than Afterburner to test just how good it is, and unfortunately it really isn’t, not as far as the 6800XT goes. Out of the box voltage is already topping out at 1.15v, and being able to increase TDP by a meagre 15% was as good as useless. As you can imagine, being limited like this resulted in a less than exciting OC session. It was downright boring, pointless, and an utter waste of my time, with the best results with Wattman seemingly being around 2.6GHz for the GPU and 2150MHz for the memory. But now you know, Wattman sucks for AMD’s new GPUs, and I wish Powercolor had their own OC utility.

What a waste of potential. Whatever happened to when you said you wouldn’t make the same artificially limiting mistakes you made with the 5700, AMD? Hmm?

Conclusion

So where do we start with wrapping this people’s review up? I often have a lot to say in my conclusions, and no doubt some of you may find it difficult to follow at times, so I have been considering ways to make conclusions much easier to follow. To that end here is what will happen: I’m going to break things down into chunks, miscellaneous things first, followed by bite size chunks for each criterion scored against.

I’ll start with perhaps the elephant in the room. The Powercolor 6800XT Red Devil has all of the most important things right, but currently AMD have no alternative to Nvidia’s DLSS, which, while not perfect (yet), gives a performance uplift that cannot be ignored, especially at 4K. AMD in collaboration with Microsoft, and probably Sony, are working on a solution to this, but they need to work fast and, perhaps most worryingly, find a way to do it that doesn’t heavily bottleneck the hardware. This latter point, as far as I can see, is a stumbling block: the GPU itself is busy rendering while the Compute Units, in games that will support it, will be busy with Raytracing duties, so where are those free cycles going to come from? The only idea I have would be for AMD to utilise no more than 70% of the Compute Units for RT while the leftover 30% handles AMD’s version of DLSS, whatever that ends up being. The solution isn’t perfect, but this is the reality of things when you don’t have dedicated hardware to do it. Going forward, don’t be surprised if the Compute Units on future generation cards have babies.

As for the power limit, 320w is nowhere near enough scope to see the potential of this card, and I’ll question why this card doesn’t have a power limit of at least 360w: if you take the power the PCIe slot provides (75w) and the combined total of the twin 8 pin PCIe connectors (300w) we arrive at a maximum of 375w. AIBs aren’t to blame for this at all, but real GPU OCing is also non-existent these days, with encrypted vBIOSes diminishing the appeal of such high end hardware. You should be directing questions about this at AMD and Nvidia, because overclocking is a value add and an enticement to buyers.
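The power budget sum above is easy to lay out. This is a minimal sketch using the 75w slot and 150w per 8 pin connector figures; the function and constant names are made up for illustration.

```python
PCIE_SLOT_W = 75   # power available from the PCIe slot
EIGHT_PIN_W = 150  # power available from each 8 pin PCIe connector


def board_power_budget(eight_pin_count):
    """Total power the slot plus the given number of 8 pin connectors can supply."""
    return PCIE_SLOT_W + eight_pin_count * EIGHT_PIN_W


budget = board_power_budget(2)
print(budget)        # 375
print(budget - 320)  # 55w left unused at the current limit
print(budget - 360)  # 15w still in hand at the suggested limit
```

Even the suggested 360w limit would leave a small margin under what the connectors are rated to deliver.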

I’ll end on a note about prices. Now, if you are looking at hardware like this, money is clearly no object: you want the best and will pay for it. There is a crux to think about here though, and you shouldn’t go pointing your fingers at the AIBs, because they have baseline costs they need to cover to turn a profit and keep going. Instead you should be aiming your pointing fingers at both AMD and Nvidia and asking the question: why are GPU prices outrageously expensive these days when you can go out and essentially buy an entire gaming system in the form of Microsoft’s Xbox Series X or the Sony PS5 for £400, which will give you a near identical experience to that of £1000 or more GPUs for the foreseeable future? Inflation and the global ******* alone are not enough to explain this.

Right, we have said all we can about the Powercolor 6800XT Red Devil it is time to get down to scoring.

Aesthetics: 8 / 10

The Powercolor 6800XT is rather attractive. It isn’t as pretty as the Powercolor 5700XT in my opinion, but it is still rather nice and should fit in with most builds.

Hardware Quality: 20 / 20

I really can’t speak highly enough of the component quality on the Red Devil. It is made with some very fine components indeed, such as the XDPE132G5D switching regulator and the International Rectifier 35217 and TDA21472. I would expect no less from a card costing what this surely will.

Cooling: 15 / 20

If I had to describe the cooler on this card in one word, “phenomenal” would be a good one to use. Some points are dropped though due to the unrefined idle RPM speed (700 instead of 1000 would be a happy medium for everybody) and default values that are far too scared of themselves to ramp up a bit to keep the card cooler under load. 2000RPM or so isn’t intrusively loud, and is in fact still rather quiet, while providing quite a large improvement in temperatures. More than these things though, points come off for the multiple minor infractions with the thermal materials. Indeed, I suspect the thermal pads being too small for the GDDR6 is responsible for the memory never actually reaching the maximum OC frequency I set but sitting slightly under it, at 2138MHz.

Performance: 9 / 10

Performance at this moment in time with the 6800XT Red Devil is absolutely out of this world and will remain that way for some time. You can count yourself among the privileged minority should you purchase this card. Despite the overall great performance though, the 4K result in Gears 5 was underwhelming.

Overclocking: 5 / 10

Overclocking with Wattman is terrible and only serves to highlight that at such a premium level as the Red Devil, Powercolor need to develop OC software of their own. This card has so much potential, and without going out of your way to download something like Afterburner you will never see that potential unless AMD improve what they are offering in Wattman, which, while not out of the realms of possibility, is unlikely. It is also hard to ignore the restrictive power limit of 320w on a card that should be comfortable with 360w.

Bundled Software: 6.5 / 10

Devil Zone is quite comprehensive and has potential, but it left me unimpressed with the current buggy nature of it. Without the bugs it would certainly earn a couple more points. The software even has the look and feel to be an all-in-one suite of sorts, if only it had an additional tab for OCing.

Warranty Period & Product Support: 13 / 20

This is an area I’m not enamoured with. It’s not terrible, but nor does it leave you feeling fully satisfied. The largest reason for this, which is something I am absolutely dumbfounded by and have said as much to Powercolor, is their decision to give what I have dubbed “VEG” (Very Expensive Graphics) cards in the box with a purchase of the Red Devil. Without this VEG card and the code on it you cannot get access to their support forum, meaning if you get a problem with a Red Dragon or their standard model GPUs you are somewhat out in the cold for support. You have to rely on returning the card to the etailer or shop you purchased from, and perhaps trying to get the attention of Powercolor via social media, which is kind of like being on the horizon out at sea and flapping your arms about while yelling, trying to be heard by someone on land.

In fairness, Powercolor have acknowledged that they need to do better here and step up to the plate, and are working to improve this side of their game, so I am willing to give them the chance to make good on their word. You just need to open up your forums to all Powercolor GPU owners and let all of your customers have full access to support should they need it.

Final Score: 76.5%
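For anyone who wants to check the maths, the final score is simply the category scores added up against their maximums. A short sketch of that tally, using the figures from the scoring section above:

```python
# (score, maximum) per category, copied from the scoring section above
scores = {
    "Aesthetics": (8, 10),
    "Hardware Quality": (20, 20),
    "Cooling": (15, 20),
    "Performance": (9, 10),
    "Overclocking": (5, 10),
    "Bundled Software": (6.5, 10),
    "Warranty Period & Product Support": (13, 20),
}

total = sum(score for score, _ in scores.values())
maximum = sum(limit for _, limit in scores.values())
percent = 100 * total / maximum

print(f"{total} / {maximum} = {percent:.1f}%")  # 76.5 / 100 = 76.5%
```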

In closing, Powercolor have a really nice card in the 6800XT Red Devil. You can justly be awed by the performance it offers, and the RGB is a nice touch that you even have the option to turn off completely if RGB isn’t your thing. Even the box art makes the card feel special. It’s been a long time since a manufacturer has really focused on trying to revive a bit of that really cool artwork ATi used to be known for, and it does make a difference, both for the people who appreciate it and if you are just trying to stand out in the crowd. I do feel though that with a restrictive upper power limit, Powercolor not currently having their own OC software, and no game bundled at the sorts of prices hardware of this level commands, the card is missing that extra spice factor to really propel it head and shoulders above the rest.
