RDNA2 maxed out at 80 CUs.

RDNA3 maxed out at 96 CUs.

On consoles, the Series X GPU has 52 compute units:

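AMD Xbox Series X GPU Specs (www.techpowerup.com): AMD Scarlett, 1825 MHz, 3328 Cores, 208 TMUs, 64 ROPs, 10240 MB GDDR6, 1750 MHz, 320 bit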

Generally speaking, AMD has been getting better performance as the number of CUs is scaled up on RDNA3, but they kind of hit a wall at 96 CUs due to power constraints (the RX 7900 XTX is already rated at 355 W).

It makes sense (assuming the scaling is decent) that a GPU with 2x the compute units of the Series X console GPU, i.e. 104 CUs, would provide very good 4K performance on both consoles and desktop graphics cards, particularly because the 96 CU RX 7900 XTX already performs well (94 FPS 1% lows at 4K in most games, according to TechSpot's review: https://static.techspot.com/articles-info/2601/bench/4K-p.webp).

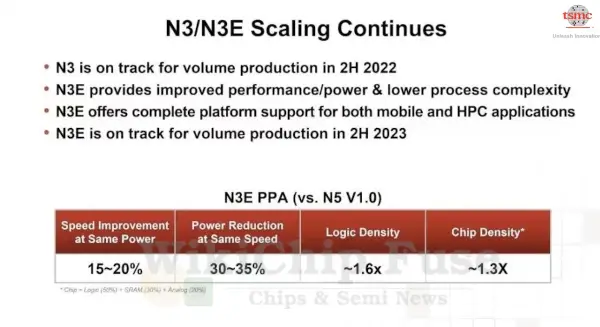
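For anyone who wants to check the arithmetic behind the "2x the Series X" idea, here is a rough Python sketch. It only uses the figures quoted above (52 CUs, 3328 cores, 1825 MHz, 96 CUs for RDNA3). The TFLOPS helper assumes the usual 2 FLOPs per shader per clock and ignores RDNA3's dual-issue, so treat it as a ballpark rather than an official spec. The function names are my own.

```python
# Figures quoted in the post: Series X GPU specs and the RDNA3 ceiling.
SERIES_X_CUS = 52
SERIES_X_SHADERS = 3328
SERIES_X_CLOCK_MHZ = 1825
RDNA3_MAX_CUS = 96


def shaders_per_cu(shaders: int, cus: int) -> int:
    """Shaders (stream processors) per compute unit."""
    return shaders // cus


def fp32_tflops(shaders: int, clock_mhz: float) -> float:
    """Peak FP32 throughput, assuming 2 FLOPs per shader per clock (one FMA).

    Assumption: RDNA3 dual-issue is ignored, so this is a conservative ballpark.
    """
    return shaders * 2 * clock_mhz * 1e6 / 1e12


per_cu = shaders_per_cu(SERIES_X_SHADERS, SERIES_X_CUS)
doubled_cus = 2 * SERIES_X_CUS
doubled_shaders = doubled_cus * per_cu

print(f"Shaders per CU: {per_cu}")
print(f"2x Series X: {doubled_cus} CUs, {doubled_shaders} shaders")
print(f"CUs beyond the RDNA3 maximum: {doubled_cus - RDNA3_MAX_CUS}")
print(f"Series X peak FP32: {fp32_tflops(SERIES_X_SHADERS, SERIES_X_CLOCK_MHZ):.2f} TFLOPS")
print(f"2x Series X at the same clock: {fp32_tflops(doubled_shaders, SERIES_X_CLOCK_MHZ):.2f} TFLOPS")
```

So a doubled Series X works out to 104 CUs, only 8 more than the biggest RDNA3 part, which is why the 96 CU ceiling matters here.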

It seems likely that they will only be able to accomplish this with RDNA4, which I expect to bring a die shrink to either a 3 nm or 4 nm TSMC fabrication process, allowing AMD to reduce power consumption by a considerable amount. The main problem with RDNA3 is that, on 5 nm, it doesn't appear to scale much higher than RDNA2 (96 CUs versus 80).

It's a good bet that GPUs with 100 or more CUs will be a thing, even at the upper mid-range (e.g. the same tier as the 6800 XT), but I think it's much more likely to happen if they are able to use TSMC's future 3 nm fabrication process.

It is true that the clock rate can also be scaled up on desktop GPUs (compared to the Series X GPU, which is already running at around 200 W while clocked at 1825 MHz), but based on AMD's analysis, doing so increases power consumption more than might be considered desirable.
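To illustrate why higher clocks get expensive, here is a minimal sketch using the textbook rule of thumb that dynamic power goes roughly as voltage squared times frequency. This is not AMD's analysis; the assumption that voltage has to rise in proportion to clock is a simplification, and the 20% clock bump is a made-up example value.

```python
def relative_dynamic_power(clock_ratio: float, voltage_exponent: float = 1.0) -> float:
    """Relative dynamic power after scaling the clock by clock_ratio.

    Uses the rule of thumb P ~ V^2 * f.
    Assumption (hypothetical): voltage scales as clock_ratio ** voltage_exponent.
    voltage_exponent = 1.0 means voltage rises in step with clock;
    voltage_exponent = 0.0 means voltage stays fixed.
    """
    voltage_ratio = clock_ratio ** voltage_exponent
    return voltage_ratio ** 2 * clock_ratio


# Hypothetical example: a 20% higher clock than the baseline.
clock_ratio = 1.2
print(f"Fixed voltage:        {relative_dynamic_power(clock_ratio, 0.0):.2f}x power")
print(f"Voltage tracks clock: {relative_dynamic_power(clock_ratio, 1.0):.2f}x power")
```

Under that assumption, a 20% clock increase costs somewhere between 1.2x and about 1.7x the dynamic power, which is why adding CUs at moderate clocks tends to be the more power-friendly route when the process allows it.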
