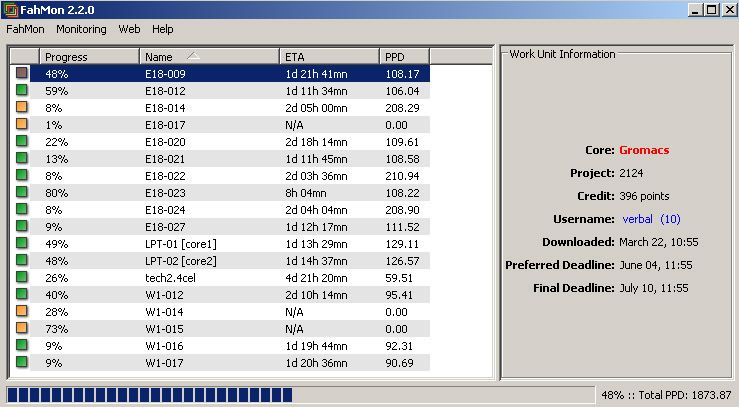

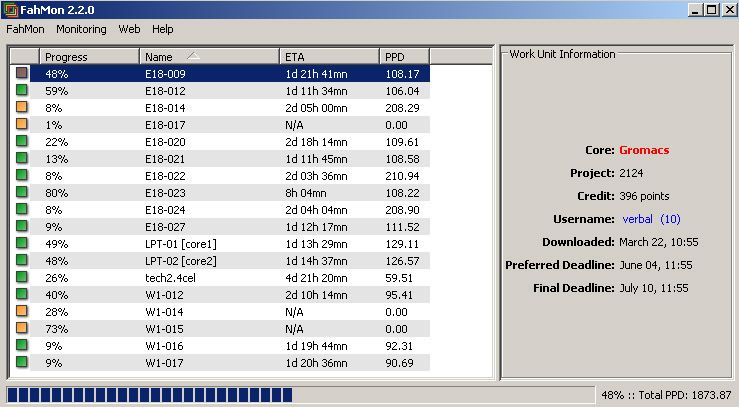

Found this old screen-grab of mine. I spent days setting these up and baby-sitting them.

Mainly 3 GHz Pentium 4 Prescotts and some 2.4 GHz Celerons.

The points I got from these in a month are what you can get in a day from one GPU now (around 50k).