For example, the EPYC 7401 contains 24 cores, 6 cores per Zeppelin and thus 6 cores per NUMA node. When using the default setting of

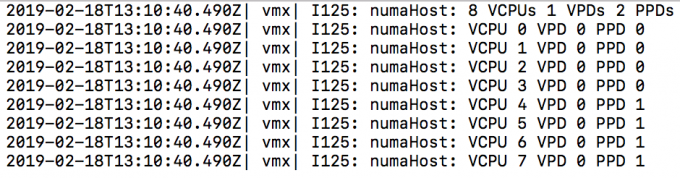

numa.vcpu.min=9, an 8 vCPU VM is automatically configured like this.

Screenshot by @AartKenens
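The arithmetic behind this layout can be sketched in a few lines. This is a simplified model, not the VMkernel's actual placement code: it assumes the scheduler uses the smallest number of physical NUMA clients (PPDs) whose size fits within one NUMA node and spreads the vCPUs evenly across them. The function name `ppd_layout` is hypothetical, and the model ignores memory size, hyperthreading and other advanced settings.

```python
import math


def ppd_layout(vcpus: int, cores_per_node: int) -> list[int]:
    """Hypothetical model of how vCPUs are split into PPDs.

    Assumes the fewest NUMA clients that fit in a node are used,
    with vCPUs distributed as evenly as possible.
    """
    nodes = math.ceil(vcpus / cores_per_node)
    base, extra = divmod(vcpus, nodes)
    # The first `extra` PPDs receive one additional vCPU.
    return [base + 1 if i < extra else base for i in range(nodes)]


# EPYC 7401: 24 cores across 4 Zeppelin dies -> 6 cores per NUMA node.
cores_per_node = 24 // 4
print(ppd_layout(8, cores_per_node))  # [4, 4]
```

Under these assumptions, an 8 vCPU VM does not fit in a single 6-core node, so it is split into two PPDs of 4 vCPUs each.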

A VPD (virtual proximity domain) is the virtual NUMA client exposed to the guest OS, while a PPD (physical proximity domain) is the NUMA client used by the VMkernel CPU scheduler. In this situation, the ESXi scheduler uses two physical NUMA nodes to satisfy CPU and memory requests, while the guest OS perceives the layout as a Uniform Memory Access (UMA) system. In a UMA system, the access time to a memory location is independent of which processor makes the request or which memory chip contains the transferred data; in other words, latency and bandwidth are roughly the same throughout the system. As described earlier in this article, that is not the case here: reading and writing the cache of a remote CCX, or remote memory, is slower than accessing local memory, even within the same Zeppelin.

Setting `numa.vcpu.min=6` creates two VPDs, so ESXi exposes the physical layout to the guest OS. The guest OS and its applications can then optimize memory operations to attain consistent performance.
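The effect of `numa.vcpu.min` can be illustrated with a small model. This sketch assumes a simplified rule: a virtual NUMA topology is exposed only when the VM has at least `numa.vcpu.min` vCPUs, and in that case there is one VPD per PPD; otherwise the guest sees a single VPD (UMA). The function `numa_layout` is hypothetical and ignores other settings such as `numa.vcpu.maxPerVirtualNode`, cores per socket and memory size.

```python
import math


def numa_layout(vcpus: int, cores_per_node: int, numa_vcpu_min: int = 9) -> tuple[int, int]:
    """Hypothetical model returning (ppd_count, vpd_count)."""
    # PPDs: how many physical NUMA nodes the scheduler needs for the vCPUs.
    ppds = math.ceil(vcpus / cores_per_node)
    # VPDs: vNUMA is exposed only if the VM meets the numa.vcpu.min threshold.
    vpds = ppds if vcpus >= numa_vcpu_min else 1
    return ppds, vpds


print(numa_layout(8, 6))                   # (2, 1): guest sees a UMA system
print(numa_layout(8, 6, numa_vcpu_min=6))  # (2, 2): guest sees two NUMA nodes
```

With the default threshold of 9, the 8 vCPU VM spans two PPDs but the guest sees one VPD; lowering the threshold to 6 makes the virtual topology match the physical one.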