Hold on, you can't accuse me of cherry picking (I picked that clip completely at random) and then cherry pick Fallout 4 in response.

I cherry picked that example because the i5 has nothing to prove in any game - it's a rock solid performer and will not be found wanting in anything versus competitors. I can show you loads of benchmarks showing the FX flip-flopping from excellent, to good, to poor performance across various games when compared to the i5, which is always consistently reliable. Can you show me benchmarks showing the FX beating the i5 in a variety of games?

That is why simply showing one or two benchmarks where it does fine, like you've done, paints a totally inaccurate picture of the processor.

It does not make one better than the other, it just makes them different. If the i5 can't keep pace with the FX83## in such a situation, the i3 certainly can't.

In some games, Haswell and Skylake i3s can beat the FX. It doesn't make the i3 a better all-around processor, but it does show the inherent weakness of the FX in single-threaded performance, which a lot of games depend on.

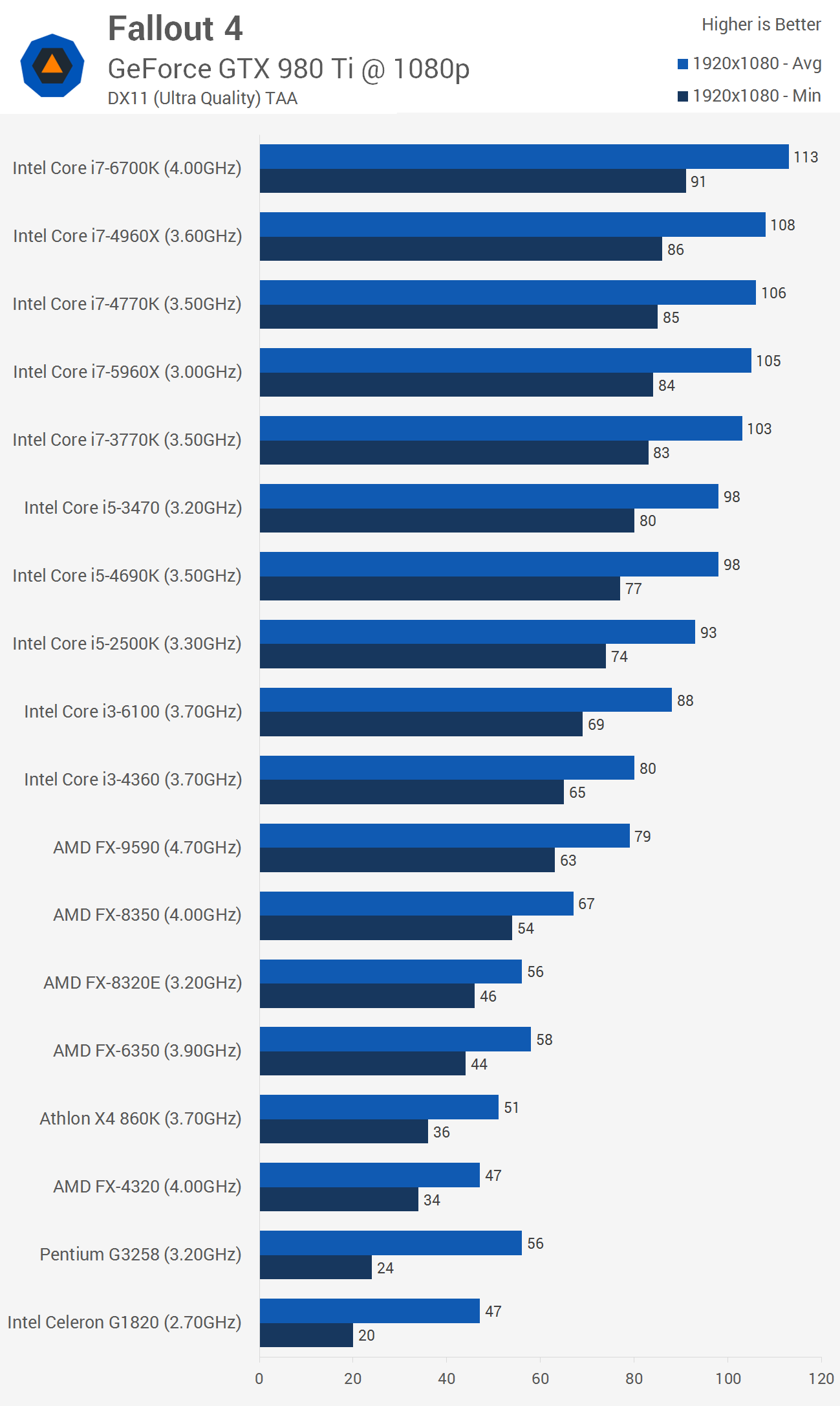

As for Fallout 4, the FX is way slower than the i5 in that game. Set everything to ultra including CPU options and see the FX struggle badly.

It's also pointless to use screen caps from videos. Try running the opening 30 minutes of Fallout 4 on both CPUs, where there aren't any major variances in what occurs, and then examine your average and minimum frame rates for the real picture.
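To show why average and minimum need to be looked at together, here's a rough sketch of how you could work them out from a frame-time log. The function name and the numbers are made up for illustration (they are not real benchmark results), and it assumes your capture tool gives you one frame time in milliseconds per frame.

```python
def fps_summary(frame_times_ms):
    """Return (average_fps, minimum_fps) from per-frame times in milliseconds."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    # Average FPS = frames rendered / total time taken, not the mean of per-frame FPS.
    total_seconds = sum(frame_times_ms) / 1000.0
    average_fps = len(frame_times_ms) / total_seconds
    # Minimum FPS corresponds to the single slowest frame in the run.
    minimum_fps = 1000.0 / max(frame_times_ms)
    return average_fps, minimum_fps


# Hypothetical frame times (ms) for two runs of the same scene.
steady_run = [16.0, 16.0, 16.0, 16.0, 16.0]
spiky_run = [10.0, 10.0, 10.0, 10.0, 40.0]

print(fps_summary(steady_run))
print(fps_summary(spiky_run))
```

Both made-up runs come out at the same average, but the second one has a much lower minimum because of one slow frame, which is exactly what a single screen cap can't show you.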

Also, I know from experience, I build PCs. I've run Fallout 4 on about 10-15 different processors including the i3-6100, FX-6300, FX-8350, i5-2400, i7-2600, i5-3570, i7-6700, etc. To maintain a steady 60fps on an FX processor, whether the 6300 or monster 9570, you have to dial back the CPU-intensive options.

That's not cherry-picking. It is COMMON for the FX-8350 to be fine for gaming. The bottleneck for the huge majority of people is their GPU. They were just supporting their point with an example of a modern and popular game that ran fine with an FX-8350. Nearly all modern popular games will.

Yes, it's common for it to be fine in games, just as it is common for it to be a considerable bottleneck in scenarios where the i5 is not, and there are a few games where it does comparatively very badly. Most games are GPU dependent, but there are also games that are more CPU dependent.

The FX was fine when it came out, but in 2016, you'd want to be actually mad to choose it over any Intel platform for gaming.

When I said it was 'terrible for gaming', I didn't mean it won't play new games. I meant that next to the i5 it's comparatively poor and supports high-end graphics cards badly.

Also, people are always obsessed with average framerate, and while the FX trails the i5 there by margins ranging from small to huge, minimum frames also tell a huge story, as the below benches show.

Fallout 4, everything set to ultra at 1440p, including all CPU-dependent options.

Point has nothing to do with thread. Let's make this AMD vs Nvidia
