> As for Assassin's Creed and DX10.1.
>
> The reason they had 10.1 nerfed in AC was not that nVidia can't do it (nVidia can in fact do most 10.1 features anyway). It was because nVidia is already running at optimal performance on DX10, whereas ATI, due to the way its architecture works, needed 10.1 to get up to full speed... Not a very nice move by nVidia, but it keeps ATI cards just behind them performance-wise in the game instead of just ahead. nVidia cards would see little to no benefit from DX10.1 in that game specifically.

What? This is getting ridiculous. Ever since they patched DX10.1 out of the game, everyone on earth has known it's because Nvidia can't do it and ATi can, and it gives ATi a significant performance advantage in the game.

You start by saying they didn't do it because Nvidia can't do it... then, much fluff later, you say they did it because ATi can do it.

So Nvidia can do DX10.1 just fine but would see no benefit, while ATI can do it and gets a massive benefit.

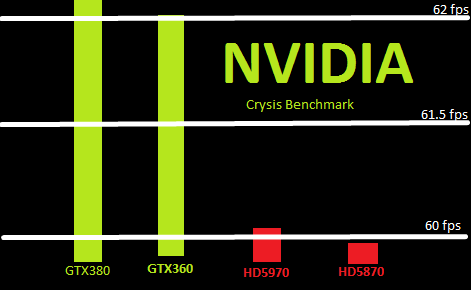

I seriously give up. Also, if we're going with the "I know more than you, I just can't say because of an NDA" line, well, I'm also under an NDA, which says the 380gtx will be 948% slower than a 5870. Yes, we can all say silly things. The simple and very basic fact is that you do not know how fast it is. How do I know that? Because Nvidia DO NOT HAVE FINAL SILICON. The head engineer doesn't know how fast it will be, and Mr Spin CEO doesn't know how fast it is. YOU DO NOT KNOW HOW FAST IT IS. Seriously, stop pretending you do.

Nvidia are MORE than happy to leak numbers to screw another card's launch. They still have not done so, because they still don't know the final specs of the card, let alone the clock speeds. They can't possibly know how much of a clock-speed boost the A3 stepping will get them until it's back, and that will affect yields and the specs of the cards. Yes, the base specs are known.

But suppose it turns out they can't get a single 512-shader part back at over 300MHz, but they can get 30 cores if they drop one shader cluster, though even then only at 600MHz, and if they go for 10% fewer shaders they can eke out 750MHz. We don't know, because they don't know.

With 10% fewer shaders and 10% lower clock speeds, you could be looking at roughly 20% less performance. In all likelihood they will indeed launch a 380gtx at full specs, hopefully at top clocks, but considering the poor clocks and yields on A2 and the lack of improvement in TSMC's process, it's hardly likely to sell in large quantities.
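As a quick sanity check on that figure: if you assume performance scales linearly with shader count times clock speed (a simplification, since real games are also limited by memory bandwidth, ROPs and so on), the two 10% cuts compound multiplicatively rather than adding up. The function names below are purely illustrative.

```python
# Back-of-envelope model. ASSUMPTION: throughput scales linearly with both
# shader count and core clock, which real GPUs only approximate.

def relative_performance(shader_fraction: float, clock_fraction: float) -> float:
    """Performance relative to the full-spec part (1.0 = full spec)."""
    return shader_fraction * clock_fraction


def performance_loss_percent(shader_fraction: float, clock_fraction: float) -> float:
    """Percentage drop versus the full-spec part."""
    return (1.0 - relative_performance(shader_fraction, clock_fraction)) * 100.0


# 10% fewer shaders and 10% lower clocks
loss = performance_loss_percent(0.9, 0.9)
print(f"{loss:.0f}% less performance")  # prints "19% less performance"
```

So under this simple model the combined cut lands at about 19%; anything beyond that would have to come from other bottlenecks.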

The question is where the 360gtx part will fall. On this process they will obviously need a part cut down enough to salvage as many chips as possible, which means it's the 360gtx that is in most danger of being cut down or clocked down. I wouldn't be surprised to see Nvidia launch with three versions for a change: a 375gtx straight away and a heavily cut-down 360gtx to bump the effective yield as much as humanly possible.

But again, Nvidia cannot possibly know where those numbers will land on clocks and shaders, or even whether the memory bus will be cut down. As for whoever is giving you information or leaking you a guess at final performance: if the CEO is spinning his ass off all over the place, what makes you think everyone internally isn't doing the same to the people they work with, so those people don't decide to move towards AMD?

Just because you're under an NDA does NOT mean you're getting accurate info, not least because there isn't any.

This, by the way, is from someone who used to do hardware reviewing, has signed NDAs with Nvidia, ATi, Intel, AMD and a few others, and knows full well that what I'm told under NDA isn't always accurate or final data, whether it comes from Nvidia or AMD. PR is PR, and my guess is that if you're in contact with anyone at Nvidia, it will be their developers helping on a project your company is working on. The developers know the LEAST of anyone in a company like Nvidia or AMD; they will be given the best-case scenario and told to tell everyone how well everything is going.

RAAAAAGE