Soldato

- Joined: 25 Sep 2009
- Posts: 10,248
- Location: Billericay, UK

Now, it's fair to say the GTX480 and GTX470 have come in for a hailstorm of criticism on these boards over their performance and power usage. With that in mind, I wanted to find out just how good Fermi could have been IF it were as efficient as the HD5970, HD5870 and HD5850 in terms of power usage.

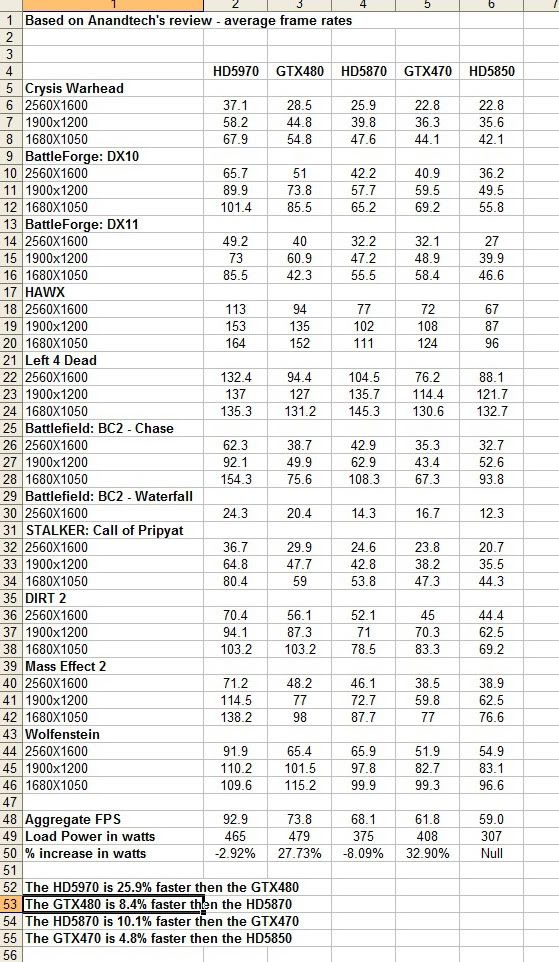

This first chart shows the average frame rate of each card across the games listed below, along with its load power draw in FurMark. I took these numbers from AnandTech, which seems to be a reputable source.

Here you can see the cards listed in performance order (HD5970, GTX480, HD5870, GTX470, HD5850), with their power draw at the bottom along with the percentage performance difference between them. Note that despite the huge power draw of the two Fermi cards, their lead over the respective ATI cards is only in single digits.
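The percentage figures in a chart like this come from a simple relative-difference calculation. Below is a minimal Python sketch, assuming each card is compared with the next one down the ranking (my assumption; the post doesn't spell out which pairs were compared). The function names `percent_increase` and `ladder` are hypothetical, and the example numbers are placeholders, not the AnandTech results.

```python
def percent_increase(faster: float, slower: float) -> float:
    """How much faster `faster` is than `slower`, as a percentage of `slower`."""
    if slower <= 0:
        raise ValueError("baseline frame rate must be positive")
    return (faster - slower) / slower * 100.0


def ladder(cards: list[tuple[str, float]]) -> list[tuple[str, str, float]]:
    """Given (name, avg_fps) pairs sorted fastest first, compare each card with the next one down."""
    return [
        (hi_name, lo_name, percent_increase(hi_fps, lo_fps))
        for (hi_name, hi_fps), (lo_name, lo_fps) in zip(cards, cards[1:])
    ]


if __name__ == "__main__":
    # Hypothetical placeholder averages, NOT the figures from the chart above.
    example = [("Card A", 66.0), ("Card B", 60.0), ("Card C", 50.0)]
    for hi, lo, pct in ladder(example):
        print(f"{hi} vs {lo}: +{pct:.1f}%")
```

With these placeholder numbers, Card A leads Card B by 10% and Card B leads Card C by 20%. A "single digit" lead in the chart means this value came out below 10.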

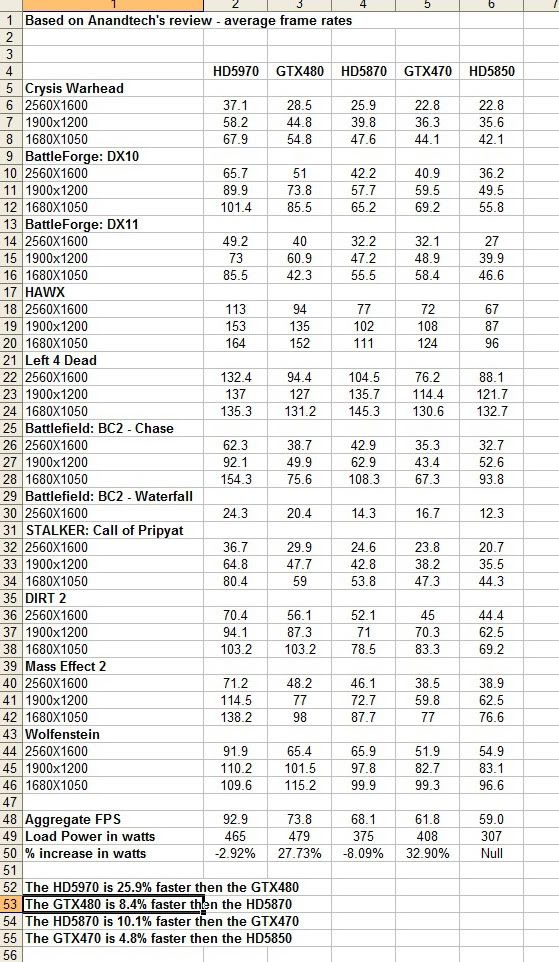

Now put that to one side and let's examine just how good Fermi could have been if the chip were as efficient as ATI's. I've increased the Fermi cards' FPS by the percentage difference in load power between the GTX480 and HD5870, and between the GTX470 and HD5850.
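The adjustment can be sketched in a few lines of Python. This is my reading of the method described: work out how much more power the Nvidia card draws than its ATI counterpart as a percentage, then raise the Nvidia card's FPS by that same percentage. The function names are hypothetical and the numbers in the usage example are placeholders, not the chart's values.

```python
def power_gap_pct(nvidia_watts: float, ati_watts: float) -> float:
    """Percentage by which the Nvidia card's load power exceeds the ATI card's."""
    if ati_watts <= 0:
        raise ValueError("ATI power draw must be positive")
    return (nvidia_watts - ati_watts) / ati_watts * 100.0


def what_if_fps(nvidia_fps: float, nvidia_watts: float, ati_watts: float) -> float:
    """Raise the Nvidia card's FPS by the power gap (assumed reading of the method)."""
    return nvidia_fps * (1.0 + power_gap_pct(nvidia_watts, ati_watts) / 100.0)


if __name__ == "__main__":
    # Hypothetical placeholder values: 50 fps at 300 W versus an ATI card at 200 W.
    print(power_gap_pct(300.0, 200.0))      # 50.0
    print(what_if_fps(50.0, 300.0, 200.0))  # 75.0
```

Algebraically, this is the same as multiplying the Fermi card's FPS by the ratio of the two power draws (300 W / 200 W = 1.5 in the placeholder example).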

I can't help but feel Nvidia has missed the boat here. The GTX480 could have been as fast as the HD5970 (by a cat's whisker) and a lot cheaper to boot, and the GTX470 would have forced ATI to make serious price cuts on its two other cards.

) that a better picture would be obtained without the oddly VRAM-limited tests. I think throwing out the PhysX tests would also help, though that would obviously reduce Nvidia's advantage somewhat.