So no technical explanation of how a digital signal from a digital source can differ to the extent that the contrast is different, etc.? No actual tests you can refer to, other than claims that you've definitely seen differences in a sighted test yourself?

I've already told you that the standard industry tests for these are the broadcast standard test patterns. Do you even understand what that phrase means: "broadcast standard"?

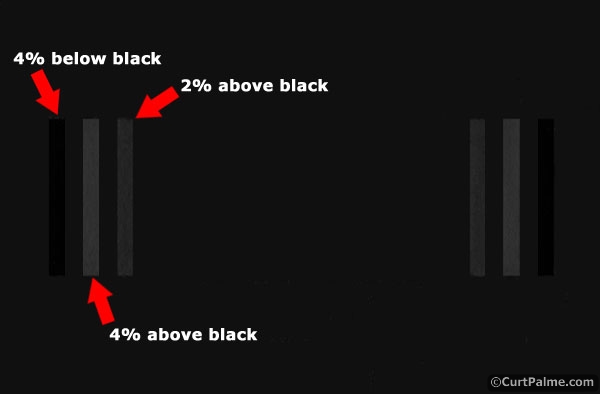

Here's a PLUGE pattern:

This is a standard test pattern for adjusting a video monitor's brightness control. Speak to the engineers at your local TV studio; go to any university or college that teaches electronics engineering; speak to any broadcast editor or cameraman; they'll all tell you the same. The METHOD is to LOOK at the screen and make the necessary adjustments. This is done with a series of patterns appropriate to each adjustment. So you banging on about "tests you can refer to" when I've already told you what every engineer uses as a standard method for picture evaluation just goes to underline how uneducated you are on the topic.
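For anyone who wants the logic of reading a PLUGE pattern spelled out, here is a rough sketch. It assumes 8-bit video-range luma (16 = black) and a deliberately crude display model in which the brightness control simply adds an offset and anything at or below black emits no light. The bar levels (12 and 20), the offsets and the function names are illustrative assumptions, not values from any particular test disc or generator.

```python
# Simplified PLUGE-style check on 8-bit video-range luma (16 = reference black).
BLACK = 16
BELOW_BLACK = 12  # illustrative "blacker than black" bar
ABOVE_BLACK = 20  # illustrative "just above black" bar


def light_output(code, brightness_offset):
    """Crude display model: offset the signal, then clip at zero light."""
    return max(0, code + brightness_offset - BLACK)


def visible_bars(brightness_offset):
    """Return (below_black_visible, above_black_visible) against the black background."""
    background = light_output(BLACK, brightness_offset)
    below = light_output(BELOW_BLACK, brightness_offset)
    above = light_output(ABOVE_BLACK, brightness_offset)
    return (below != background, above != background)


if __name__ == "__main__":
    for offset in (-6, 0, 6):
        print(offset, visible_bars(offset))
```

In this toy model, brightness is set correctly when the below-black bar has just disappeared into the background while the above-black bar is still visible. If both bars show, brightness is too high; if neither shows, shadow detail is being crushed. That is the judgement you make by eye on the real pattern.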

While you may not be lying a more realistic conclusion is either that one of the players is actually changing/attempting to enhance the signal in some way or simply

Halle-blinking-lujah!!!! That's exactly what I've been saying. The player changes the signal before it is transmitted via HDMI. So, a PS3 can look different to a standalone Blu-ray player; and it's not enough to say that "the picture is 0's and 1's so it must be the same".

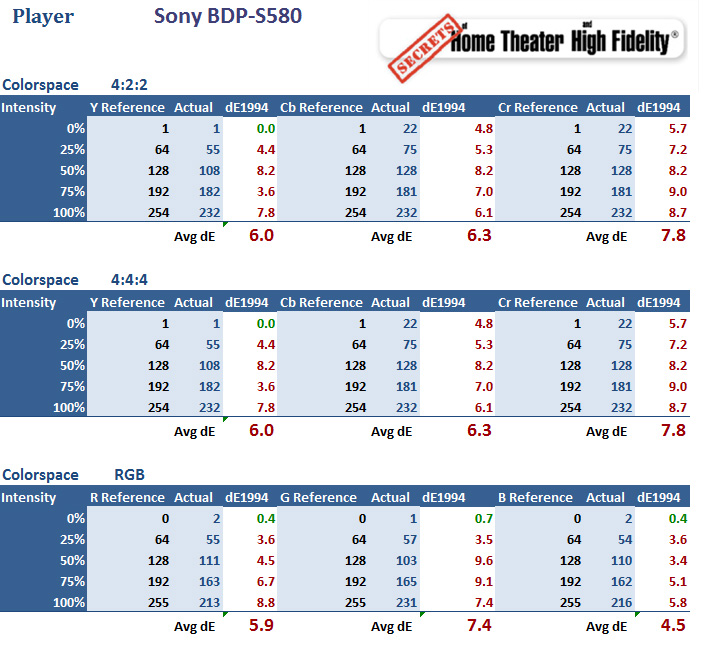

As for your comment about the differences not being there.... The test patterns on Digital Video Essentials, Spears & Munsil, my own reference test pattern generator, an Accupel DVG-5000 or any other reference test pattern source provide the correct tools to allow professionals and amateurs alike to make objective assessments of video performance in every area for which there is a user control. It's simple. One just has to read the appropriate test pattern.

This is a colour contrast test pattern. If you played the Blu-ray version of this through your own system you'd see certain sections of the colour bars lose definition between the brightness levels.

* Bear in mind, these are images lifted from the web, and you are probably watching them on a PC monitor rather than a TV. Further, I have no idea how they were captured or whether they have been enhanced or otherwise altered. Accordingly, they probably no longer represent reference standard specifications.

The claim you're making is about as dubious as me claiming that the colours on the BBC web page are so much clearer now I've upgraded my motherboard/processor.

I'm not making a claim; I'm stating a fact. Blu-ray players process the video signal before it leaves the player. This results in subtle but visible differences in the picture performance.
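To show why "it's all 0's and 1's" doesn't settle it, here's a minimal sketch of two hypothetical players fed the same source values. One passes the 8-bit video levels through untouched; the other applies a small contrast boost and clips at reference white (235). The 1.1 gain, the ramp values and the function names are assumptions made up for illustration, not measurements of any real player.

```python
# Same digital source, two hypothetical players, different digital output.
BLACK, WHITE = 16, 235  # 8-bit video-range reference levels


def player_a(levels):
    """Hypothetical player that passes the decoded levels through unchanged."""
    return list(levels)


def player_b(levels, gain=1.1):
    """Hypothetical player that boosts contrast about black, then clips to the video range."""
    out = []
    for v in levels:
        boosted = round(BLACK + (v - BLACK) * gain)
        out.append(min(WHITE, max(BLACK, boosted)))
    return out


if __name__ == "__main__":
    ramp = [16, 60, 120, 180, 225, 230, 235]  # steps of a brightness ramp
    print("A:", player_a(ramp))
    print("B:", player_b(ramp))
    print("distinct steps A/B:", len(set(player_a(ramp))), len(set(player_b(ramp))))
```

Both outputs are perfectly valid digital signals and both arrive at the TV bit-perfect over HDMI, yet in the second one the top steps of the ramp have merged into a single level. That is the kind of loss of definition between brightness levels that a contrast test pattern is designed to reveal.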

As for what happens with your motherboard/processor.... who knows. I couldn't comment definitively until the video output was measured and assessed.

It's digital content - they should look exactly the same - if you think you're seeing differences then you're being hopelessly naive in just jumping to the conclusion right away that one player is superior to the other. It's much more likely that your test is flawed or that the test hasn't been conducted fairly, **one of the players is manipulating the signal in some way** etc...

The bold bit sums up my position, and it is why I assert that, in calibrations using broadcast standard test patterns, not all Blu-ray players are equal.