Hi all,

I have been meaning to find out definitively whether pushing my R9 290X overclocks really made a difference. Most people assume that pushing until you get artifacts and then backing off slightly is the best way to find a good 24/7 overclock. What I actually wanted to see was whether there is a substantial difference between pushing for a maximum OC and settling for a moderate one.

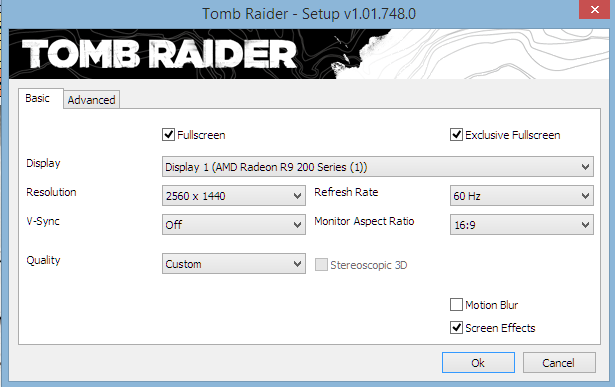

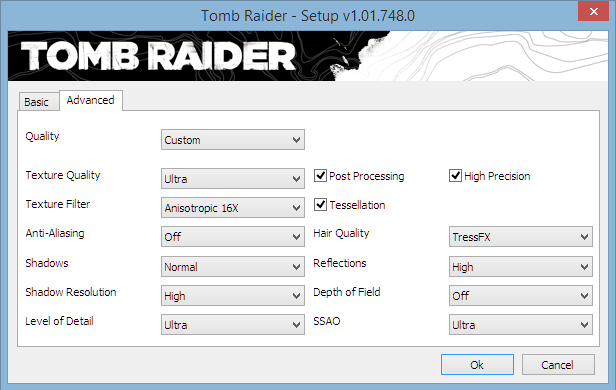

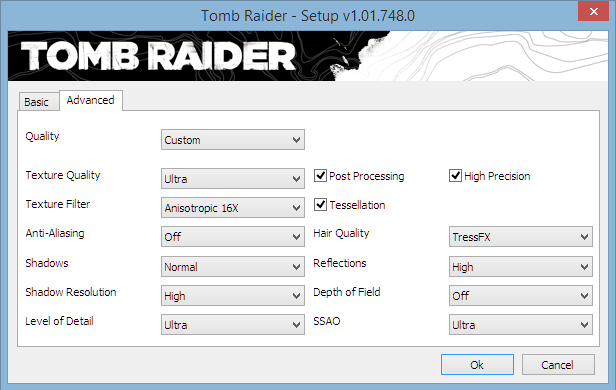

I used the Tomb Raider benchmark for my testing, with max IQ settings in RadeonPro. I also used SweetFX (via RadeonPro) to enable image sharpening and SMAA, so no driver optimisations were used at all and all image enhancements were handled by SweetFX. Finally, I set the in-game graphics options to my own personal preference, as follows.

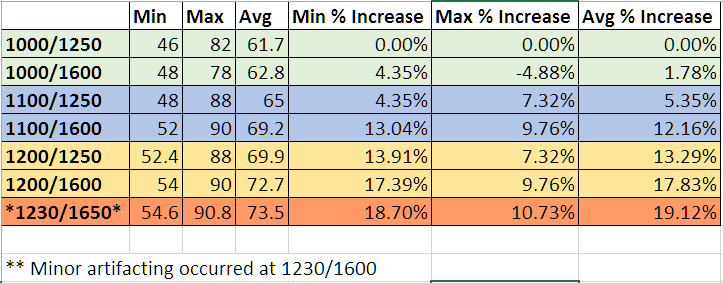

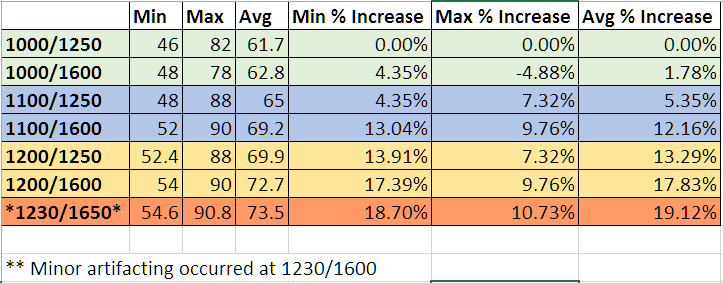

Using the above settings, I set about testing various core clock and VRAM speeds. My results are recorded in the table below.

As can be seen, the max I could reach without artifacting was 1200/1600. I was also able to complete a benchmark run at 1230/1650 and have included that result for reference. I found the following interesting:

- To achieve the best results, you need to increase both the core and VRAM clocks.

- Increasing one while ignoring the other slightly reduces the performance gain.

- The step from 1100/1600 to 1200/1600 looks noticeable on paper, but it makes no perceptible difference in gameplay.

- By contrast, the step from 1000/1600 to 1100/1600 made a definite, noticeable difference even during gameplay.

I have concluded that there are diminishing returns in truly pushing a GPU to its limits. Pushing a GPU until it artifacts and then backing off slightly is not always the best choice. For example, the fans at 1100/1600 were noticeably quieter than what was needed at 1200/1600, so the very marginal ~3% performance gain was not worth the extra noise.
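To put that ~3% in context, here is a rough Python sketch of the arithmetic, comparing the percentage increase in core clock with the percentage increase in performance. The helper names are my own, and 3.0 simply stands in for the approximate "~3%" figure above; the exact fps numbers are in the results table.

```python
def percent_gain(old: float, new: float) -> float:
    """Percentage increase going from old to new."""
    return (new - old) / old * 100.0


def scaling_efficiency(clock_gain_pct: float, perf_gain_pct: float) -> float:
    """Fraction of a clock increase that shows up as extra performance.

    1.0 would mean performance scaled perfectly with clock speed;
    values well below 1.0 indicate diminishing returns.
    """
    return perf_gain_pct / clock_gain_pct


# Core clock step: 1100 MHz -> 1200 MHz, with VRAM held at 1600 MHz.
core_gain = percent_gain(1100, 1200)

# Only an approximate "~3%" gain is quoted for this step, so 3.0 is an approximation.
efficiency = scaling_efficiency(core_gain, 3.0)

print(f"Core clock increase: {core_gain:.1f}%")  # Core clock increase: 9.1%
print(f"Scaling efficiency: {efficiency:.2f}")   # Scaling efficiency: 0.33
```

In other words, roughly a 9% core clock bump bought only about a third of that as extra performance, which is where the extra fan noise stops paying for itself.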

I know most people here will already know this, but I felt it might be useful for newcomers to see.