I did watch this bit earlier in the week. Interesting point about the 9-240 range, though I think he did say that would naturally stick a premium on the monitor, as it's outside the usual operating ranges of 24/30/59/60/75/120. I'd be interested to see what range the first lot of units actually cover. He seemed quite careful not to mention any specific monitor manufacturers, which makes you wonder how many are actually on board with pushing this?

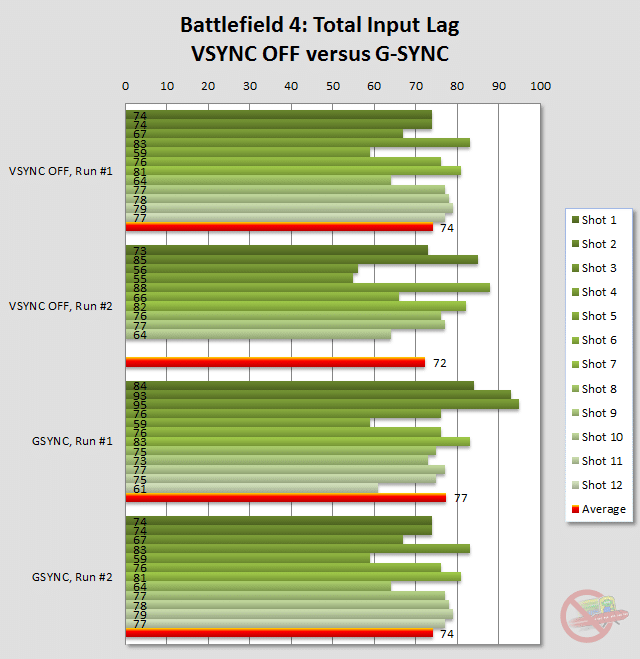

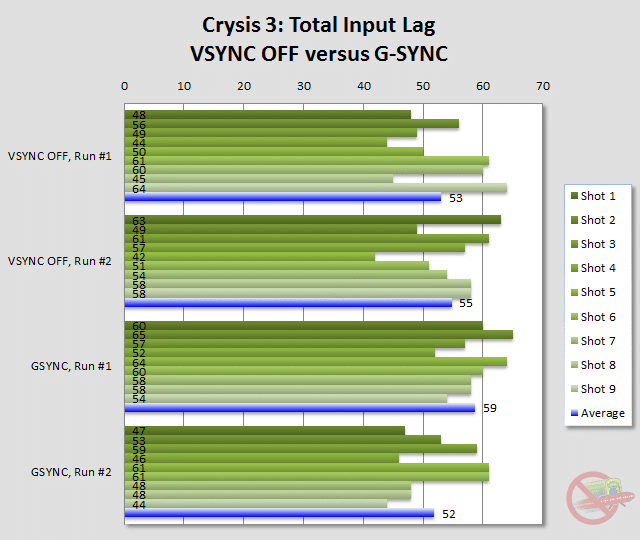

As for the G-Sync latency thing, idk tbh. No one else has mentioned latency, other than to say the complete opposite of what this guy is saying: that it removes all additional frame lag, leaving the panel as the only limit. Now this fella claims it adds lag?

I do have to say though, the fact it's a driver-driven solution worries me slightly. **** ups are easily made by any driver vendor: add a new feature or fix an existing bug, break 3 other things, then wait 3 months for a driver that does everything.

Edit: apologies for any inaccuracies, it was days ago that I watched the video, or skipped through it at least.

Apparently because G-Sync uses a frame buffer between the GPU and screen it adds 1 frame to the latency.

I guess it's reducing latency when you compare it to running V-Sync, because that buffers 2 frames on the GPU, or 3 if you use Triple Buffering.

FreeSync does not use buffering of any kind.
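
To put rough numbers on those frame counts, here's a quick back-of-the-envelope sketch. It assumes each buffered frame costs one full refresh interval (my simplification, not something stated in the video), and it just takes the buffer counts claimed in this thread at face value. The function names are made up for illustration.

```python
# Rough latency estimate: buffered frames x time per frame.
# ASSUMPTION: every buffered frame adds exactly one refresh interval of delay.
# The frame counts below are the ones claimed in this thread, not measurements.

def frame_time_ms(refresh_hz: float) -> float:
    """Duration of one refresh interval in milliseconds."""
    return 1000.0 / refresh_hz


def added_latency_ms(buffered_frames: int, refresh_hz: float) -> float:
    """Extra delay from holding `buffered_frames` frames at `refresh_hz`."""
    return buffered_frames * frame_time_ms(refresh_hz)


# Buffer counts as claimed above (hypothetical until someone measures them).
BUFFERED_FRAMES = {
    "FreeSync": 0,
    "G-Sync": 1,
    "V-Sync (double buffered)": 2,
    "V-Sync (triple buffered)": 3,
}

if __name__ == "__main__":
    for hz in (60, 120):
        for name, frames in BUFFERED_FRAMES.items():
            print(f"{hz} Hz, {name}: ~{added_latency_ms(frames, hz):.1f} ms added")
```

If those counts are right, one buffered frame at 60 Hz works out to roughly 16.7 ms, so the gap would be real but smaller than what V-Sync adds. Actual figures would need proper testing once hardware is out.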