Soldato

- Joined: 28 Sep 2014
- Posts: 3,659
- Location: Scotland

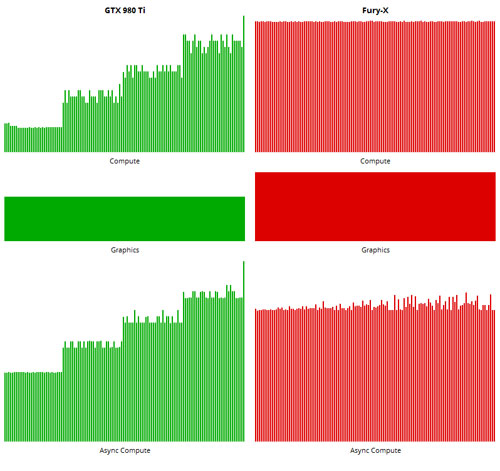

It's not something that has been a big focus until very recently. nVidia managed to get pre-emption working for compute stuff in general (on hardware that theoretically shouldn't support it at all), but last I heard they hadn't addressed the situation with dynamic parallelism (which is relevant to async). I wouldn't count them out when it actually comes to it being needed, though. (EDIT: It's also currently only enabled for debugging use AFAIK, so it won't perform well in gaming use as things stand.)

The reason it didn't perform well is that, as Nvidia told Oxide, Async Compute is not fully implemented in the driver yet.

http://www.overclock.net/t/1569897/...ingularity-dx12-benchmarks/2130#post_24379702

Regarding Async compute, a couple of points on this. First, though we are the first D3D12 title, I wouldn't hold us up as the prime example of this feature. There are probably better demonstrations of it. This is a pretty complex topic and to fully understand it will require significant understanding of the particular GPU in question that only an IHV can provide. I certainly wouldn't hold Ashes up as the premier example of this feature.

We actually just chatted with Nvidia about Async Compute, indeed the driver hasn't fully implemented it yet, but it appeared like it was. We are working closely with them as they fully implement Async Compute. We'll keep everyone posted as we learn more.

EDIT: At the end of the day Maxwell is going to be a liability when it comes to DX12. It's been savaged to be very good at DX11 and that is it, but anyone who really cares about DX12 performance won't want to be on any of the current GPUs if developers actually make proper use of what DX12 can bring to the table (i.e. AMD supported tessellation when nVidia didn't at all, and we all know what happened there).

You, David Kanter and others should not jump to conclusions. Maxwell is not going to be a liability when it comes to DX12 once Async Compute is fully implemented in the driver. Nvidia did the same thing with the Star Swarm benchmark. When it came out, AMD bragged that Nvidia's DirectX 11 would never match Mantle performance. Nvidia was very angry at AMD's smear marketing and then spent months reducing CPU overhead in the driver. When Nvidia released its DirectX 11 "wonder driver", Nvidia's DirectX 11 destroyed AMD's Mantle in Star Swarm.

It will be very interesting to see how well Async Compute performs once it is fully implemented. It will probably match or beat AMD on latency.

nvidia has broken async shaders in maxwell, and VR hardware is kinda weak? No problem, you just need to upgrade next year to next gen cards which will have everything fixed for you

Yeah, consumer friendly.