So should we ignore rasterization? If so, why?

Certainly not, but at the same time it's not smart to focus only on rasterisation.

So should we ignore rasterization? If so, why?

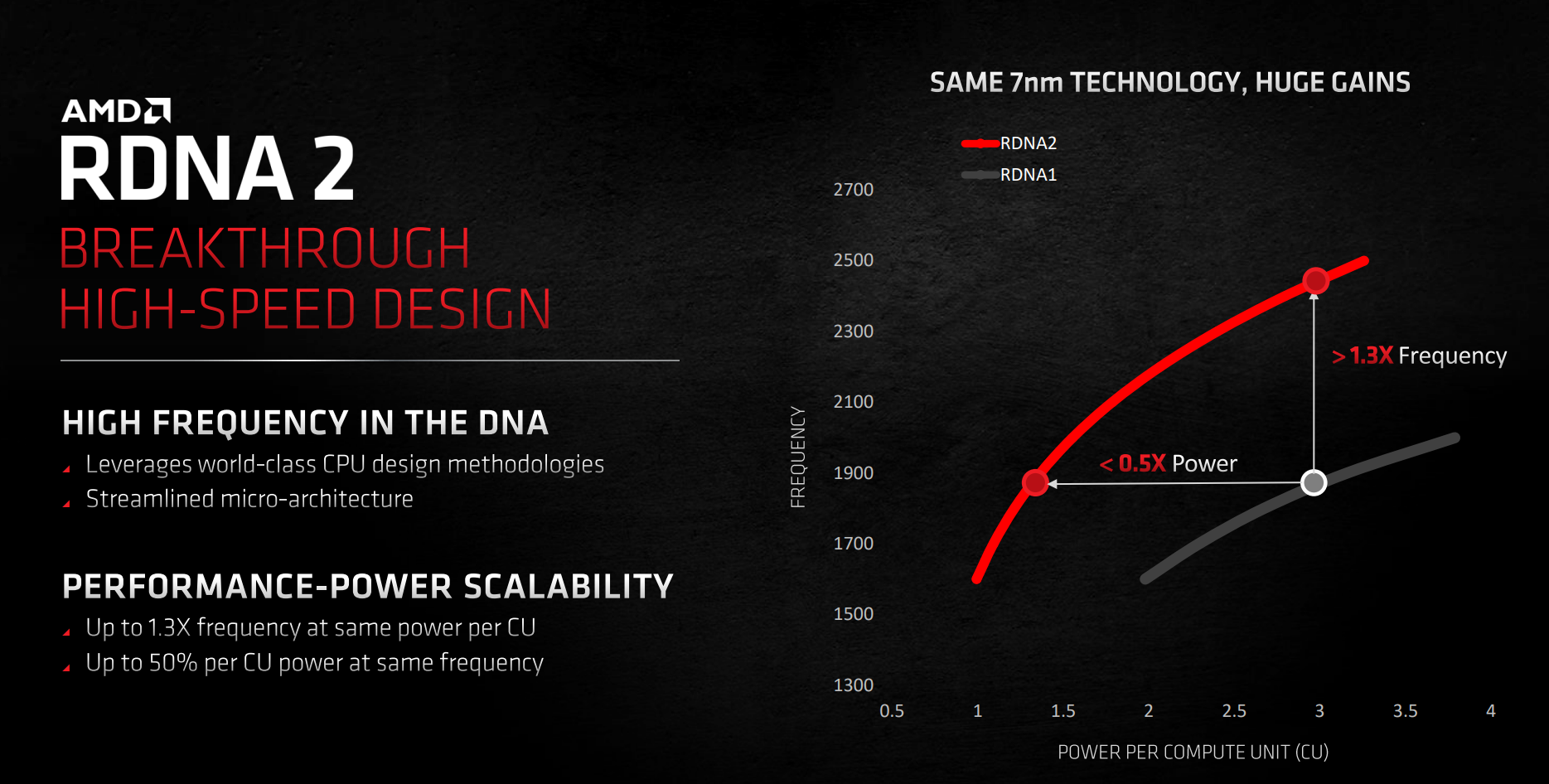

I thought it was shown when RDNA 2 was first released that the gains in efficiency between RDNA 1 and 2 were too great to be down to just being on a better node?

I am not saying Nvidia is going to be more efficient or that AMD is going to be more efficient.

All I am saying is that we don't know, and we can't use rumours or the current generation of cards to predict which company will have the better power efficiency.

And by power efficiency, I mean performance per watt.

The RX 6800 (non-XT) used about 10% more power than the 5700XT while delivering about 60% more performance, on the same node.
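For what it's worth, those two rough figures can be turned into a performance-per-watt ratio. This is only a minimal sketch: it takes the roughly +60% performance and +10% power numbers above at face value (they are forum estimates, not measurements), and the helper name is made up for illustration.

```python
def perf_per_watt_gain(perf_ratio: float, power_ratio: float) -> float:
    """Relative performance per watt of the new card versus the old one.

    perf_ratio  -- new performance / old performance (1.60 means +60%)
    power_ratio -- new power draw / old power draw (1.10 means +10%)
    """
    if perf_ratio <= 0 or power_ratio <= 0:
        raise ValueError("ratios must be positive")
    return perf_ratio / power_ratio


# Assumed inputs taken from the post above: RX 6800 vs 5700XT.
gain = perf_per_watt_gain(perf_ratio=1.60, power_ratio=1.10)
print(f"perf/W: {gain:.2f}x, i.e. about {(gain - 1) * 100:.0f}% better")
# prints: perf/W: 1.45x, i.e. about 45% better
```

So if those numbers are in the right ballpark, that is roughly a 45% perf/W improvement with no node change to credit for it.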

Again, as with Intel 14nm vs GloFo 14nm, I'd like to see people try to explain that away without agreeing that architecture has anything to do with it.

5700XT and RX 6800 are on the same node.

RDNA2 performance per watt was hugely increased over RDNA1. Part of that was imo the 7nm process, but also architectural improvements for sure. AMD had not focused on power efficiency for many generations of GPU and had previously been behind Nv in this metric, which is why Nv were the better choice for laptop GPUs for many years. It looks like they woke up, made all the changes they needed in a single generation, and took a significant lead in this area.

We can discuss it till the cows come home, but until the cards are in the hands of independent reviewers we just will not know.

Sure, and architecture matters just as much as, if not more than, what node it's on when it comes to power efficiency. I'll say it again: the 1800X, with 8 cores and 16 threads, had the same performance as Intel's 8-core, 16-thread equivalent but used half the power, despite Intel being on an arguably better node. That is a massive difference in architectural efficiency.

It's one of the reasons Intel cannot keep up with AMD in the data centre today, not even close. Intel are at least 2 generations behind.

5700XT and RX 6800 are on the same node

I just checked and you are right. Had slipped past me what node the 5700 cards were on, I assumed it was a lesser one because performance was mediocre.

AMD did really shake some special sauce on RDNA2 and really caught up in a single gen. Very impressive.

Why are you comparing with Intel and CPUs? Nvidia isn't Intel and CPUs aren't GPUs.

Second, I never said that RDNA 2's architecture was good or bad, and I know it's important. But we know that the 8nm node that Ampere is using isn't even close to being as good as the original TSMC 7nm process that AMD used for RDNA 1. The node that RDNA 2 is on is better again.

You can't fully compare the architectures and say how much more efficient one is than the other without them being on roughly the same node. Even your CPU example is proof of that: we don't know how much more efficient AMD's architecture actually is than Intel's in that case. On the same node, AMD's efficiency lead would be even greater.
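To put that in concrete terms, here is a rough sketch of why the node muddies the comparison. It assumes, purely for illustration, that the observed perf/W gap is just an architecture factor multiplied by a node factor; real silicon is not that tidy. The 2.0 comes from the "same performance, half the power" claim above, and the node factors are hypothetical placeholders, not real figures for any process.

```python
def architecture_only_ratio(observed_ratio: float, node_ratio: float) -> float:
    """Back out the architecture contribution under a simple multiplicative model.

    Assumption: observed = architecture * node, so architecture = observed / node.
    node_ratio < 1 means the first chip sits on the weaker node.
    """
    if observed_ratio <= 0 or node_ratio <= 0:
        raise ValueError("ratios must be positive")
    return observed_ratio / node_ratio


observed = 2.0  # claimed in the thread: same performance at half the power

# Hypothetical node factors only; nobody in this thread knows the real value.
for node_ratio in (0.8, 0.9, 1.0):
    arch = architecture_only_ratio(observed, node_ratio)
    print(f"node factor {node_ratio:.1f} -> architecture factor {arch:.2f}x")
```

The point is only that the architecture number moves with whatever node factor you plug in, so without a same-node comparison you can't pin it down.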

Surely you have to admit that Nvidia's Ampere GPUs would be much more power efficient if they were on the same node as AMD's RDNA 2?

The slide says it is the same. If there were any efficiency gains in the node between RDNA 1 and 2, they were probably so small that AMD didn't even bother to mention them.

No, you were right, there was definitely a node difference too. And you are doubly right because RDNA 2 was a big jump architecturally as well.

Anyone know why AMD are getting away with smaller dies than Nvidia? Is it process or just a different design?

Assuming you are talking about current GPUs, I believe it is both, weighted more towards architecture, as AMD stripped out the compute stuff that was in GCN.

Ok, makes sense, all those tensor and CUDA cores must take up some space. Thinking about it, I did hear something about AMD splitting their cards. Makes sense if you're just looking at it for gaming. Keep the compute for the professional users.