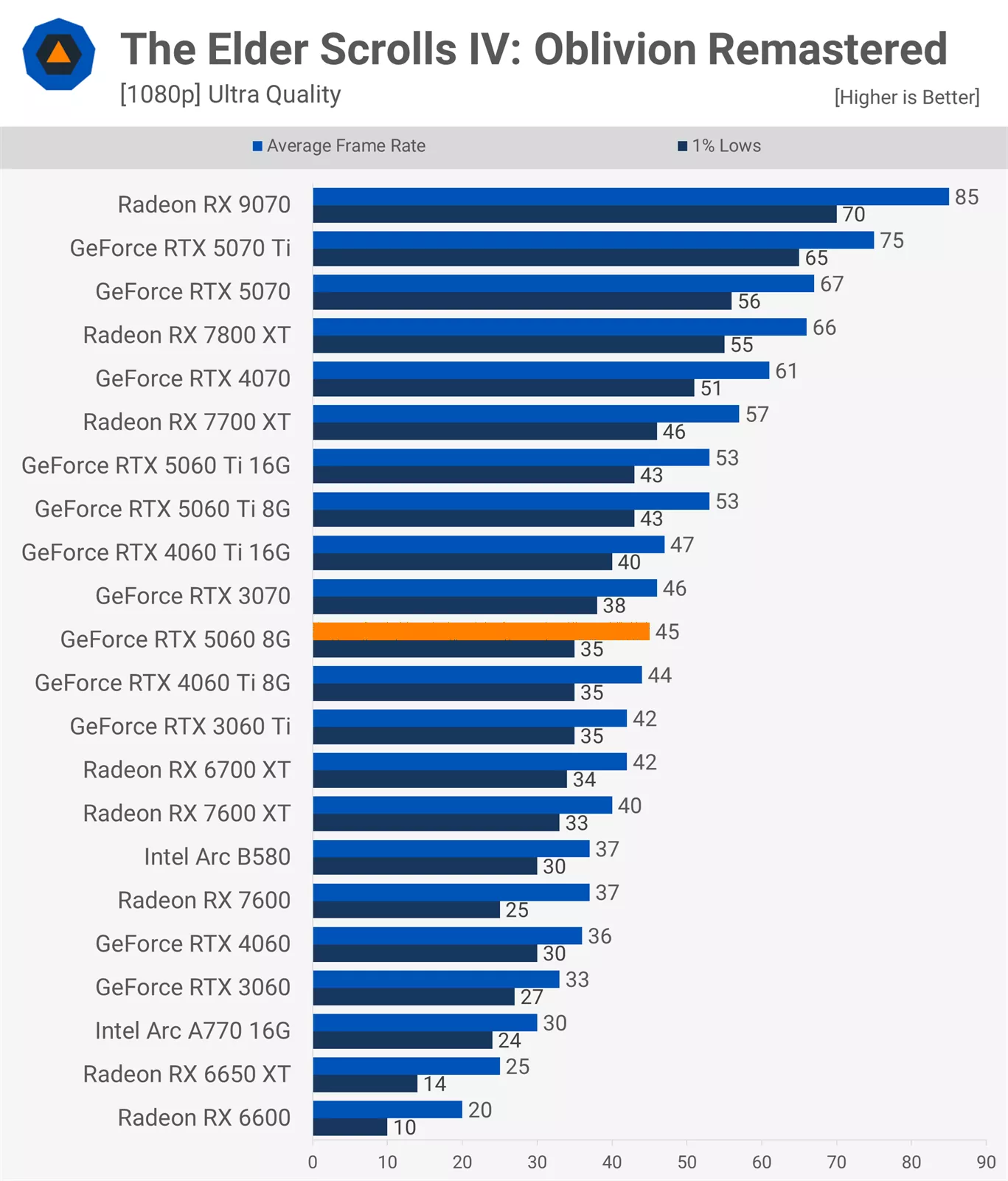

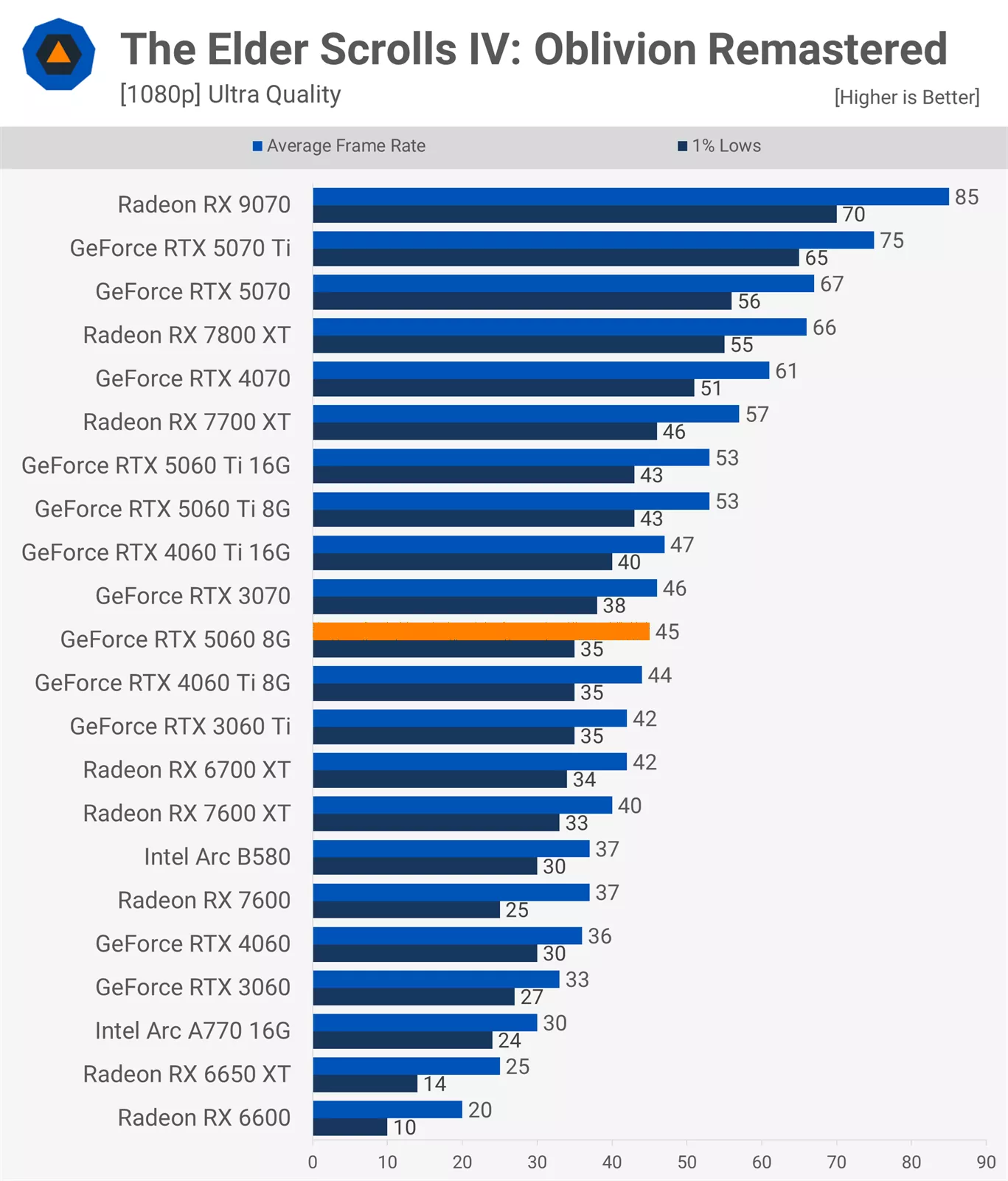

Some of the latest TechSpot graphics card reviews now include UE5 games, such as:

- Oblivion: Remastered

- Clair Obscur: Expedition 33

- Stalker 2: Heart of Chornobyl

Review link:

https://www.techspot.com/review/2992-nvidia-geforce-rtx-5060/#The_Elder_Scrolls_IV

Avowed, which features in recent TechPowerUp reviews, also uses UE5.

Each of these games ideally needs a GPU like an RX 9070 or RX 7900 XT to hold a consistent 60 FPS (1% lows above 60 FPS).
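For anyone unsure what the 1% low figure means, here is a minimal sketch of one common way to work it out from captured frame times. It assumes the 1% low is the average of the slowest 1% of frames; some tools use the 99th percentile frame time instead, so review sites may not match this exactly. The function name and the sample data are made up for illustration.

```python
def fps_summary(frame_times_ms):
    """Return (average FPS, 1% low FPS) for a list of per-frame times in milliseconds."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    # Average FPS = frames rendered / total seconds elapsed.
    avg_fps = 1000.0 * len(frame_times_ms) / sum(frame_times_ms)
    # Assumption: 1% low = average of the slowest 1% of frames (at least one frame).
    slowest = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest) // 100)
    worst_avg_ms = sum(slowest[:count]) / count
    low_1pct_fps = 1000.0 / worst_avg_ms
    return avg_fps, low_1pct_fps


# Hypothetical capture: 990 frames at 12.5 ms (80 FPS) plus 10 spikes at 25 ms (40 FPS).
sample = [12.5] * 990 + [25.0] * 10
avg, low = fps_summary(sample)
print(f"average: {avg:.1f} FPS, 1% low: {low:.1f} FPS")
```

In this made-up capture the average is about 79 FPS, but the 1% low is only 40 FPS, so it would not count as a consistent 60 FPS by the standard used here.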

That is before taking into account the loading / traversal stutter present in some titles (a different topic).

So, I think that’s worth considering when building or upgrading any serious gaming PC.

So you’ll be looking at a minimum of ~£560 for the GPU. If you want to future-proof your system a bit, this is probably what you will need for games like The Witcher 4 when it comes out.

You could potentially spend less if you use technologies like frame generation to boost your frame rate in these titles.

Full list of UE5 games:

https://en.wikipedia.org/wiki/Category:Unreal_Engine_5_games
