Well, it looks like the media giants have finally pulled the plug on one of the world's biggest cretins. YouTube, Facebook, iTunes and others have all blocked him from their platforms:

https://www.bbc.co.uk/news/technology-45083684

I absolutely cannot stand the man, or that awful Infowars company, but I'm not sure blocking his content from all the platforms sits well with me.

When all the media companies band together and decide on a mass 'nope' towards someone, the power they exercise is enormous. It probably happens with practically zero input from any actual authority or judicial system. Instead, it seems to me to reflect a culture of intolerance: if you can't deal with it, ban it.

When the big media companies act this way, I feel as though my ability to make up my own mind is being taken away and handed to some other agency acting according to its own rules or agendas, as though they want to spoon-feed everyone their own definition of all the nice things.

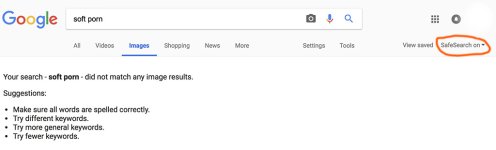

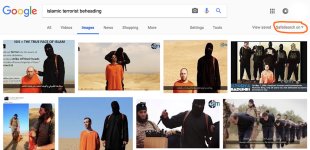

I think there are some cases where big media companies should remove things, for example if the content actually breaks the law of the land (child abuse, terrorism, etc.). In many cases, though, it doesn't; it's just a bit nasty or naughty.

Who the hell wants to live in a world where anything controversial or unsavoury (but legal) is immediately blocked?