Wrong. You'd have FSR FG just as good as DLSS FG then, which is not the case. Just like with every AMD vs NV feature, AMD's is the "we have X at home" one.

Nvidia's features are built to a certain standard, and that's why most people continue to buy NV and completely ignore AMD. The numbers don't lie. I wish things were different, but maybe Intel can shake things up a bit?

Pretty much. Again, it's great what AMD do, but literally they have no other choice lol...

- They show up months/years later with said features

- The features then take months/years to get better, and even then I don't think they ever end up being better?

- Market share of < 10% still?

I still see people use G-Sync as an example of FreeSync winning, saying there's no need for the G-Sync module and that it was just a way to lock customers in. Whilst that's true to an extent (shock horror, a company wanting to make a profit by getting people into an ecosystem and upgrading!!!!), as evidenced by actual experts in the field, the module still does have its place now, as stated (unless, once again, people have something to prove them wrong?)

A detailed look at variable refresh rates (VRR) including NVIDIA G-Sync, AMD FreeSync and all the various versions and certifications that exist (tftcentral.co.uk)

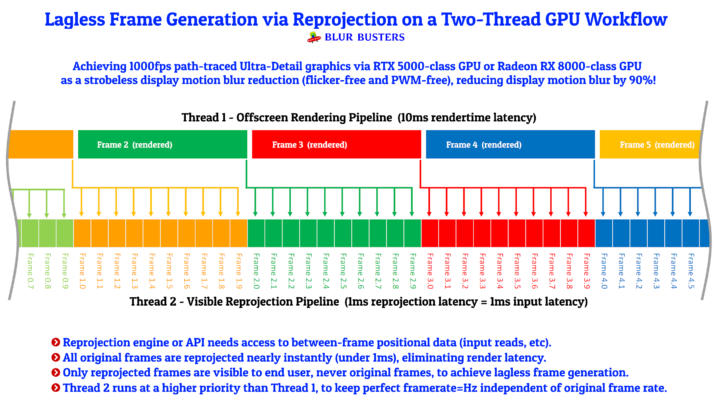

And a very recent post by Blur Busters on the matter:

Native G-SYNC is currently the gold standard in VRR at the moment, and it handles VRR-range-crossing events much better (e.g. framerate range can briefly exit VRR range with a lot less stutter).

Using G-SYNC Compatible can work well if you keep your framerate range well within VRR range.

The gold standard is to always purchase more VRR range (e.g. 360-500Hz) to make sure your uncapped framerate range (e.g. CS:GO 300fps) always stays permanently inside the VRR range. Then you don't have to worry about how good G-SYNC versus G-SYNC Compatible handles stutter-free VRR-range enter/exit situations.

Also NVIDIA performance on FreeSync monitors is not as good as AMD performance on FreeSync monitors, in terms of stutter-free VRR behaviors.

Eventually people will need to benchmark these VRR-range-crossing events.

If you have a limited VRR range and your game has ever touched maximum VRR range in frame rate before, then the best fix is to make sure you use VSYNC ON and a framerate cap approximately 3fps below (or even a bit more -- sometimes 10fps below for some displays). Use VSYNC OFF in-game, but VSYNC ON in NVIDIA Control Panel.
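The VRR advice quoted above comes down to simple arithmetic: keep the game's frame rate inside the display's VRR window, and cap it a few fps under the maximum refresh. A minimal Python sketch of that rule of thumb follows. The function names are hypothetical, the 3 fps default margin is the figure from the post, and the 48 Hz lower bound in the example is only an assumed value, since real VRR minimums vary by monitor.

```python
def recommended_cap(max_hz: float, margin_fps: float = 3.0) -> float:
    """Return a frame-rate cap a few fps below the display's maximum VRR refresh.

    The 3 fps default follows the rule of thumb in the quoted post; some
    displays may need a larger margin (e.g. 10 fps).
    """
    if margin_fps < 0 or margin_fps >= max_hz:
        raise ValueError("margin_fps must be >= 0 and smaller than max_hz")
    return max_hz - margin_fps


def frame_time_ms(fps: float) -> float:
    """Convert a frame rate to the time each frame takes, in milliseconds."""
    return 1000.0 / fps


def stays_in_vrr_range(fps_low: float, fps_high: float,
                       vrr_min_hz: float, vrr_max_hz: float) -> bool:
    """True if the game's whole frame-rate range sits inside the VRR window."""
    return vrr_min_hz <= fps_low and fps_high <= vrr_max_hz


if __name__ == "__main__":
    # Hypothetical 144 Hz monitor; the 48 Hz VRR minimum is an assumption.
    cap = recommended_cap(144)
    print(f"cap: {cap} fps, {frame_time_ms(cap):.2f} ms per frame")
    # A game running at 200-300 fps fits a 48-360 Hz window but not a 48-240 Hz one.
    print(stays_in_vrr_range(200, 300, 48, 360))
    print(stays_in_vrr_range(200, 300, 48, 240))
```

For a 144 Hz panel this gives a 141 fps cap (about 7.09 ms per frame instead of 6.94 ms at 144 fps), leaving a little headroom so frame-time jitter doesn't push the game past the top of the VRR range. As the post says, the actual margin still has to be tuned per display.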

FreeSync/Adaptive-Sync didn't come out until something like 1-2 years after G-Sync, did it? And when it launched it had a whole heap of issues: black screening, no LFC and terrible ranges.

Yes, to get the best you have to pay the premium to get said experience months/years before the competition, but what's new here? Same goes for anything in life. If AMD were first to market with quality solutions, you can be damn sure they would be locking it down or going about it differently to get people to upgrade. AMD's frame generation reveal at their event was literally nothing but a knee-jerk reaction to keep up appearances that they aren't falling behind Nvidia, hence why we still have only 3 titles with official FSR 3 integration and a questionable injection method (one that will get you banned if used in online games).

So the article summed it up perfectly:

Well, we all know why a lot of PC gamers picked their pitchforks. It wasn’t due to the extra input latency and it wasn’t due to the fake frames. It was because DLSS 3 was exclusive to the RTX40 GPU series, and most of them couldn’t enjoy it. And instead of admitting it, they were desperately trying to convince themselves that the Frame Generation tech was useless. But now that FSR 3.0 is available? Now that they can use it? Well, now everyone seems to be happy about it. Now, suddenly, Frame Generation is great. Ironic, isn’t it?

So yeah, the release of the AMD FSR 3.0 was quite interesting. And most importantly, the mods that allowed you to enable FSR 3.0 in all the games that already used DLSS 3.0. Those mods exposed the people who hadn't tested DLSS 3 and still hated it. Hell, some even found AFMF to be great (which is miles worse than both FSR 3.0 and DLSS 3). But hey, everything goes out the window the moment you get a free performance boost on YOUR GPU, right?

Oh, the irony…

I was talking about Nvidia enabling FG on their RTX cards, not AMD's free-for-all take on it.

DLSS 3 is built around the Optical Flow Accelerator. Bryan and a couple of other engineers have stated they could enable it, but it wouldn't work well, and then people would trash how bad DLSS 3 is, so it's better to keep it locked in order to retain the "premium" look. Of course, they could take a different approach like AMD, but then they'd have 2 solutions to maintain and, again, from their POV, what benefit do they get from this? Who knows though, maybe they will do a "DLSS 3 compatible" version.

www.dsogaming.com