Now that we know what RDNA 2 in Navi 21 is capable of, let's speculate about what improvements could come next.

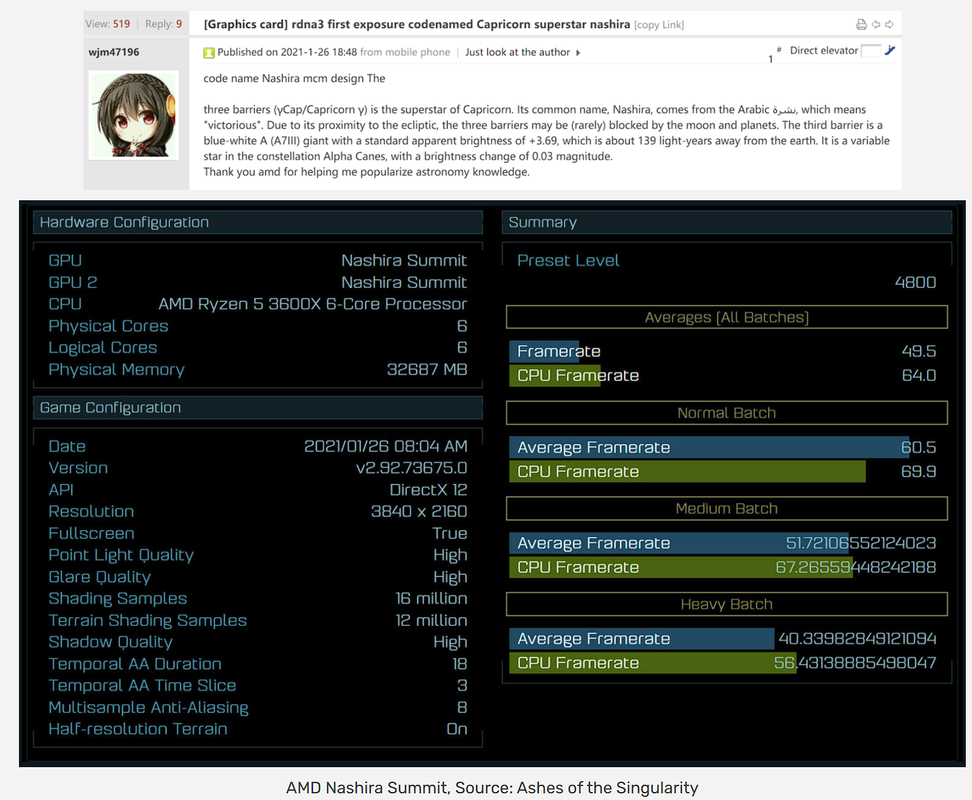

Ultra HD 2160p gaming at higher frame rates looks like one of them.

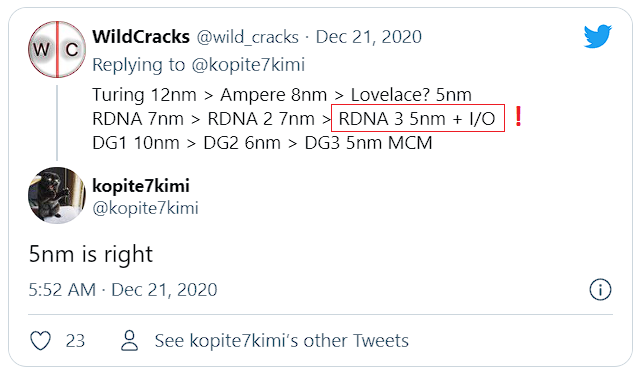

Shrink to TSMC N5 process?

Adding more Infinity Cache - up to 256 MB or more?

A wider memory bus and/or faster memory - next-gen GDDR?
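
For a rough sense of what a wider bus or faster memory buys, theoretical peak bandwidth is bus width (in bits) times per-pin data rate (in Gbps), divided by 8. The sketch below uses Navi 21's shipped configuration (256-bit GDDR6 at 16 Gbps); the 384-bit figure is purely a hypothetical comparison, and the function name is illustrative. It ignores the effective bandwidth boost from Infinity Cache.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s (ignores cache effects)."""
    # Each pin moves data_rate_gbps gigabits per second; divide by 8 for bytes.
    return bus_width_bits * data_rate_gbps / 8


# Navi 21 as shipped: 256-bit GDDR6 at 16 Gbps per pin.
print(peak_bandwidth_gbs(256, 16.0))  # 512.0
# Hypothetical wider bus at the same data rate.
print(peak_bandwidth_gbs(384, 16.0))  # 768.0
```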

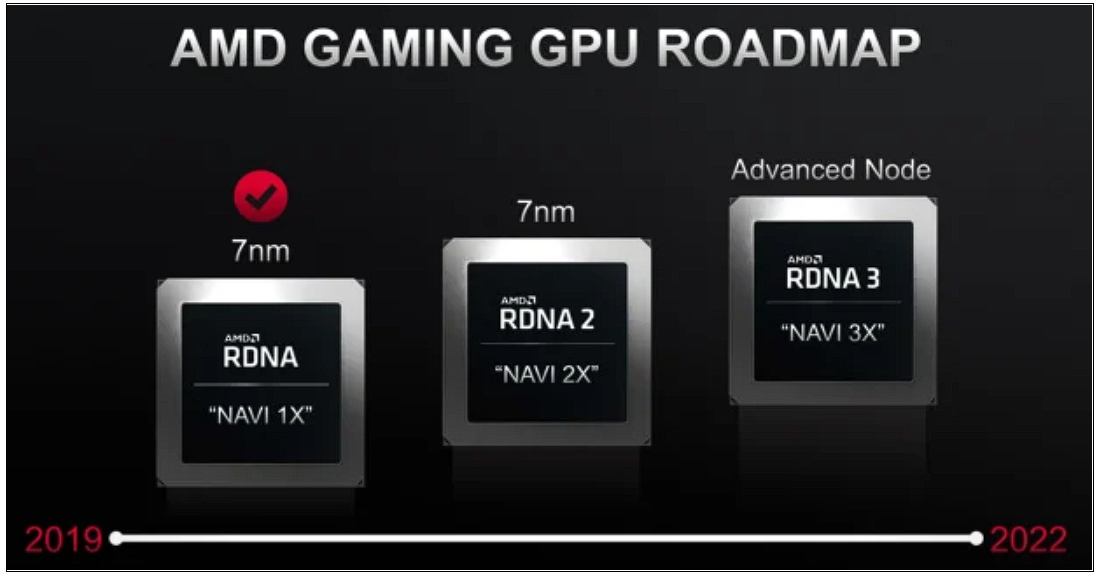

AMD Zen 4, RDNA 3 to repeat recently seen generational leaps

https://hexus.net/tech/news/cpu/146824-amd-zen-4-rdna-3-repeat-recently-seen-generational-leaps/


As per our headline the AMD EVP was then quizzed about product refreshes going forward. "Everything is scrutinized to squeeze more performance out," replied Bergman and indicated that a similar long list of optimisation strategies that made the 19 per cent Zen 3 IPC gain possible is available – and Zen 4 will be moving to 5nm. On the topic of RDNA 3 GPUs, Bergman later confirmed that AMD was again targeting a 50 per cent plus GPU performance per watt improvement from generation to generation.
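
To put the "50 per cent plus performance per watt" target in concrete terms: at the same board power it means roughly 1.5x the performance, or the same performance at about two thirds of the power. The sketch below assumes a hypothetical 300 W card purely for illustration; the function names are made up and real products trade off power and performance differently.

```python
def perf_at_same_power(perf: float, perf_per_watt_gain: float) -> float:
    # Same power budget, so performance scales directly with perf/W.
    return perf * (1 + perf_per_watt_gain)


def power_at_same_perf(power_w: float, perf_per_watt_gain: float) -> float:
    # Same performance target, so power drops by the perf/W factor.
    return power_w / (1 + perf_per_watt_gain)


print(perf_at_same_power(100.0, 0.5))  # 150.0 (relative performance)
print(power_at_same_perf(300.0, 0.5))  # 200.0 (watts, hypothetical card)
```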

Yes, I agree the wait for competitive graphics from AMD was difficult for everyone; now that the pressure on AMD is much lower, we can relax and enjoy this discussion.
