There's no point in the Apple GPU having this power and that power - if they don't support the APIs which games use, they are as good as useless for them.

Maybe... wait for it... they're not for gaming ¯\_(ツ)_/¯



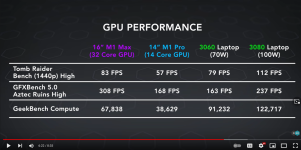

These cherry-picked benchmarks are pretty wild. They are not near desktop 5000 series Ryzen in performance and are still behind the top-end mobile GPUs as well lol.

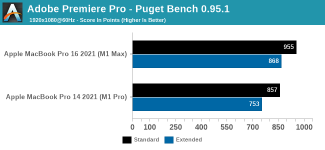

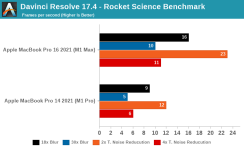

https://www.anandtech.com/show/17024/apple-m1-max-performance-review

This AnandTech article shows the rough level it's at. In some cases it will outperform bigger, more powerful chips, but in the general case it's not as powerful as people were claiming.

It's nowhere near 3080 mobile or desktop Ryzen performance, really.

AT answers the gaming question - it's not quite there yet, even in the best case scenario. Realise also that AT is not testing a lot of Zen3 based laptops either - you can get 8C Zen3 based laptops with an RTX3060 for as low as £900 if you look on HUKD.

https://images.anandtech.com/graphs/graph17024/126685.png

https://images.anandtech.com/graphs/graph17024/126683.png

https://images.anandtech.com/graphs/graph17024/126681.png

https://images.anandtech.com/graphs/graph17024/126682.png

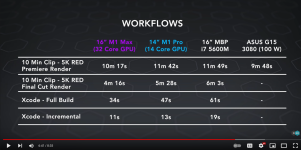

Also, some tests in a YT video today:

https://www.youtube.com/watch?v=IhqCC70ZfDM

https://imgur.com/a/YdErGtK

https://i.imgur.com/kqKBEjl.png

It's solid, but as usual PCMR has gotten way too overhyped on these things, and people have to realise the media gets in on the hype too. The Asus G15 has a Ryzen 9 5900HS, which is made on an old 7nm process, and AMD is already moving over to newer designs over the next 12 months.

So as usual the Macs work well if you are already in that ecosystem and, TBH, you would already be using a Mac irrespective of what CPU/GPU was in there.

It's no different than all those compute focussed GPUs AMD/Nvidia have made which never did that well in gaming! Although one could argue that for an ARM based SoC these are the quickest so far. It does make you wonder what AMD could do if someone commissioned them to do a laptop focussed Zen3 SoC.

I do hope it means AMD/Intel actually get on and try to push their laptop CPUs a bit more. The Zen3 SoCs are only 180mm² - I am hoping once DDR5 comes along, AMD can try and push more cores.

The huge performance gains due to the memory system make me wish we had that on PC.

Decided to post here rather than make a new thread.

Considering what Apple has done with their M1 chips, is there a chance that future CPUs will have significantly larger "RAM"/cache on or next to the processor? This would be alongside the standard DDR4/5 arrangement we have now.

I'm talking 1GB on Ryzen 3 and more the higher up you go. Say a Ryzen 7 sporting 4GB, and Threadrippers with 16GB on the low end and 64GB on the high end. How much would computational speed benefit from such an approach? Would we need to change how software is programmed to take advantage of this?

What about accelerators, will we see more HW accelerators for professional work?

Just had a proper look and apparently the RAM on the M1 is a separate chip on the same package so I've worded my question wrong.

Will we see RAM added onto the same package as the rest of the CPU?

Let's take AMD: stick RAM off to the side and attach it with IF or something else. For desktop purposes the onboard RAM would be in addition to your standard DDR4/5, not a replacement. As an example, you would have a Ryzen 7 with 8GB of onboard RAM and 32GB of DDR4.

Is this something that could happen? Would Windows and/or software need to be coded differently to take advantage of this?

It could, it's technically possible, but you would need a lot of control circuitry to decide what data gets stored in that local RAM as opposed to the sticks. And that cost/complexity likely outweighs the benefits.
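To put a rough shape on the "how much would it help" question, here is a back-of-envelope sketch, not a model of any real chip. It assumes the on-package RAM is cheaper per access than the DIMMs (itself an assumption) and treats the average cost as a simple weighted mix. The function name and the 60/100 ns figures are made up purely for illustration.

```python
def effective_latency_ns(fast_fraction, fast_ns, slow_ns):
    """Average access latency when a fraction of accesses hit the fast tier.

    fast_fraction: share of accesses served by the on-package RAM (0..1)
    fast_ns / slow_ns: hypothetical per-access latencies for each tier
    """
    if not 0.0 <= fast_fraction <= 1.0:
        raise ValueError("fast_fraction must be between 0 and 1")
    # Simple weighted average: each tier contributes in proportion
    # to how often it is actually used.
    return fast_fraction * fast_ns + (1.0 - fast_fraction) * slow_ns


if __name__ == "__main__":
    # Hypothetical numbers, not measurements of any real system.
    for fraction in (0.0, 0.5, 0.9):
        print(fraction, effective_latency_ns(fraction, fast_ns=60.0, slow_ns=100.0))
```

The takeaway is only that the benefit scales with how much of the hot data actually lands in the fast tier, which is exactly the placement problem described above.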

As fantastic as the M1 stuff is, I can't help thinking that the Apple route will plateau due to die size unless they can manage to move towards a chiplet-type design.

Agreed, my point was more towards the cores and GPU die aspect of it. How far can that scale?

But Jigger has told us that the M1X is going to dominate the gaming laptop market.

I do laugh at this gaming criticism. Is it designed as a gaming platform? Nope. Is it marketed as a gaming platform? Nope. Is there an eco-system around it for gaming? Nope. Is it good at gaming? Nope.

Is it designed as a production platform? Yup. Is it marketed as a production platform? Yup. Is there an eco-system around it for production? Yup. Is it good at production? Yup.

So why there is a fixation on gaming is completely beyond me.

The main problem with Apple seems not to actually be Apple themselves, but the people who are massive Apple fanboys! A lot of them talk so much crap and are usually very condescending, which in turn brings out people who try to smack Apple down as their way to argue, and vice versa.

If people were just normal and didn't fanboy over specific companies (not just Apple), the tech world would be a better place. It would be much better in general if people were just fans of specific products and technologies, regardless of where they come from or who made them, but sadly I don't think that's ever going to happen.

It’s totally going to kill sales of gaming laptops with the performance and battery life on offer. The installed base of Mac users and the ability to run Windows will be a tempting market for games developers, and that will drive more sales of the M1.

It’s interesting you can’t see the potential with this chip. It’s simply miles ahead of anything else on the market.

You can’t see developers wanting to reach a new market with a large user base and top level hardware?

I'm really looking forward to the M2. I can see Apple adding in even more dedicated processing units, who knows what for, but it's cool to see them have so much control over what they deem important.

I've just gotten into Final Cut a bit more seriously at the moment, and would love some hardware accelerated ProRes encode/decode but for now my M1 MBP is still pretty snappy!

All of which are appreciated, although they still won't be letting us play Cyberpunk at 4K ultra just yet... That being said... what if in the background they are working on Ray Tracing Cores? Or DLSS type acceleration? Maybe to also put into Apple TVs and iPads? I guess if these cores can also be helpful for things other than gaming, this is more likely...

In a more practical sense, I could see them adding some sort of AR boosting capabilities (they might already be covered by the general ML cores). I can see Apple's next push being into AR, to expand what we expect computers can do. Something about processing spatial info in real time but extremely power efficiently?

There is zero chance that the M1 GPU software and chip hardware teams are not at least talking to each other about ray tracing. It can also be used for special effects generation for film/movies. I can see there being a big announcement in a few years about how fast it is in Motion or another effects program, tail ended with improvements in games as well. Who knows, maybe the types of calculations for raytracing are also super useful elsewhere, like calculating how wifi or sound waves bounce through a room, and you pair it with LIDAR scanning to give new options.

Wish I could jump in that time machine to see where Apple is in ten years... I guess until then I will entertain myself watching people get mad about benchmark charts.

News time: If you take the M1 Max chip, turn it upside down and photograph it (with the right tools), you can see that the die has an interconnect on one side, and this detail was never shown in any of the marketing materials.

So Apple has already been playing with interconnects, and it's possible that the M2/M3 are MCM CPUs capable of performance that is 2x to 4x greater than the M1 Max.

Just catching up on this thread. Amazing chips from Apple. They will be the go-to choice for many looking at video editing and other specific use cases.

A side note:

I love watching people make a complete **** of themselves. Cheers jigger.

This is actually what stops me from buying into macOS. For work, macOS would be great (development), but I’d still need my Windows PC for games. Now I just dual boot Linux and Windows, which meets both requirements.