What should you expect out of a non-synthetic benchmark?

But what is it exactly that you are going to see in a benchmark that is measuring actual gameplay performance? If you run the Ashes of the Singularity Benchmark, what you are seeing will not be a synthetic benchmark. Synthetic benchmarks can be useful, but they do not give an accurate picture to an end user as to what to expect in real-world scenarios.

Our benchmark run is going to dump a huge amount of data which we caution may take time and analysis to interpret correctly. For example, though we felt obligated to put an overall FPS average, we don’t feel that it’s a very useful number. As a practical matter, PC gamers tend to be more interested in the minimum performance they can expect.
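
To illustrate why an overall average can hide what players actually feel, here is a minimal Python sketch. It is not Oxide's tooling: the function name `fps_summary`, the "1% low" metric, and the frame times are all assumptions made up for illustration.

```python
from statistics import mean


def fps_summary(frame_times_ms):
    """Summarize a run from per-frame render times in milliseconds.

    Hypothetical helper for illustration; not part of the Ashes benchmark.
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")

    # Average FPS: frames rendered divided by total elapsed seconds.
    total_seconds = sum(frame_times_ms) / 1000.0
    average_fps = len(frame_times_ms) / total_seconds

    # Minimum FPS: the rate implied by the single slowest frame.
    minimum_fps = 1000.0 / max(frame_times_ms)

    # "1% low" (an assumed metric): FPS implied by the mean frame time
    # of the slowest 1% of frames, using at least one frame.
    slowest = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest) // 100)
    one_percent_low_fps = 1000.0 / mean(slowest[:count])

    return {
        "average_fps": average_fps,
        "minimum_fps": minimum_fps,
        "one_percent_low_fps": one_percent_low_fps,
    }


# Made-up data: 95 smooth frames at 10 ms plus 5 stutters at 50 ms.
frame_times = [10.0] * 95 + [50.0] * 5
summary = fps_summary(frame_times)
print(summary)
```

With this made-up data the average comes out at roughly 83 FPS while the slowest frames run at 20 FPS, which is the kind of gap a single average number hides.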

People want a single number to point to, but the reality is that things just aren’t that simple. Real-world tests and data are like that. Our benchmark mode of Ashes isn’t actually a specific benchmark application; rather, it’s simply a 3-minute game script executing with a few adjustments to increase consistency from run to run.

What makes it not a specific benchmark application? By that, we mean that every part of the game is running and executing. This means AI scripts, audio processing, physics, firing solutions, etc. It’s what we use to measure the impact of gameplay changes so that we can better optimize our code.

Because games have different draw call needs, we’ve divided the benchmark into different subsections, trying to give equal weight to each one. Under the normal scenario, the driver overhead differences between D3D11 and D3D12 will not be huge on a fast CPU. However, under medium and heavy the differences will start to show up until we can see massive performance differences. Keep in mind that these are entire app performance numbers, not just graphics.

http://oxidegames.com/2015/08/16/the-birth-of-a-new-api/

Benchmarks.

PCPer

http://www.pcper.com/reviews/Graphi...ted-Ashes-Singularity-Benchmark/Results-Avera

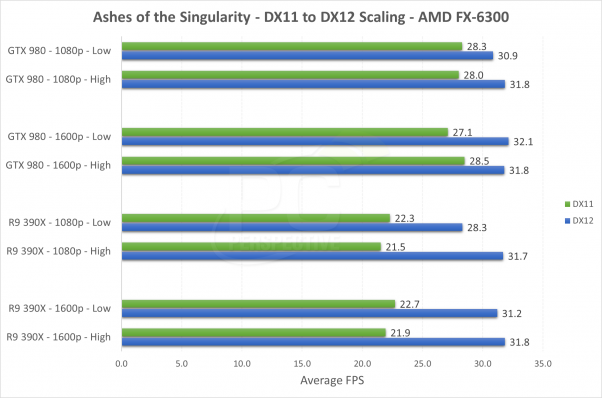

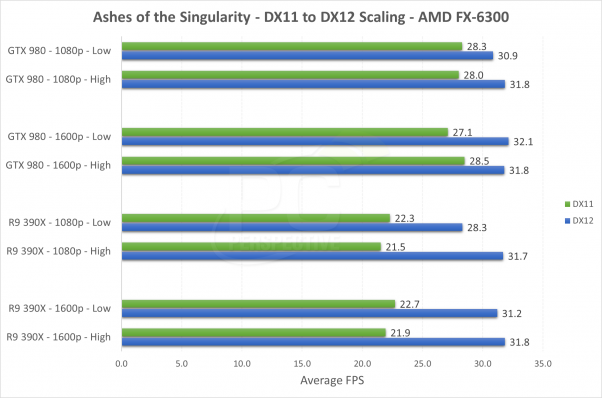

There is a lot of information in each graph so be sure you are paying attention closely to what is being showcased and what bars represent what data. This first graph shows all the GPUs, resolutions and APIs running on the Core i7-5960X, our highest end processor benchmarked. The first thing that stands out to me is how little difference there is between the DX11 and DX12 scores on the NVIDIA GTX 980 configuration. In fact, only the 1080p / Low preset shows a performance advantage at all for DX12 over DX11 in this case; the other three results are showing better DX11 performance!

The AMD results are very different – the DX12 scores are as much as 80% faster than the DX11 scores, giving the R9 390X a significant FPS improvement. So does that mean AMD’s results are automatically the better of the two? Not really. Note the DX11 scores for the GTX 980 and the R9 390X – at 1080p / Low the GTX 980 averages 71.4 FPS while the R9 390X averages only 43.1 FPS. That is a massive gap! After utilizing DX12 that comparison changes to 78.3 FPS vs 78.0 FPS – a tie. The AMD DX12 implementation with Ashes of the Singularity in this case has made up the difference of the DX11 results and brought the R9 390X to a performance tie with the GTX 980.

Computerbase

http://www.computerbase.de/2015-08/...diagramm-ashes-of-the-singularity-3840-x-2160

Eurogamer

http://www.eurogamer.net/articles/digitalfoundry-2015-ashes-of-the-singularity-dx12-benchmark-tested

DigitalFoundry

Wccftech

http://wccftech.com/nvidia-we-dont-believe-aots-benchmark-a-good-indicator-of-dx12-performance/

NVIDIA: We Don’t Believe AotS Benchmark To Be A Good Indicator Of DX12 Performance

'Nvidia mistakenly stated that there is a bug in the Ashes code regarding MSAA. By Sunday, we had verified that the issue is in their DirectX 12 driver. Unfortunately, this was not before they had told the media that Ashes has a buggy MSAA mode. More on that issue here. On top of that, the effect on their numbers is fairly inconsequential. As the HW vendor's DirectX 12 drivers mature, you will see DirectX 12 performance pull out ahead even further.'

We've offered to do the optimization for their DirectX 12 driver on the app side that is in line with what they had in their DirectX 11 driver. Though, it would be helpful if Nvidia quit shooting the messenger.

http://forums.oxidegames.com/470406

I think it is also an AMD sponsored game.