Calling Team 10

- Thread starter Dantoshou

Off topic, but your sig, that's Nvidia and I can't for the life of me remember what of. It's from my old 5200XT Nvidia days, I think... mind's going as I get older lol

Thank you sah

It's Dawn (but the naughty version) https://www.nvidia.com/coolstuff/demos#!/geforce-fx/dawn

Having trouble keeping my big rig folding.

It's a dual EPYC rig with 7601s, so 64 cores / 128 threads. I'm having the most luck when SMT is disabled, so only 64 cores (about 1 million PPD).

The problem is that about once a day it just sits with no work until the slot is deleted and replaced or the rig is rebooted.

I'm running Ubuntu 18.04.

I have other rigs that mostly just run without issue. Any suggestions?

I have tried a larger number of smaller slots, but that seems even worse.

Can't really help you there mate, not running any big iron anymore. Closest I could get would be maybe a 2990WX on Ubuntu 20.04; I can give it a whirl if I get a chance.

Gonna pick this back up again lads and ladettes...

Dropped out of the top 10, makes me sad.

About bloomin time!

Soldato

- Joined: 21 Jul 2005
- Posts: 21,209
- Location: Officially least sunny location -Ronskistats

gone from 3 million points a day to 11,000

RTX 3080 maybe 8-9 mil PPD?

You pre-ordered then?

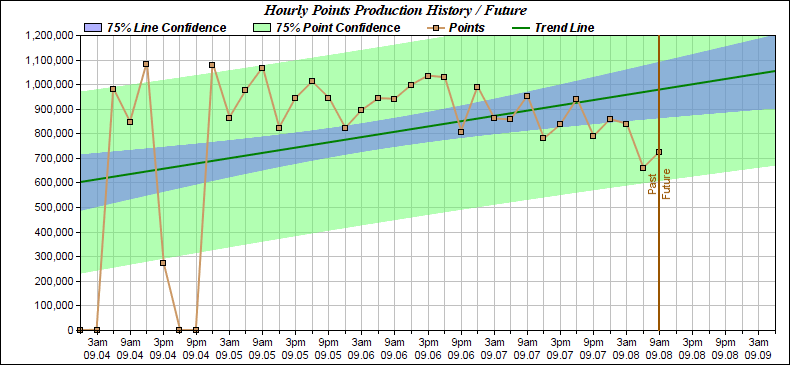

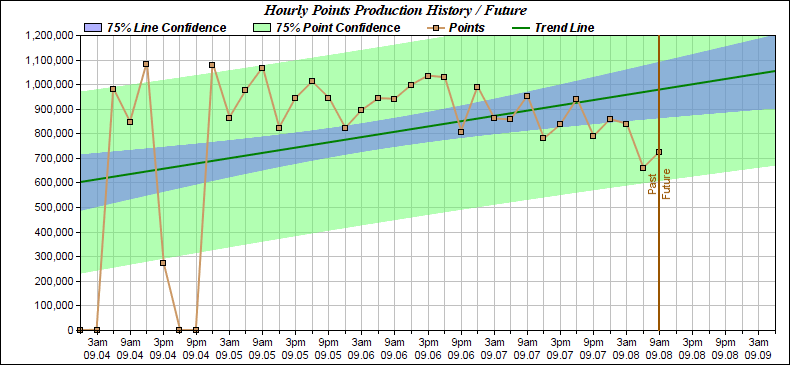

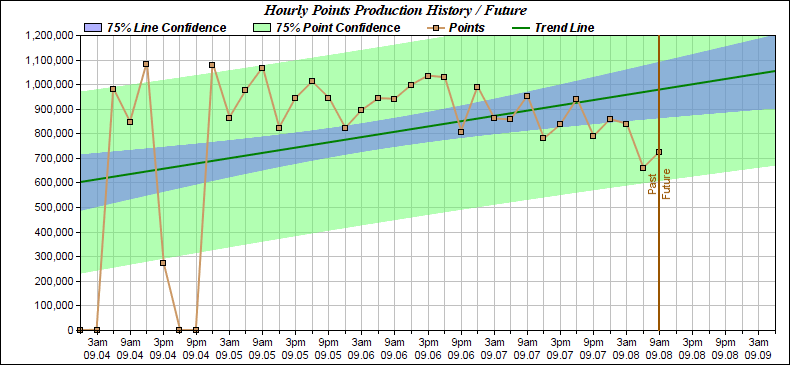

Occasionally I get asked to prove stability / reliability of machines with GPUs in them. F@H makes for an easy way to both make a machine work and actually provide some value. I earned 6,425,705 points in the last 24 hours from what I left running over this long weekend (I'm in the US), under my more usual handle of Twirrim. I'll circle back here with details soon because I think some folks might find it interesting.

I'll need to check some metrics, but I _think_ there has been a bit of work unit starvation going on

Associate

- Joined: 9 Apr 2004
- Posts: 856
- Location: Nottingham - UK

I had wondered where 'Twirrim' had come from as you approached in my rear view mirror extremely fast LOL! Shame you can't run like that all the time, as that was a pretty significant boost.

Soldato

- Joined: 18 Oct 2002
- Posts: 8,307
- Location: Aranyaprathet, Thailand

PPD should have fallen for many, as some of the crazy high-point beta and test units have completed and we're back to normal returns.

Soldato

- Joined: 18 Oct 2002
- Posts: 8,307
- Location: Aranyaprathet, Thailand

The new CUDA beta GPU core22 indicates maybe 6M tops.

Okay, for those who were curious: I had large boosts over two weekends that rapidly shot me up various charts. I was testing our new cloud hardware "shape" (configuration) that was just announced, which features 8 Nvidia A100 GPUs, linked in their usual hybrid-cube shape. Our previous generation had 8 V100 GPUs in a similar configuration. Very rough "gpu-burn" testing put the Tensor Core performance up some ~50% vs the V100, and normal CUDA performance seemed to be up about a third. Note: I really don't know what I'm doing with this stuff. These aren't official benchmark figures, and nothing I tested longer term leveraged NVLink, to the best of my knowledge.

F@H proved a good way to run a sustained workload over a period of time and check that there were no immediately obvious gotchas. I didn't spend any time particularly configuring F@H, I just ran the CLI straight. There were a few times I had to restart the process to bring some GPUs back into the fold. So far as I can tell, F@H didn't know what the hardware was, nor how to take particular advantage of it. It picked up general CUDA/GPU workloads, I believe.
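For anyone wanting a rough per-card figure, here is a minimal sketch of the arithmetic. It assumes the 6,425,705 points quoted earlier in the thread came from the 8-GPU machine described above, which the thread suggests but does not confirm. The function name and the `busy_fraction` parameter are hypothetical, added only to show how idle time (work unit starvation, GPUs dropping out until a restart) would change the estimate.

```python
# Figures quoted in the thread; attributing the points to the 8-GPU box is an assumption.
POINTS_LAST_24H = 6_425_705  # points credited over the last 24 hours
GPU_COUNT = 8                # GPUs in the tested configuration


def average_ppd_per_gpu(total_points: int, gpu_count: int,
                        busy_fraction: float = 1.0) -> float:
    """Average points per day per GPU while it was actually folding.

    busy_fraction is a hypothetical knob: the share of the 24 hours each GPU
    spent working (below 1.0 if work units ran dry or a GPU dropped out).
    """
    if gpu_count <= 0:
        raise ValueError("gpu_count must be positive")
    if not 0.0 < busy_fraction <= 1.0:
        raise ValueError("busy_fraction must be in (0, 1]")
    return total_points / (gpu_count * busy_fraction)


if __name__ == "__main__":
    ppd = average_ppd_per_gpu(POINTS_LAST_24H, GPU_COUNT)
    print(f"{ppd:,.0f} PPD per GPU")  # prints "803,213 PPD per GPU"
```

With every GPU busy for the full day that works out to roughly 0.8 million PPD per card. If the cards were idle part of the time, the rate while folding would be higher, so treat it as a lower bound rather than a benchmark.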

Soldato

- Joined: 21 Jul 2005
- Posts: 21,209
- Location: Officially least sunny location -Ronskistats

Yeah, makes sense. I mean, look how long it has taken them to make strides on the Nvidia cards, only recently releasing a massive boost in performance.