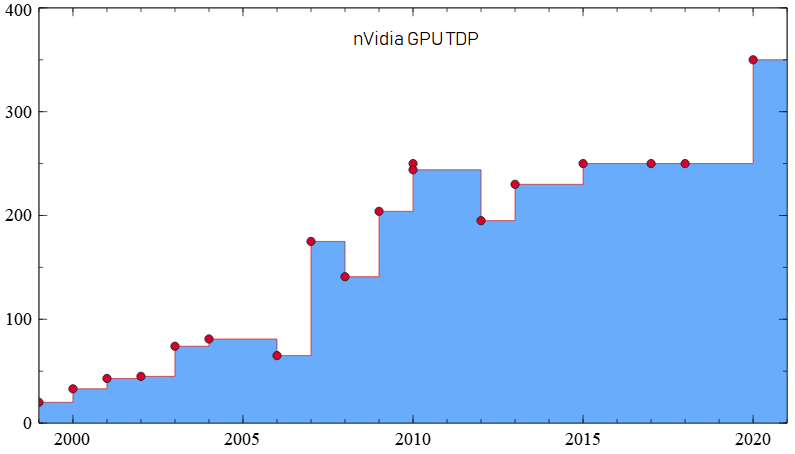

A long time ago, PCs consumed so little power that nothing special had to be done to cool them. As they became faster, power consumption increased too, and so small heatsinks were added, then bigger heatsinks, then fans, then heatpipes. To illustrate this trend, I plotted the TDP of nVidia's top GPUs from each generation from 1999 to today (ignoring dual GPUs, Titans, and OEM-only cards):

The numbers aren't perfect: some are the TDP of the chip itself and some are the TBP for the entire card, official specs are often fudged compared with actual power consumption, and the official TDP for older cards is hard to find. It's good enough to illustrate the trend though. Similar plots could be made for GPUs and CPUs from other companies.

You can see a steady increase until 2010 when TDP stabilized at around 250W. I thought that might have been the ultimate limit for GPUs, but this year's releases show that nVidia and AMD think more is acceptable, and the demand for those GPUs suggests they are correct.

There must be an upper limit somewhere though - some value so high that customers will refuse to buy. Such limits have been pushed against before, e.g. with 200W CPUs. Will we see those again, as the slowing of semiconductor fabrication improvements makes increasing power consumption the only way to improve performance, or will we decide that there's an upper limit beyond which we won't go? Does the popularity of 1kW+ PSUs mean that people are willing to own computers that actually use as much power as a kettle? How much power would you be willing to feed to a machine to make it faster?

EDIT: Added RTX 4090.

| GPU | Year | TDP (W) | Source |
|---|---|---|---|
| GeForce 256 DDR | 1999 | 20 | http://hw-museum.cz/vga/162/asus-geforce-256-ddr |
| GeForce2 Ultra | 2000 | 33 | http://hw-museum.cz/vga/164/asus-geforce2-gts-tv |
| GeForce3 Ti500 | 2001 | 43 | http://hw-museum.cz/vga/174/nvidia-geforce3-ti-500 |
| GeForce4 Ti4600 | 2002 | 45 | http://hw-museum.cz/vga/184/msi-geforce4-ti-4600 |
| GeForce FX 5950 Ultra | 2003 | 74 | https://www.techpowerup.com/gpu-specs/geforce-fx-5950-ultra.c79 |
| GeForce 6800 Ultra | 2004 | 81 | http://hw-museum.cz/vga/308/msi-geforce-6800-ultra-pci-e |
| GeForce 7950 GT | 2006 | 65 | https://www.techpowerup.com/gpu-specs/geforce-7950-gt.c183 |
| GeForce 8800 Ultra | 2007 | 175 | https://en.wikipedia.org/wiki/List_of_Nvidia_graphics_processing_units#GeForce_8_(8xxx)_series |
| GeForce 9800 GTX+ | 2008 | 141 | https://en.wikipedia.org/wiki/List_of_Nvidia_graphics_processing_units#GeForce_9_(9xxx)_series |
| GeForce GTX 285 | 2009 | 204 | https://en.wikipedia.org/wiki/List_of_Nvidia_graphics_processing_units#GeForce_200_series |
| GeForce GTX 480 | 2010 | 250 | https://en.wikipedia.org/wiki/List_of_Nvidia_graphics_processing_units#GeForce_400_series |
| GeForce GTX 580 | 2010 | 244 | https://en.wikipedia.org/wiki/List_of_Nvidia_graphics_processing_units#GeForce_500_series |
| GeForce GTX 680 | 2012 | 195 | https://en.wikipedia.org/wiki/List_of_Nvidia_graphics_processing_units#GeForce_600_series |
| GeForce GTX 780 Ti | 2013 | 230 | https://en.wikipedia.org/wiki/List_of_Nvidia_graphics_processing_units#GeForce_700_series |
| GeForce GTX 980 Ti | 2015 | 250 | https://en.wikipedia.org/wiki/List_of_Nvidia_graphics_processing_units#GeForce_900_series |
| GeForce GTX 1080 Ti | 2017 | 250 | https://en.wikipedia.org/wiki/List_of_Nvidia_graphics_processing_units#GeForce_10_series |
| GeForce RTX 2080 Ti | 2018 | 250 | https://en.wikipedia.org/wiki/List_of_Nvidia_graphics_processing_units#GeForce_20_series |
| GeForce RTX 3090 | 2020 | 350 | https://en.wikipedia.org/wiki/List_of_Nvidia_graphics_processing_units#GeForce_30_series |
| GeForce RTX 4090 | 2022 | 450 | https://en.wikipedia.org/wiki/List_of_Nvidia_graphics_processing_units#GeForce_40_series |
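If anyone wants to check the "stabilized at around 250W" claim against the data rather than eyeballing the plot, here is a rough Python sketch. It is not how the plot was made. The names `DATA`, `load_rows` and `mean_tdp` are made up for this example, the `TDP_W` column header is renamed from the data above, the source URLs are left out, and treating 2010 to 2018 as the plateau is my own reading of the numbers.

```python
import csv
import io
from statistics import mean

# GPU, year and TDP in watts, copied from the data in this post.
# Source URLs are omitted; the TDP_W header name is an assumption of this sketch.
DATA = """\
GPU,Year,TDP_W
GeForce 256 DDR,1999,20
GeForce2 Ultra,2000,33
GeForce3 Ti500,2001,43
GeForce4 Ti4600,2002,45
GeForce FX 5950 Ultra,2003,74
GeForce 6800 Ultra,2004,81
GeForce 7950 GT,2006,65
GeForce 8800 Ultra,2007,175
GeForce 9800 GTX+,2008,141
GeForce GTX 285,2009,204
GeForce GTX 480,2010,250
GeForce GTX 580,2010,244
GeForce GTX 680,2012,195
GeForce GTX 780 Ti,2013,230
GeForce GTX 980 Ti,2015,250
GeForce GTX 1080 Ti,2017,250
GeForce RTX 2080 Ti,2018,250
GeForce RTX 3090,2020,350
GeForce RTX 4090,2022,450
"""


def load_rows(text):
    """Parse the CSV text into (name, year, tdp_watts) tuples, in file order."""
    reader = csv.DictReader(io.StringIO(text))
    return [(r["GPU"].strip(), int(r["Year"]), int(r["TDP_W"])) for r in reader]


def mean_tdp(rows, first_year, last_year):
    """Average TDP of the cards released in the inclusive year range."""
    return mean(tdp for _, year, tdp in rows if first_year <= year <= last_year)


if __name__ == "__main__":
    rows = load_rows(DATA)
    plateau = mean_tdp(rows, 2010, 2018)  # assumed plateau window
    name, year, tdp = rows[-1]            # most recent card in the list
    print(f"Mean TDP 2010-2018: {plateau:.0f} W")
    print(f"{name} ({year}): {tdp} W, {tdp / plateau:.2f}x the plateau mean")
```

With these numbers the 2010 to 2018 average comes out at about 238 W, so "around 250W" is a fair summary, and the RTX 4090 sits at nearly twice that.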

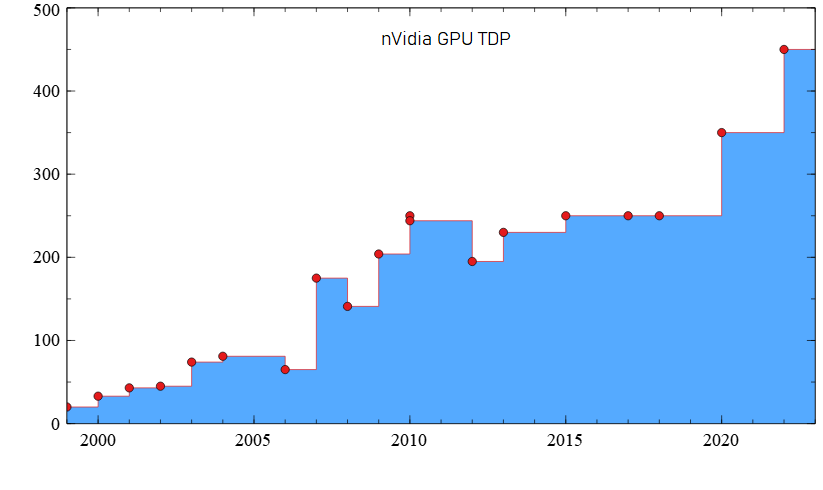
