Nvidia's next evolution to keep it the gold standard for VRR?

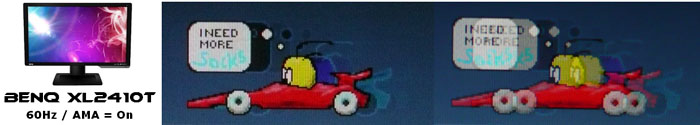

Not quite fully related to the above, but finally something that shows what sets G-SYNC apart from Adaptive-Sync/FreeSync! This was always the main advantage of monitors with a G-SYNC module over those without one. It's not really required for OLED, due to how OLED handles motion and its instantaneous pixel response, but for LCD screens it was, and still is, quite the game changer.

blurbusters.com


G-SYNC Displays Dazzle At CES 2024: G-SYNC Pulsar Tech Unveiled, G-SYNC Comes To GeForce NOW, Plus 24 New Models

NVIDIA G-SYNC Pulsar is the first and only display tech to deliver flawless variable frequency strobing, variable refresh, and variable overdrive, together establishing a new gold standard for motion clarity and stutter-free gameplay; the ASUS ROG Swift PG27 series G-SYNC monitor with Pulsar...

www.nvidia.com


NVIDIA Announces ULMB 2 — Improved Motion Blur Reduction - Blur Busters

A Better Motion Blur Reduction System for Gaming Monitors: Long-time Blur Busters readers know that Blur Busters started because of strobe backlight systems for reducing motion blur on displays, including our famous NVIDIA LightBoost HOWTO from over a decade ago! NVIDIA has a news release about...
