I decided I wanted to build a server/NAS to help with backups etc., so I've cobbled together my spare parts and collected a few freebies over the last few months. Here's how it looks at present:

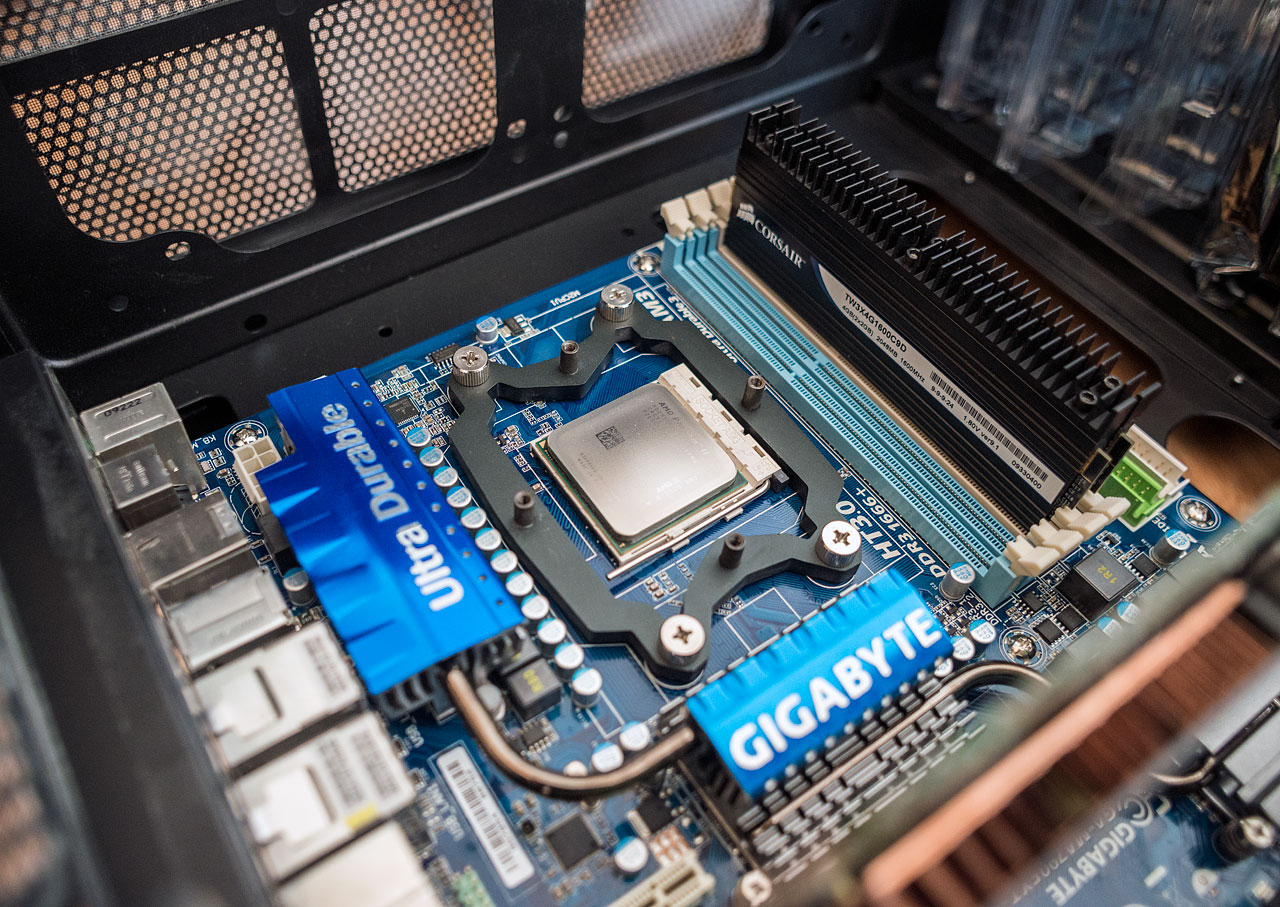

Gigabyte MA-790FXT-UD5P

AMD Phenom II X4 955

Corsair Dominator 4GB 1600

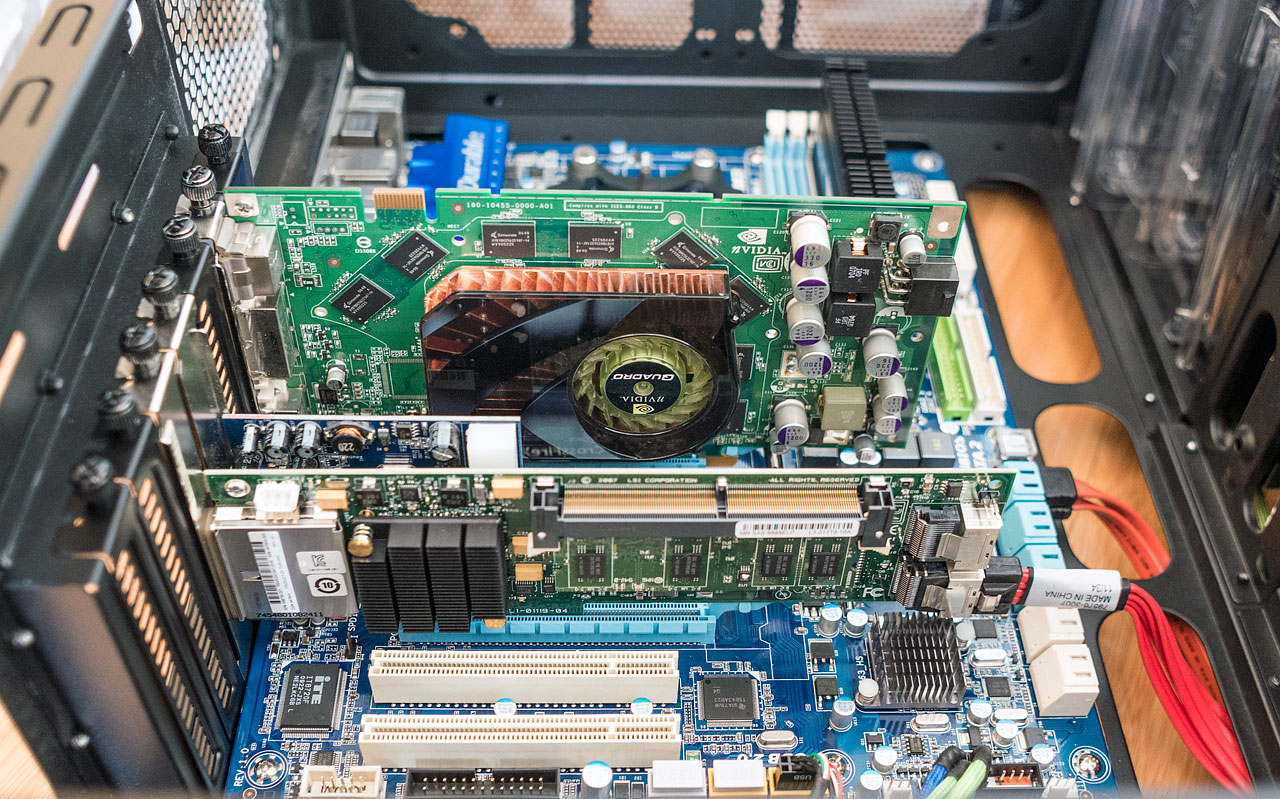

Nvidia Quadro FX3500

LSI MegaRAID SAS 8888ELP

Delta 850W PSU (WTX form factor, so it may well be swapped for my Corsair TX650 if that will fit in the Phanteks; otherwise I'll probably have to buy another PSU)

Belkin USB 3.0 card

Prolimatech Megahalems

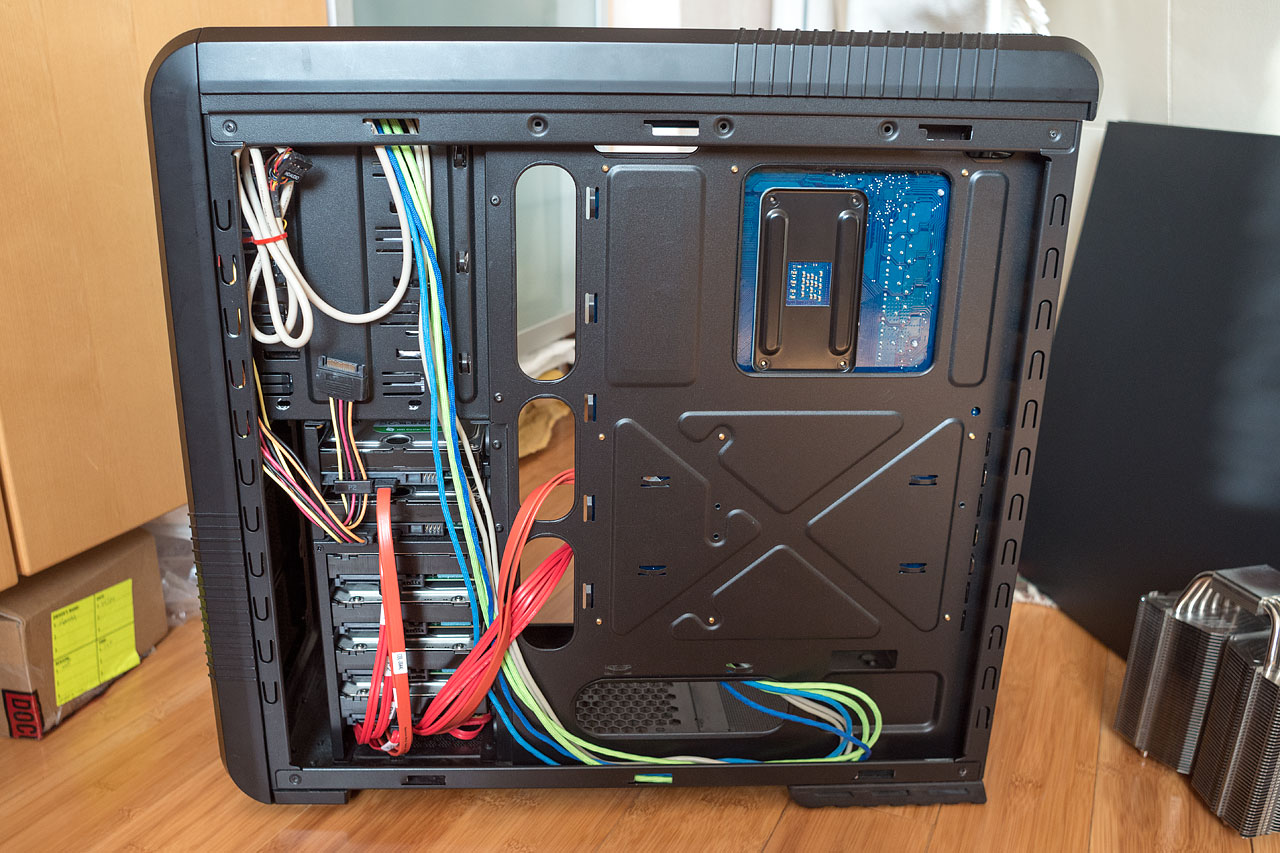

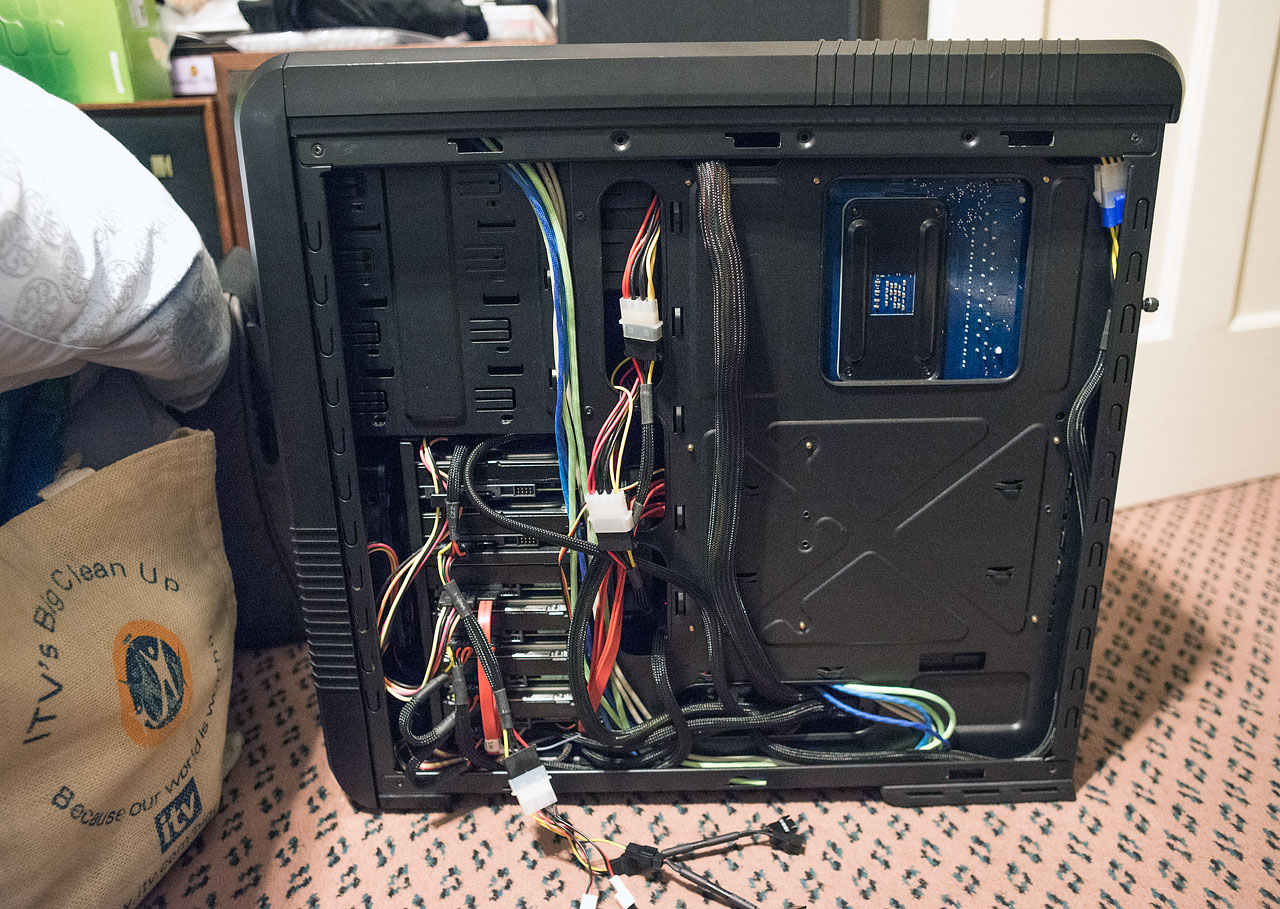

Coolermaster CM690-II

Hard drive wise, at present I have a load of different disks ranging from 500GB to 1TB (6x 500GB, 1x 640GB and 1x 1TB). I will probably look at getting several WD SE 2TB disks to make up the array. The card doesn't support disks larger than 2TB, but 8 of those would give me more than enough storage space at present. If I find a suitable expander, I should be able to add more in the future if needed (or just get a different HBA).
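For anyone wanting to sanity-check the numbers, here is a rough sketch of the capacity arithmetic. The RAID levels are only an assumption for illustration, as I haven't settled on one, and `usable_tb` is a made-up helper name. Figures are in decimal TB/GB as printed on the drive labels, so the OS will report a bit less.

```python
def usable_tb(disk_count, disk_tb, parity_disks):
    """Usable space when `parity_disks` disks' worth of capacity goes to redundancy."""
    if disk_count <= parity_disks:
        raise ValueError("need more disks than parity disks")
    return (disk_count - parity_disks) * disk_tb


# Disks on hand today: 6x 500GB, 1x 640GB, 1x 1TB
current_disks_gb = [500] * 6 + [640, 1000]
current_raw_gb = sum(current_disks_gb)

# Planned array: 8x 2TB (2TB being the per-disk limit of the card)
planned_raw_tb = usable_tb(8, 2, parity_disks=0)    # no redundancy
planned_raid5_tb = usable_tb(8, 2, parity_disks=1)  # assumed single parity
planned_raid6_tb = usable_tb(8, 2, parity_disks=2)  # assumed double parity

print(f"Current mixed disks, raw: {current_raw_gb} GB")
print(f"8x 2TB raw: {planned_raw_tb} TB")
print(f"8x 2TB single parity: {planned_raid5_tb} TB")
print(f"8x 2TB double parity: {planned_raid6_tb} TB")
```

Even with two disks' worth of redundancy, the planned array would hold more than twice what the current mix of disks adds up to.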

