
As some of you who have been reading Shads fibre channel setup thread may be aware, I have been looking at InfiniBand rather than FC for a storage network.

Here is the equipment so far:

- 4x Mellanox ConnectX DDR (20Gbps) InfiniBand HCAs (used, MHGH28-XTC): US$75 each.

- 1x Mellanox ConnectX-2 QDR card for one of my Dell C6100 server nodes (used, mezzanine card): US$199.

- 1x Flextronics F-X430046 24-port 4x DDR InfiniBand switch (used): US$200 (from a friend).

- 4x 8 m CX4 -> CX4 DDR InfiniBand cables (new): US$15 each (a fantastic deal, as these usually cost over US$200 new, but the seller only had the 8 m length available).

- 1x 2 m QSFP -> CX4 QDR InfiniBand cable (new): US$105.

Item total (approx): US$864 + shipping (from the US to Singapore, where I currently am).
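As a quick sanity check on that total, the line items can be added up in a few lines of Python. This is only a sketch: the item names are shortened, and it assumes the US$15 cable price is per cable, which is what makes the sum match the quoted US$864.

```python
# (description, quantity, unit price in US$), taken from the list above
items = [
    ("Mellanox ConnectX DDR HCA (MHGH28-XTC)", 4, 75),
    ("Mellanox ConnectX-2 QDR mezzanine card", 1, 199),
    ("Flextronics F-X430046 24-port DDR switch", 1, 200),
    ("8 m CX4 to CX4 DDR cable", 4, 15),  # assumption: US$15 is per cable
    ("2 m QSFP to CX4 QDR cable", 1, 105),
]


def total_cost(rows):
    """Sum quantity * unit price over all rows."""
    return sum(qty * price for _, qty, price in rows)


total = total_cost(items)
print(f"Item total: US${total}")  # prints "Item total: US$864"
```

Shipping is not included, as in the figure quoted above.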

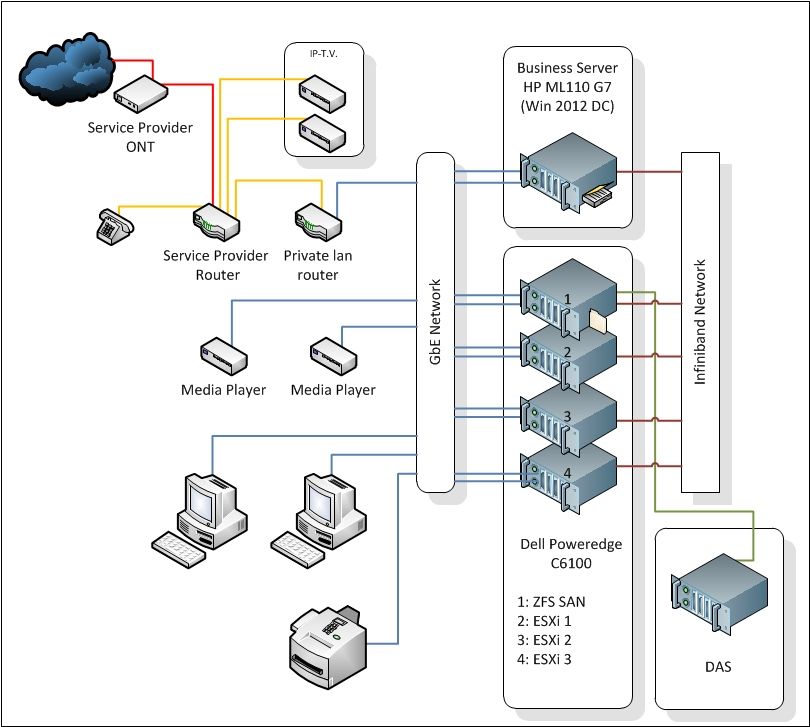

The current setup looks like this...

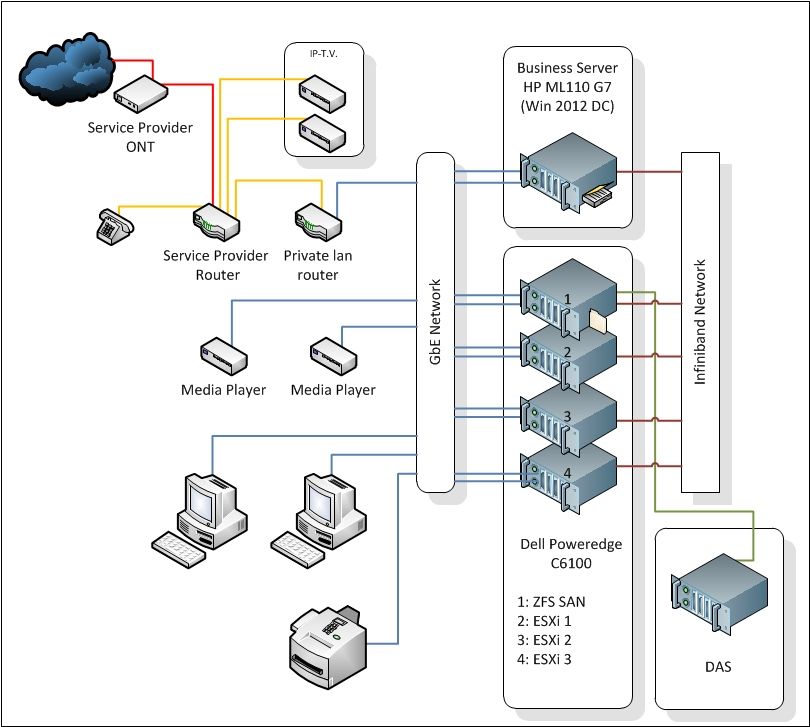

This is the network the InfiniBand equipment will be part of (planned completed setup).


There are a number of reasons for going with InfiniBand:

- Equipment is plentiful on the second-hand market.

- Equipment is fairly cheap if you go a couple of generations back.

- Switches are generally cheaper than 10GbE ones on the second-hand market.

- Cables can be had for a decent price if you are lucky and hunt around.

There are also some downsides:

- Older InfiniHost cards need onboard RAM to work with Solaris (from what I have read).

- Cables can be bulky and difficult to manage.

- Drivers and standards are not universal across operating systems.


RB
