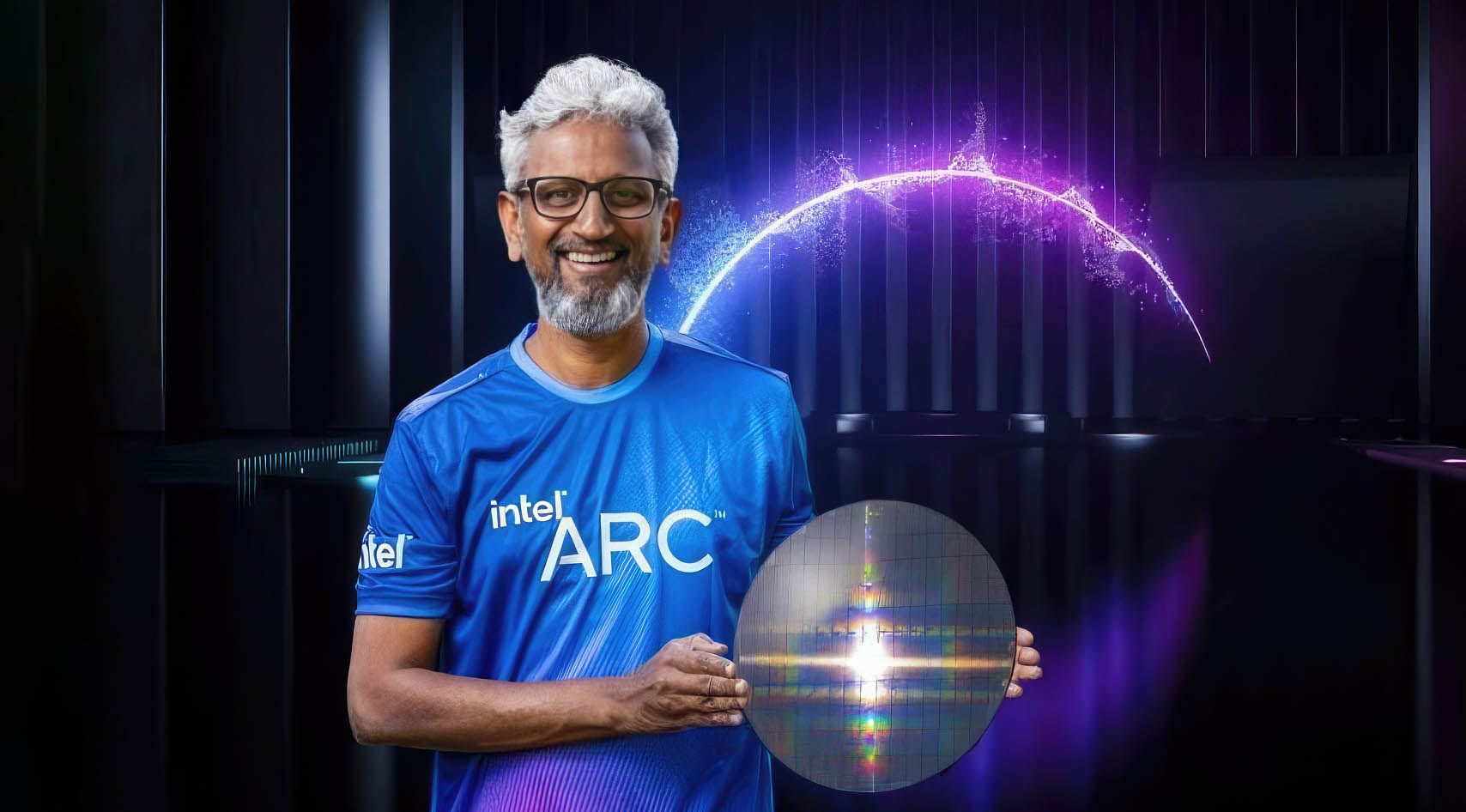

He was a manager, with good experience of the software side and some knowledge of the hardware side. But you need to have a clue about software to know where the hardware needs to be good. He worked at S3 Graphics, ATI, AMD, Intel and Apple over nearly 30 years. He can't be that bad if so many leading companies hired him.

Raja Koduri was director of advanced technology development at ATI from 2001 to 2009. Many of us older folk know of him because of that. He joined ATI to work on the Radeon R300, aka the 9700 series, and the follow-up X800 series. The reality is that while he was at ATI, they made decent hardware most of the time (OK, the X1800 had some issues and the R600 was a flop), but the 9000 series, X800 series, X1900 series, HD 4000 series and HD 5000 series were all developed while he was there. The R300 was the benchmark DX9 graphics hardware for years.

He was at Apple, and was behind the push to Retina displays and probably their move to their own GPU designs.

When he came back to AMD a second time, you had Polaris, Vega and RDNA1/RDNA2. Consider that Polaris and Vega had to be made on a subpar GF 14nm process, because the people running AMD before Rory Read put the company into a lose/lose scenario to stave off bankruptcy. Just look at AMD's R&D budget for graphics at the time against Nvidia's. 70% of AMD's R&D went towards CPUs, and their total R&D was still less than Nvidia's. Vega was first released as a compute card called Radeon Instinct. Only two Polaris designs were made.

Because of all the moaning from AMD fans, they re-released these cards to consumers and had to take a loss on them. That is one of the biggest mistakes AMD made: they should simply not have bothered, as it was a loss-leader card.

Yet Polaris wasn't a flop in the end, was it? Same with Vega. It stayed relevant for 6 years as a uarch, which is impressive since it was designed on a shoestring budget. Vega IGPs are still part of the Ryzen 5000 series APUs, and they still beat what Intel has. CDNA1 and CDNA2 are Vega derivatives, and RDNA1 was developed while he was there, which by extension means RDNA2 as well.

It takes years to develop new designs,and more importantly get the design teams together.

The guy has worked at S3 Graphics, ATI, AMD and Intel since 1996. Have people not noticed he likes jumping into challenging scenarios? S3 was already having issues when he joined, and ATI in 2001 was a massive underdog compared to Nvidia. The R300 was the design that made ATI's name in the industry.

He joined Apple as "Director, Graphics Architecture", meaning he had a hand in that too. So, like Keller, he stepped into something different. He rejoined AMD literally as they were trying to stave off bankruptcy. He went to Intel to try and push into a mature market with two incumbents. Now he is going to found an AI gaming software startup.

If he was so bad, he would have been out of the industry a long time ago, not still in it after nearly 30 years. Even Jim Keller only stays a few years at many places, yet gets all the credit for those companies' achievements years later. Jim Keller had good things to say about him, IIRC.

Raja Koduri gets dunked on even though a number of the designs he was involved with have stood the test of time.

Plus, Jim Keller joined Intel only in 2018 and left two years later. Who do you think got Jim Keller on board at Intel? Raja Koduri. Are people blaming Keller for Intel's current design problems? Why did he leave so quickly? He spent more time at AMD. Intel is having problems left, right and centre, in almost all of its units. They let go of thousands of older, more experienced workers:

> People over 40 were two-and-a-half times more likely to lose their jobs in this spring's layoffs than Intel employees under 40.
>
> www.oregonlive.com

CPU, NAND, GPU, etc.: the company has had too many people butting heads internally for the last 5 to 8 years.

Trying to blame Raja Koduri for all of it, when so many people have left, comes across as people being annoyed that AMD couldn't beat Pascal on a fraction of the budget. It's not only the hardware but the whole software stack for hundreds, if not thousands, of games. If it were so easy, look at the numerous Chinese dGPUs, some made on 7nm, which have horrible performance due to poor drivers and a lack of gaming support. Apple has taken years to move to its own GPU uarch (they started moving towards it in 2014), and even then, look at its gaming performance. This is a company that controls its whole ecosystem. I remember back in the late 90s and early 2000s, when there were more dGPU companies around, how software could make or break things.

Lisa Su made the correct decision to push more budget to CPU R&D. When AMD was competitive, people just bought more Nvidia graphics cards anyway, irrespective of drivers, power consumption, etc.

Yet where is all the moaning that AMD still lost to Nvidia with RDNA2 on a far better node, or that Nvidia is now ahead again with Ada Lovelace? This time R&D spending is much higher, and AMD has gone back to TSMC full time. They had access to TSMC 4nm (their new APUs are made on it). Why not make their new dGPUs on TSMC 4nm, like Nvidia? They clearly think APUs are more important. The reality is that dGPUs are just a second-line priority for AMD, while Nvidia throws money at them.

The second reality is that Intel is cancelling projects everywhere and there is massive infighting. They have no clear vision for anything. Just look at their advanced packaging technologies, things such as L4 cache, etc. Intel had all the technology to out-AMD AMD years ago. They had a process node advantage over AMD until 2019, and it wasn't until Zen 3 that AMD finally fielded a faster core. They had decades to improve the software side of their IGPs.