There is a VAST amount of scientific literature regarding motion, blur and how your eyes and brain process data. And none of it would suggest any benefit beyond about 30Hz.

That's Trump and Putin level BS.

TV broadcasting standards were designed precisely with image rates well above Hollywood's slide show, so that motion, especially in sports, would be handled reasonably.

The 50/60Hz difference simply came from the need to keep the image rate synced with the mains electricity frequency, because otherwise artificial lighting would have made the image flicker.

Just like how 60Hz CRT monitors (based on the American standard) showed a flickering image whenever they were visible in PAL TV broadcasts.
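A simplified sketch of why that flicker appears: assuming the camera effectively samples the screen at the PAL field rate of 50Hz, a 60Hz refresh folds down to a slow 10Hz beat, while a source locked to the same rate shows no beat at all. The function name `alias_frequency` is hypothetical, and this ignores phosphor decay and camera exposure time, so treat it as an illustration of the arithmetic only.

```python
def alias_frequency(signal_hz: float, sample_hz: float) -> float:
    """Apparent frequency of a periodic signal sampled at sample_hz.

    Simplified model: folds signal_hz into [0, sample_hz / 2] (Nyquist folding),
    ignoring phosphor decay and camera exposure effects.
    """
    # Distance from the signal frequency to the nearest multiple of the sampling rate
    remainder = signal_hz % sample_hz
    return min(remainder, sample_hz - remainder)


# 60Hz CRT filmed at the 50Hz PAL field rate: visible 10Hz beat
print(alias_frequency(60, 50))
# Source synced to the capture rate (e.g. both on 50Hz mains): no beat
print(alias_frequency(50, 50))
```

This is the same reason broadcasters tied the image rate to the mains frequency: anything running in lockstep with the capture rate aliases to 0Hz and looks steady.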

And those 60Hz CRTs were plain horrible monitors, because the flicker was visible on anything above "postcard" size.

And the flicker of even those small screens was easy to see if the monitor was outside the center of vision:

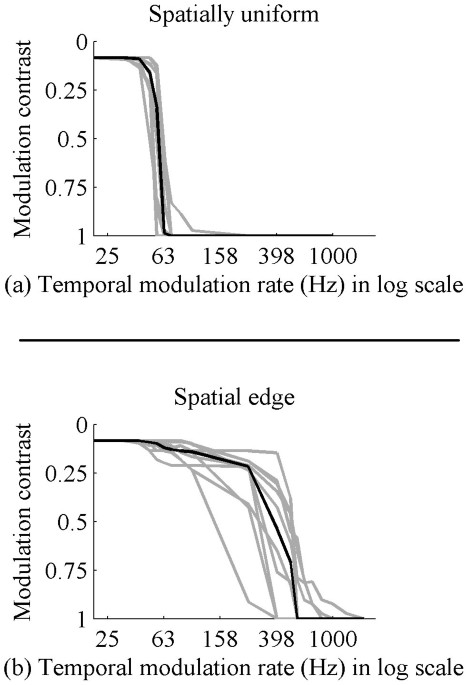

The center of the eye's field of view is optimized for spatial resolution, but moving away from it, the balance shifts toward higher temporal resolution.

Realistically, 75Hz was the bare minimum refresh rate for a CRT monitor not to be uncomfortable because of flicker, and I ran my last 19" CRT at 85Hz.

LCDs, on the other hand, hold the image static until the next refresh, which avoids brightness flicker, and hence manufacturers started out by sticking to the old 60Hz.

(to lower signal bandwidth and processing power needs; also, liquid crystals would simply have been too slow for anything faster)

I don’t know about 48Hz movies, but you never really hear anyone complain that the cinema is jerky or blurry, do you?

Given that most people work on 30-60Hz monitors and have no complaints.

Because criticism is always silenced by "Earth is the center of the universe" Church Inquisition types like you, defending outdated technological compromises!

I've always said 24Hz belongs in a museum of medieval history.

It's simply incapable of handling anything faster than crawling motion across a larger portion of the image.
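To put a rough number on "crawling motion": assume, purely as a hypothetical, an object panning across a 1920-pixel-wide frame in one second. The jump between consecutive frames is just its speed divided by the frame rate. The function `pixels_per_frame` and the pan speed are illustrative assumptions, not measurements.

```python
def pixels_per_frame(width_px: int, crossing_time_s: float, frame_rate_hz: float) -> float:
    """Distance an object moves between consecutive frames, in pixels.

    Assumes constant speed: the object crosses width_px in crossing_time_s seconds.
    """
    speed_px_per_s = width_px / crossing_time_s
    return speed_px_per_s / frame_rate_hz


# Hypothetical pan: full 1920 px width in 1 second
for rate in (24, 50, 60, 120):
    step = pixels_per_frame(1920, 1.0, rate)
    print(f"{rate:>3} Hz: {step:6.1f} px per frame, {1000 / rate:5.1f} ms per frame")
```

Under these assumptions, 24Hz means 80 px jumps every 41.7 ms, versus 32 px every 16.7 ms at 60Hz.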

Watching the opening scene of The Day After Tomorrow in a movie theater was a headache-inducing experience because of the extremely jerky motion.

And a drunkard's vomit amount of blur is hardly any better.

There have been no monitors below 60Hz in the modern home PC era!

Which just shows your ignorance of facts.