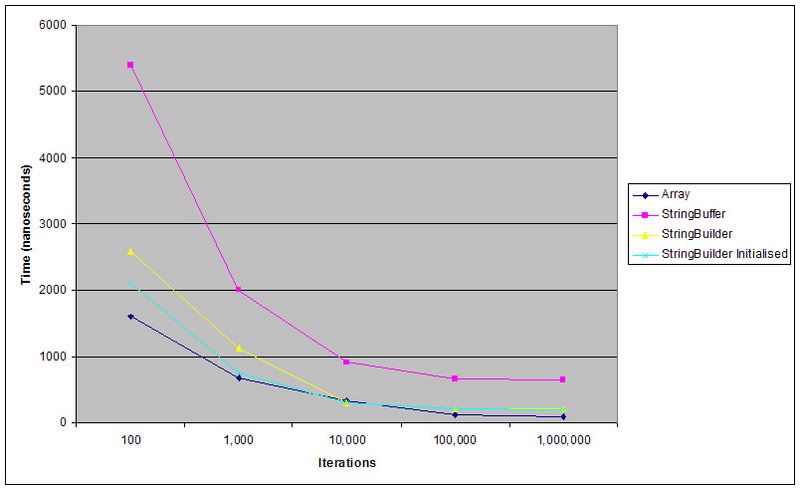

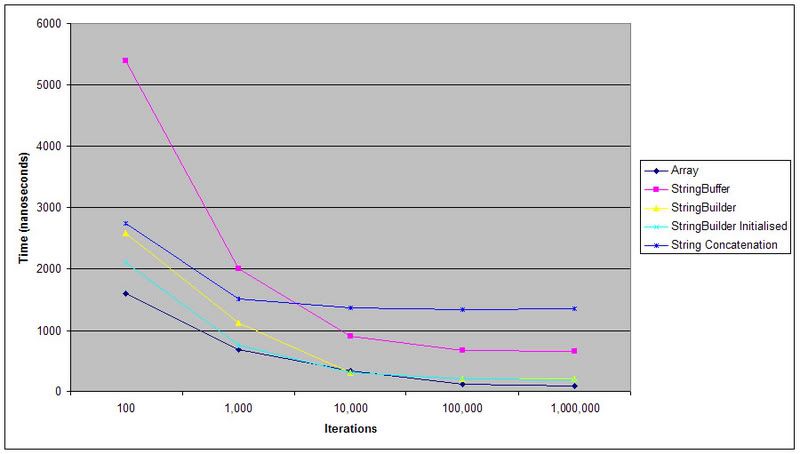

Our discussion here shows how much minor changes to the same method can affect performance.

Many moons ago I was a software developer, and back in 99/00 I was working on a project for a major multinational.

Our system was like the Borg, steadily taking over from a pile of legacy systems as the authoritative repository for client information. Once we held all of a legacy system's client data, the associated functionality would be disabled on that system and any changes would be made through ours. Changes were synced back to the legacy systems through various means.

We used CORBA, COM (through a COM-CORBA bridge) for a Smalltalk system, MQSeries messaging middleware for the AS/400s, and even email for some transactions! For one crusty old mainframe, we generated text files and used FTP.

It was these text files that ended up causing a problem. The setup had worked fine for a couple of years, as these things often do...

We had completed a component that would communicate with Dun and Bradstreet's Worldbase of company information. The aim was to update the client information we held, such as addresses, industry codes, etc.

The first time we exchanged information with DnB, it was done via couriered CD (encrypted, I'm glad to say), as there were rather a lot of records and DnB were having trouble launching their automated online system. More FTP; no fancy schmancy APIs exposed over the net in those days.

One sunny Sunday morning I traipsed into the office with the entire team for a major release: new functionality, database changes and new servers. The import from DnB completed successfully and the system came back up.

Yay, easy work for a day in lieu!

Then one of our components went down. We restarted it, it maxed out the CPU for a while, and then it crashed again with an out-of-memory error. Hmmm. We whacked up the max heap size and restarted. Waited... and waited some more... and then some more... The project manager started mumbling about rollback strategies and cutoff times... We waited some more, and praise the Lord, after something like three hours it spat out a file.

The following day I located and fixed the problem, though the fix would have to wait for the next release to go into production.

For reasons known only to whoever wrote it, this particular component was building the entire contents of the file in memory using Strings, basically like this:

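```java
String tmp = "";

// "more lines" in the original; hasMoreLines() is a hypothetical name for that check
while (someFileFormatterObject.hasMoreLines()) {
    // Strings are immutable: each += allocates a new String
    // and copies everything accumulated so far into it
    tmp += someFileFormatterObject.getNextLine();
}

someOtherObject.writeFile(tmp);
```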
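A rough back-of-the-envelope sketch of why the loop above blows up, assuming (hypothetically) n lines of roughly m characters each. Because each `+=` copies the whole string built so far, the i-th iteration copies about i·m characters, so the total work grows with the square of the number of lines:

$$\sum_{i=1}^{n} i\,m \;=\; m\,\frac{n(n+1)}{2} \;=\; O(m\,n^2)$$

For a purely illustrative n = 100,000 lines of m = 100 characters, that is 100 × 100,000 × 100,001 / 2 ≈ 5.0 × 10^11 characters copied, plus a discarded intermediate String on every iteration for the garbage collector to deal with. Appending to a growable buffer instead copies on the order of m·n = 10^7 characters (the buffer's occasional resizing adds at most a constant factor).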

I modified two lines of code and the execution time went from hours to imperceptible. I've been a StringBuffer evangelist ever since.
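The original fix isn't shown, so here is only a minimal, self-contained sketch of the same idea, not the actual production change. It assumes the lines are already available as a `List<String>` and that each line needs a trailing newline; `ReportBuilder`, `buildWithConcat` and `buildWithBuffer` are hypothetical names. It uses `StringBuffer` as in the story; in single-threaded code `StringBuilder` (Java 5+) offers the same `append` API without the synchronization overhead.

```java
import java.util.ArrayList;
import java.util.List;

public class ReportBuilder {

    // Hypothetical reconstruction of the slow version: every += creates a
    // new String and copies everything built so far, so total work is O(n^2).
    static String buildWithConcat(List<String> lines) {
        String tmp = "";
        for (String line : lines) {
            tmp += line + "\n"; // assumption: each line needs a newline
        }
        return tmp;
    }

    // Buffered version: append() writes into a growable char array,
    // so total work is roughly linear in the size of the output.
    static String buildWithBuffer(List<String> lines) {
        StringBuffer tmp = new StringBuffer();
        for (String line : lines) {
            tmp.append(line).append('\n');
        }
        return tmp.toString();
    }

    public static void main(String[] args) {
        // Hypothetical input: 5,000 short fixed-format records.
        List<String> lines = new ArrayList<>();
        for (int i = 0; i < 5_000; i++) {
            lines.add(String.format("REC%08d|SOME CLIENT DATA", i));
        }

        long t0 = System.nanoTime();
        String slow = buildWithConcat(lines);
        long t1 = System.nanoTime();
        String fast = buildWithBuffer(lines);
        long t2 = System.nanoTime();

        System.out.println("same output: " + slow.equals(fast));
        System.out.printf("concat: %d ms, buffer: %d ms%n",
                (t1 - t0) / 1_000_000, (t2 - t1) / 1_000_000);
    }
}
```

Both methods produce identical output; only the cost differs, and the gap widens quadratically as the number of lines grows, which is why a file that was fine for a couple of years suddenly took hours after a big import.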

lol, that turned out to be quite a long story.
