World ran out of VRAM, needs to catch up. Just need FarCry 7 to be announced.

You mean like this statement? (pot ... kettle)

With bats, chains and knives.

Using just TLOU, what would you say has happened here with RDNA 2 and the 3090?

And it's basically the same for the other listed titles, i.e. Hogwarts, forgotten

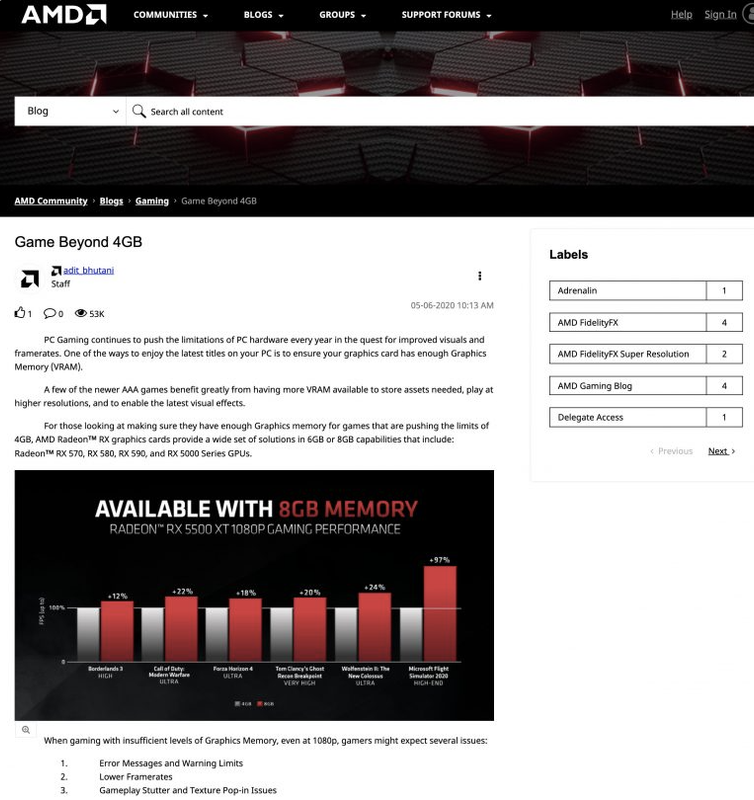

I see all the talk is about Nvidia, but let's not forget AMD are guilty of short-changing customers on VRAM too.

This was what AMD said in 2020

Then over 2 years later the successor arrives with just 4GB and offers less performance for more money.

You do realise the game was designed to be played at 30fps all the way back in the PS3 days? Then came the PS4 remaster, and I think only the PS4 Pro had the 60fps performance mode, followed by the PS5 with the remake. That's also why the game feels broken with a mouse when you look around: it was never designed for the camera to move that fast, which is why a controller is the best way to play it.

Let's move on from the kind of posts that get threads like this locked. I get your point, as you know, but you have posted these graphs often enough that we all know them by heart now. Let's try to keep this topic open and on topic, without walls of text or images showing something I'm sure 99% of people here already know.

If going to debunk, provide evidence

It's funny you posted that, as I was going to do the same and remind everyone it's not an AMD vs NVIDIA thing but something they all do at some point, be it VRAM or some other hardware or feature issue. Thanks for posting it, as that was a classic: at one stage they tried to hide it, got called out on it, and it came back after "technical issues".

The technical issue was that they had sent that slide to the shredder!

Let me ask it a different way: why is the 24GB 4090 getting considerably higher fps than the 24GB 3090?

4090:

- Transistors: 76,300 million
- Base Clock: 2235 MHz
- Boost Clock: 2520 MHz
- Memory Clock: 1313 MHz (21 Gbps effective)

3090:

- Transistors: 28,300 million
- Base Clock: 1395 MHz
- Boost Clock: 1695 MHz
- Memory Clock: 1219 MHz (19.5 Gbps effective)

The 4090 has 2.7x the transistors of a 3090 and much higher core and memory clocks, but is 55-65% faster depending on the game. Neither is suffering VRAM issues in games so far, but with next-gen games they will be coming close at the extreme ultra settings, if not sooner, as mods for certain games can already use more than 24GB.
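For anyone who wants to check the ratios, here is a rough sketch in Python. It uses only the spec figures quoted in this post, which are not independently verified here; the dictionary keys and the `ratios` helper are made-up names for illustration.

```python
# Spec figures as quoted in the post above (taken as-is, not verified).
specs = {
    "4090": {"transistors_m": 76_300, "base_mhz": 2235, "boost_mhz": 2520, "mem_gbps": 21.0},
    "3090": {"transistors_m": 28_300, "base_mhz": 1395, "boost_mhz": 1695, "mem_gbps": 19.5},
}


def ratios(new, old):
    """Return new/old for every spec field the two cards share."""
    return {key: new[key] / old[key] for key in new if key in old}


r = ratios(specs["4090"], specs["3090"])
for key, value in r.items():
    print(f"{key}: {value:.2f}x")
```

On these numbers the transistor count comes out at roughly 2.7x, the boost clock at roughly 1.5x, and effective memory speed at under 1.1x. It says nothing about real-world fps, which depends on the game.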

Keep Calm and Downplay the Lack of VRAM

also

- It's a console port.

- It's a conspiracy against nVidia.

- 8GB cards aren't meant for 4K / 1440p / 1080p / 720p gaming.

- It's completely acceptable to disable ray tracing on nVidia cards while AMD users have no issue.

BoM obviously matters, but segmentation and planned obsolescence are why we are not given the choice of buying a 16GB 6600 XT or 3070, a 20GB 3080, or a 24GB 6700 XT.

So in other words, it has the grunt over the 3090

So it should; it's a card with 2.7x the transistor count and the new generation replacing the 3090. If it went backward and was worse, would you find that normal?

Don't make me use the gif with "I feel the answer is in front of you"

So 3090 has the vram but not the grunt

Never said it didn't have the grunt. Don't put words in my mouth.

Finally we got there.

Think I could use that gif too tbh

How long until this one gets locked?