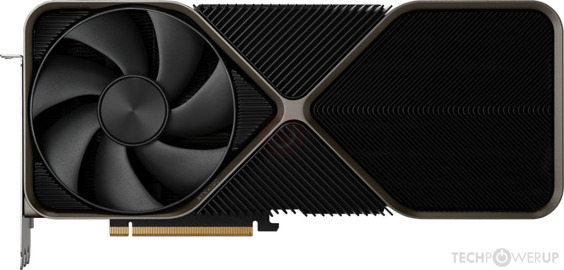

DLSS 2

DLSS 3

Frame generation

Ray tracing

Tensor cores for AI processing

Floating point performance (e.g. 48.74 TFLOPS)

Huge amounts of VRAM

G-Sync

'Low latency' modes

Support for very high frame rates and refresh rates

Fancy new power connectors

Ampere +++

Do these things seem familiar?

None of these things are direct indicators of the performance of the GPU itself. They don't indicate a graphics card with more processing cores or higher pixel/texture fill rates. Nor do they indicate the card's overall 3D graphics (rasterising) performance.

We see these features on the box, decide they are must-have features, and that pushes prices up. I admit that DLSS 2 is a very nice thing to have if you have a 1440p/4K monitor. But AMD is now competitive in resolution upscaling and detail enhancement, so I think we should all seriously consider buying AMD this time around...

Of course, I may end up being a big hypocrite if the RTX 4070/4070 Ti prices seem affordable. Otherwise, based on the prices of the RTX 4080, Nvidia can do one. They have reverted to type, and we are seeing prices similar to (or even higher than) the RTX 2080 Ti's again. And there are endless product variations and re-releases.

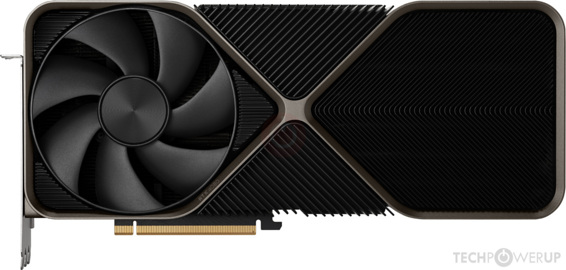
