
I've seen quite a few mixed opinions on this new card, with some forum users and reviewers liking it and others disliking it, so what does everyone here think?

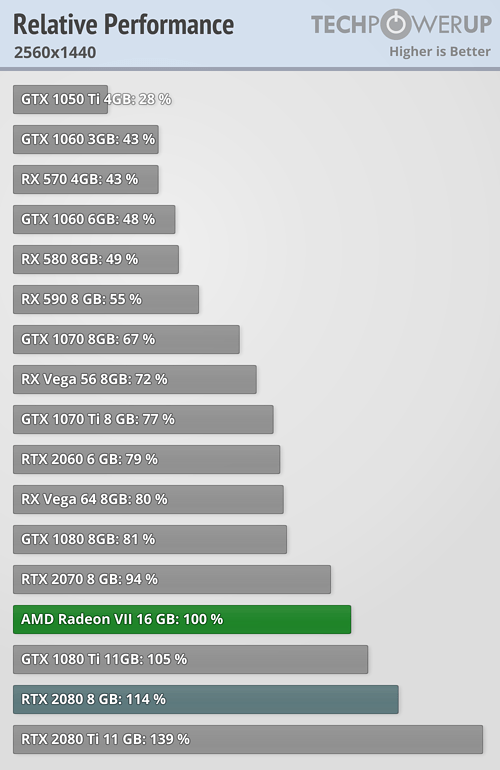

Personally, I am on the fence because it is AMD's fastest gaming card ever and does have good performance. 16GB of VRAM is also a plus, but the noise is meh! It looks like a decent 4K card, in truth, but at lower resolutions it does kind of make me look at other cards. Price is fair in my opinion, but an 8GB variant at £100 cheaper would have been sexy. If I were after a new GPU, would I have jumped on it? Probably not, in truth, but that doesn't make this a bad card. Overclocking needs looking at, and if Roman couldn't do it, something is wrong there. Anyway, a good launch?