Intel currently doesn't have the fastest processor overall; that should be some EPYC or Threadripper.

https://www.extremetech.com/computi...f-intel-skylake-sp-xeon-massive-server-battle

It is worth reading the actual review itself, where Intel has the faster solution just as often, for example:

https://www.anandtech.com/show/11544/intel-skylake-ep-vs-amd-epyc-7000-cpu-battle-of-the-decade/18

And the closing thoughts:

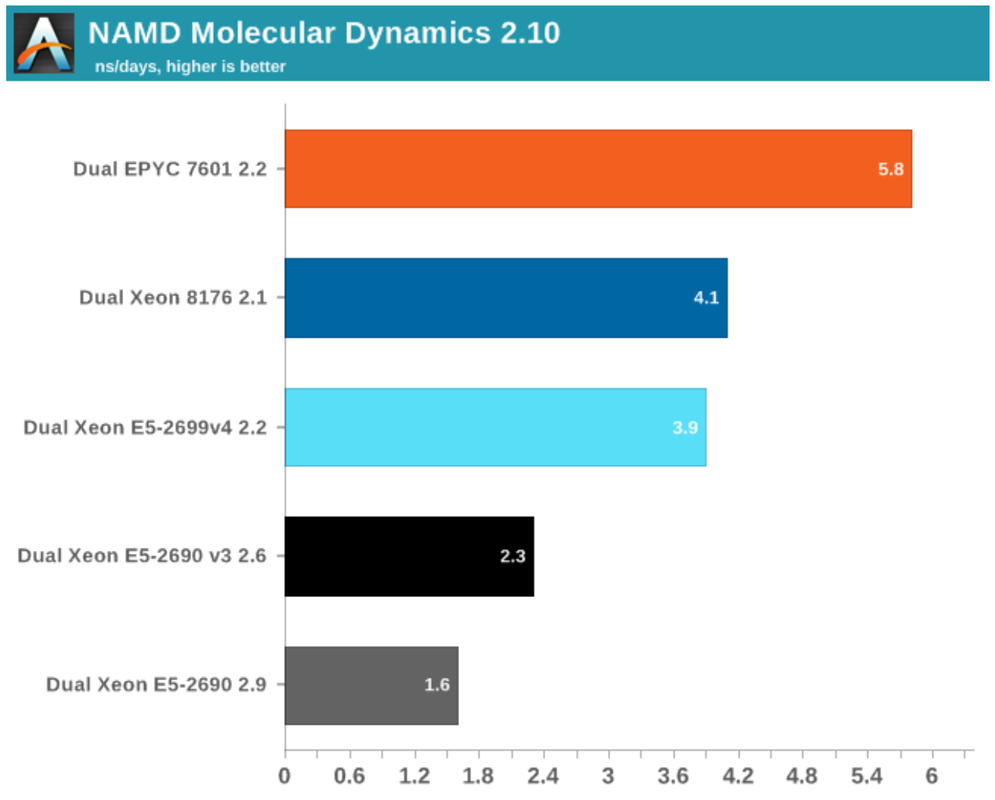

anandtech.com said: With the exception of database software and vectorizable HPC code, AMD's EPYC 7601 ($4200) offers slightly less or slightly better performance than Intel's Xeon 8176 ($8000+). However the real competitor is probably the Xeon 8160, which has 4 (-14%) fewer cores and slightly lower turbo clocks (-100 or -200 MHz). We expect that this CPU will likely offer 15% lower performance, and yet it still costs about $500 more ($4700) than the best EPYC. Of course, everything will depend on the final server system price, but it looks like AMD's new EPYC will put some serious performance-per-dollar pressure on the Intel line.
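To put rough numbers on the performance-per-dollar point in that quote, here is a quick back-of-the-envelope sketch. The prices come from the quote; the relative performance figures are my own simplification of it (EPYC 7601 treated as equal to the Xeon 8176, Xeon 8160 at 15% lower), and $8000 is used as a lower bound for the "$8000+" part. It covers CPU list prices only, not whole-system cost.

```python
# Back-of-the-envelope performance per dollar, using figures from the quote above.
# Assumptions (not measurements):
#   - rel_perf is normalized to the Xeon 8176 = 1.00
#   - the EPYC 7601 is treated as roughly equal to the 8176 ("slightly less or slightly better")
#   - the Xeon 8160 is taken at the quote's expected 15% lower performance
#   - the 8176 price uses $8000 as a lower bound for "$8000+"
chips = {
    "EPYC 7601": {"price_usd": 4200, "rel_perf": 1.00},
    "Xeon 8176": {"price_usd": 8000, "rel_perf": 1.00},
    "Xeon 8160": {"price_usd": 4700, "rel_perf": 0.85},
}


def perf_per_kilodollar(chip):
    """Relative performance delivered per $1000 of CPU list price."""
    return chip["rel_perf"] / chip["price_usd"] * 1000


scores = {name: perf_per_kilodollar(chip) for name, chip in chips.items()}
best = max(scores, key=scores.get)

# How far ahead the EPYC 7601 is of the Xeon 8160 on this metric.
epyc_vs_8160 = scores["EPYC 7601"] / scores["Xeon 8160"]

for name, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {score:.3f} relative performance per $1000")
print(f"EPYC 7601 vs Xeon 8160: {epyc_vs_8160:.2f}x")
```

Under these assumptions the EPYC comes out roughly 30% ahead of the 8160 per dollar, but that says nothing about which chip is faster in absolute terms for a given workload.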

Who has the fastest CPU currently depends heavily on the workload, with neither vendor netting significantly more wins across a broad range of tasks.
