OK, so I have been smashing the data. This thread can serve multiple purposes, really:

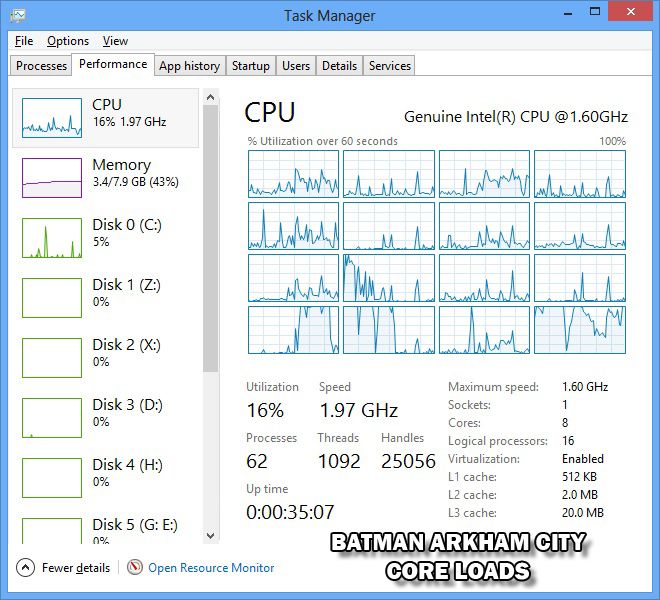

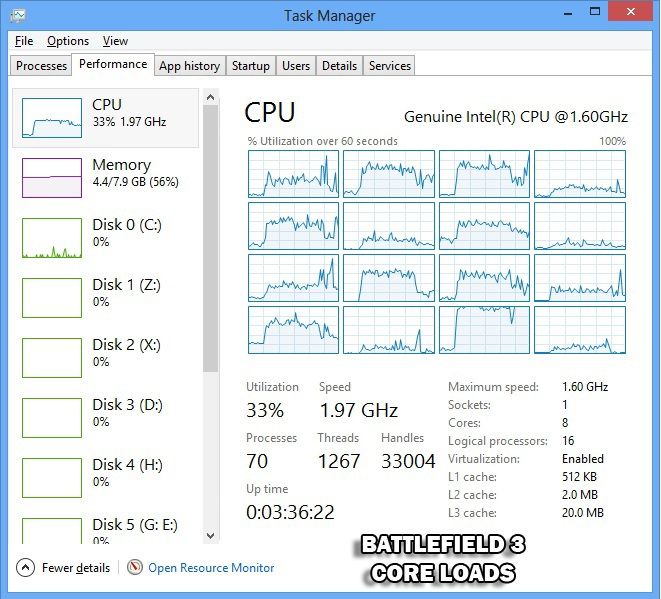

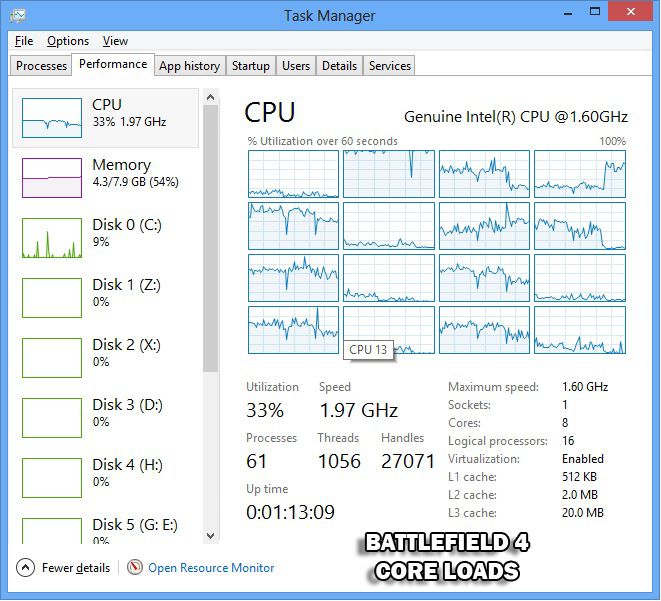

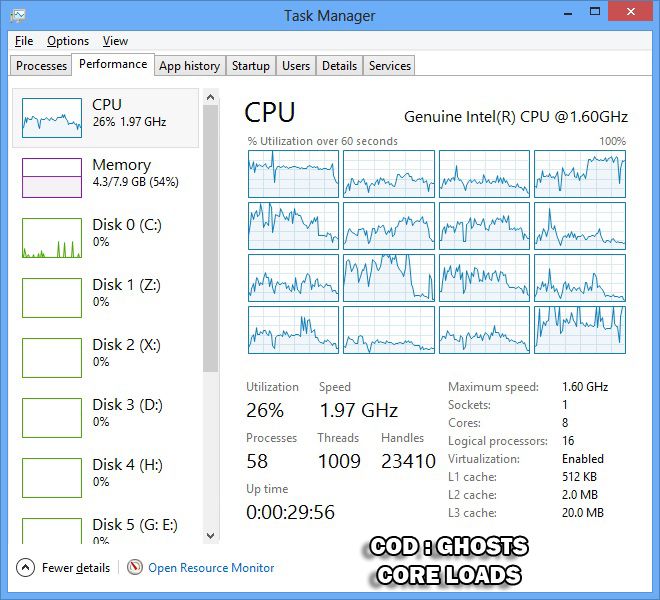

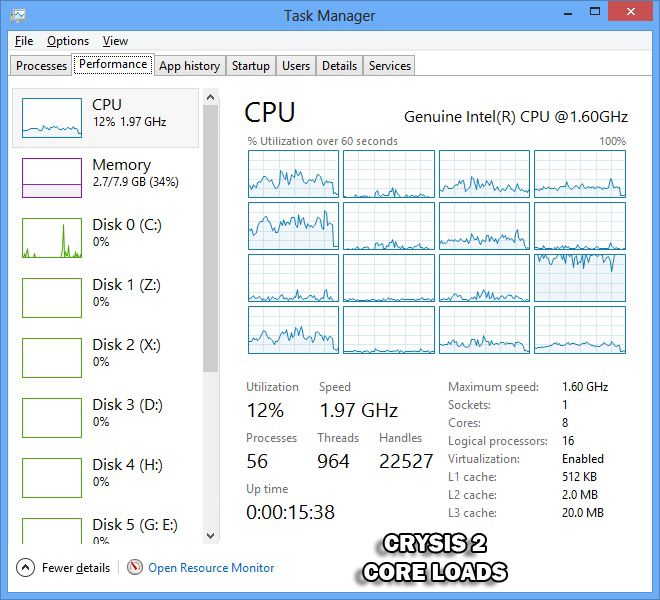

* To see if an 8 core CPU is a viable proposition to a gaming PC.

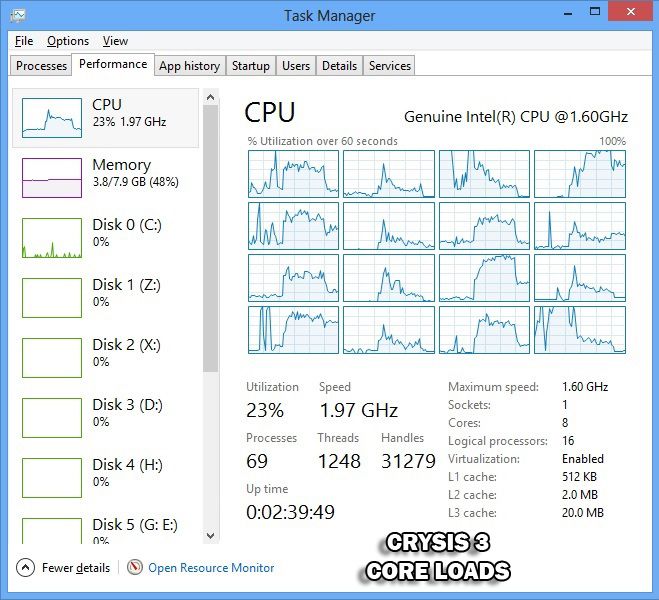

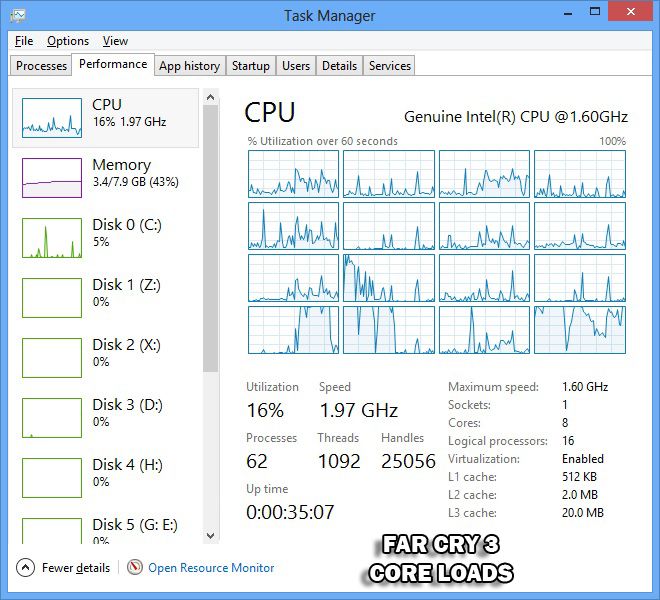

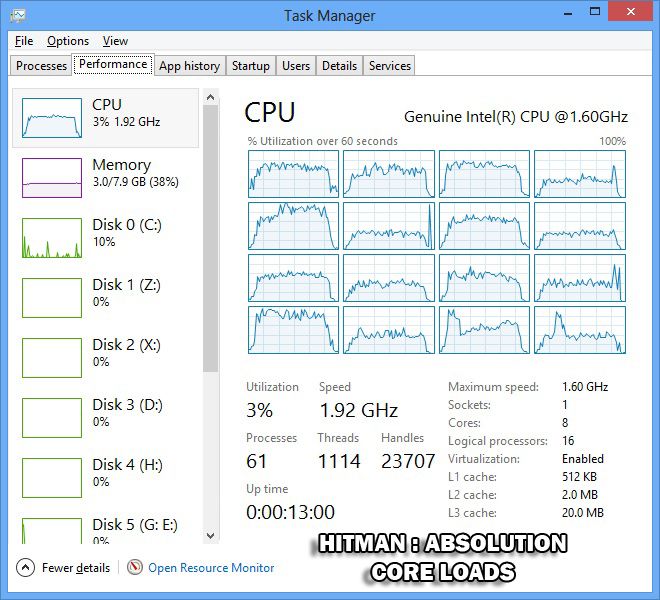

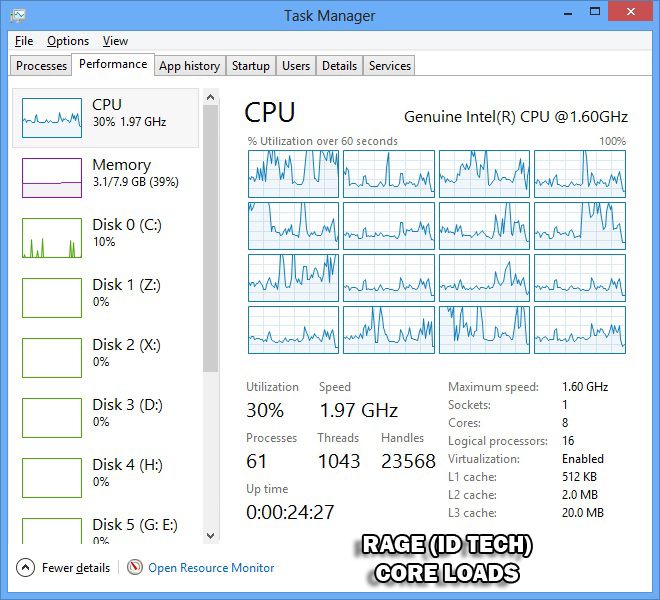

* To see core use in games over the past year, to see if things have changed.

* To give a purpose to the forthcoming 8 core Haswell E CPU in a desktop machine.

* To see if the rumours that Xeons are crap for a gaming rig are true.

I'm going to compare an 8 core* AMD FX 8320 CPU clocked to 4.9ghz (that cost £110) with an Intel Xeon 8 core 16 thread CPU (socket 2011) that I also paid £110 for. Then I am going to analyse which games actually make use of all of those threads and how well they load up the CPU.
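For anyone wanting to repeat the core-use part at home, here's a rough sketch of how the counting could be done. It assumes you've already logged per-thread utilisation percentages with whatever monitoring tool you prefer. The function name, the 50% "busy" threshold and the sample numbers are all placeholders of mine, not measurements from either rig.

```python
from statistics import mean

def busy_threads(samples, threshold=50.0):
    """Return (average load per thread, number of threads at or above threshold).

    samples: list of snapshots, each a list of per-thread utilisation
             percentages (one value per logical thread).
    threshold: hypothetical cut-off for calling a thread "in use".
    """
    if not samples:
        raise ValueError("need at least one snapshot")
    threads = len(samples[0])
    if any(len(s) != threads for s in samples):
        raise ValueError("every snapshot must cover the same threads")
    # Average each thread's load across all snapshots.
    averages = [mean(s[i] for s in samples) for i in range(threads)]
    busy = sum(1 for a in averages if a >= threshold)
    return averages, busy

# Made-up placeholder data for a 4-thread example, NOT real results.
example = [
    [90.0, 80.0, 10.0, 60.0],
    [70.0, 60.0, 30.0, 40.0],
]
averages, busy = busy_threads(example)
print(averages, busy)
```

Feed it 8 values per snapshot for the FX 8320 or 16 for the Xeon and you get a quick count of how many threads a game is really leaning on.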

NOTES.

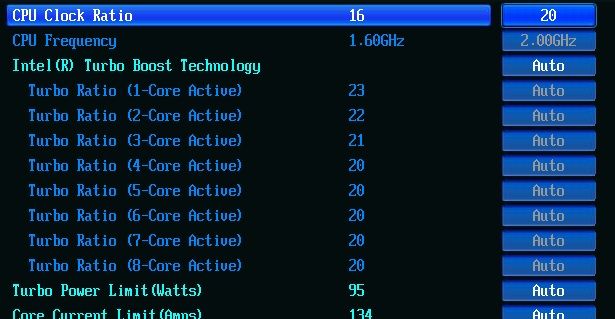

First up, I'm fully aware the Xeon only boosts to 2ghz under load. I cannot overclock it, not even in tiny increments via the FSB, because even 101mhz makes the PC stick in a boot loop. So before the Intel boys dive in with accusations of comparing totally different clock speeds: there's nothing I can do about it. It's not my fault Intel decide to lock their CPUs at given speeds and then set a price structure for speed.

It's not always about speed and figures. At the end of the day a CPU can be perfectly suitable for a task, even if it does not appear as good as another one. You'd actually be amazed just how little CPU power you need most of the time.

Heat and power are not a part of this analysis, simply because I don't care, nor do I want to become embroiled in a stupid argument. This thread is strictly 8 cores only. I don't care about, nor want to know, your results with your overclocked 4770k. Remember - 8 cores. I no longer care about clock speeds and IPC. I want to see more cores, being used, at lower prices. What I'd ideally like to see is a 6/8 core CPU by Intel that simply drops into a socket 1150 without the need to buy ridiculous motherboards or ram.

Hey, a guy can dream, right?

OK. So let's get it on then...

Here are the specs to concentrate on. The AMD rig is as follows.

AMD FX 8320 @ 4.9ghz

Asus Crosshair V Formula Z

8GB Mushkin Blackline running at 1533mhz (offsets with the FSB)

Corsair RM 750 PSU

Corsair H100

AMD Radeon 7990 ghz

OCZ Revodrive 120gb running RAID 0

Windows 8 Professional X64 (note, not 8.1 !)

Then onto the Intel rig. Note, this was a rebuild, so components stayed identical barring the board and CPU.

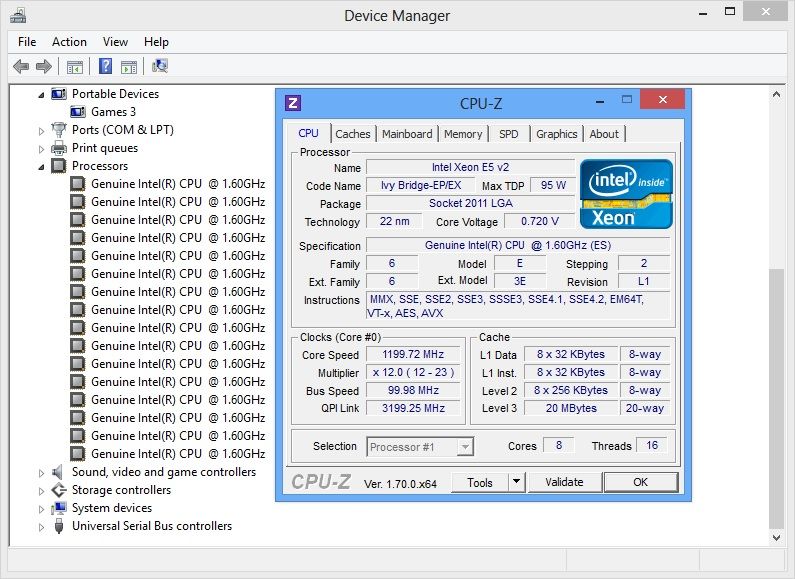

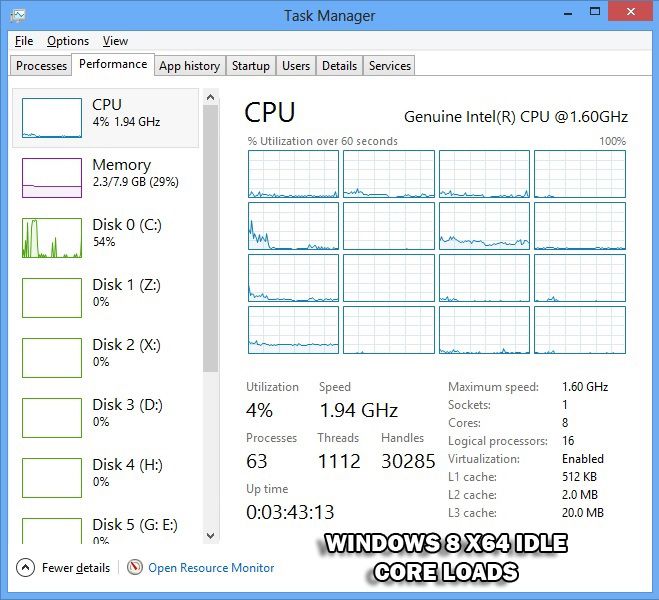

Intel Xeon V2 Ivybridge. 8 core, 16 thread, 2ghz

Gigabyte X79-UD3 motherboard.

8GB Mushkin Blackline running at 1600mhz XMP

Corsair RM 750 PSU

Corsair H100

AMD Radeon 7990 ghz

OCZ Revodrive 120gb running RAID 0

Windows 8 Professional X64 (note, not 8.1 !)

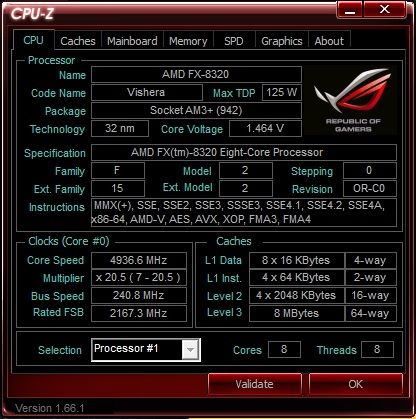

CPU validations.

AMD

Intel

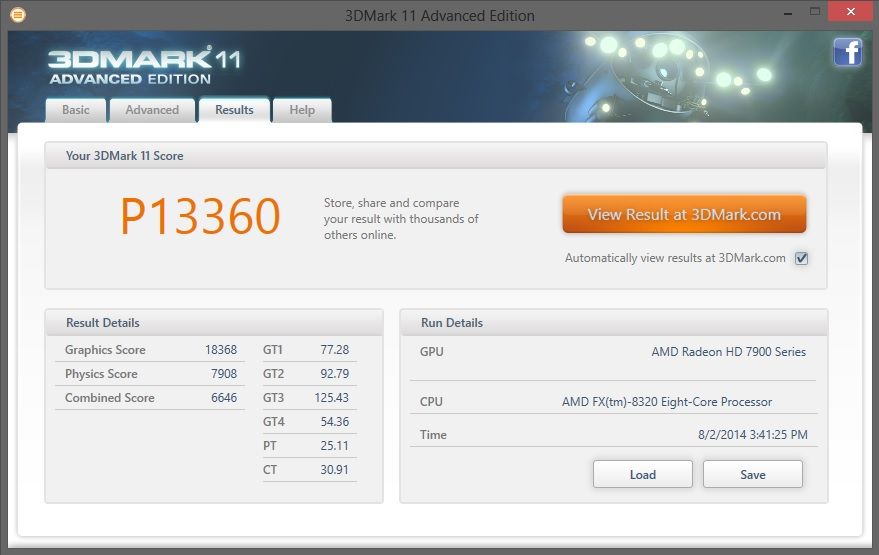

I start with some benchmarks. First up was 3Dmark 11

AMD result

And the Intel

And already strange things happen. The Intel got a higher physics score (which pertains to the CPU), yet even though the Intel also runs PCIE 3.0 (IB), it loses out overall. Very, very strange.

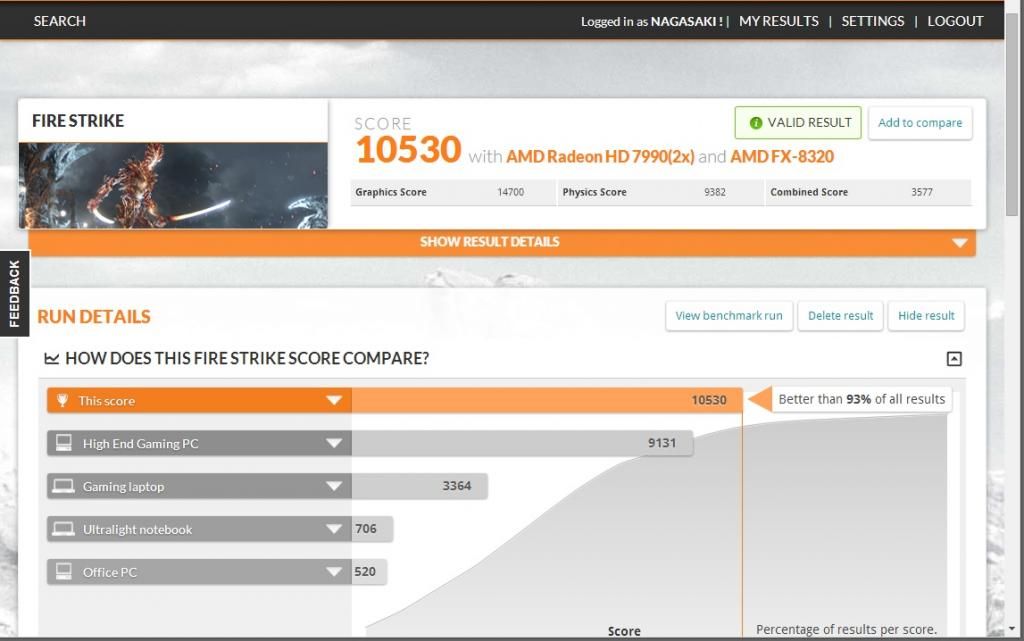

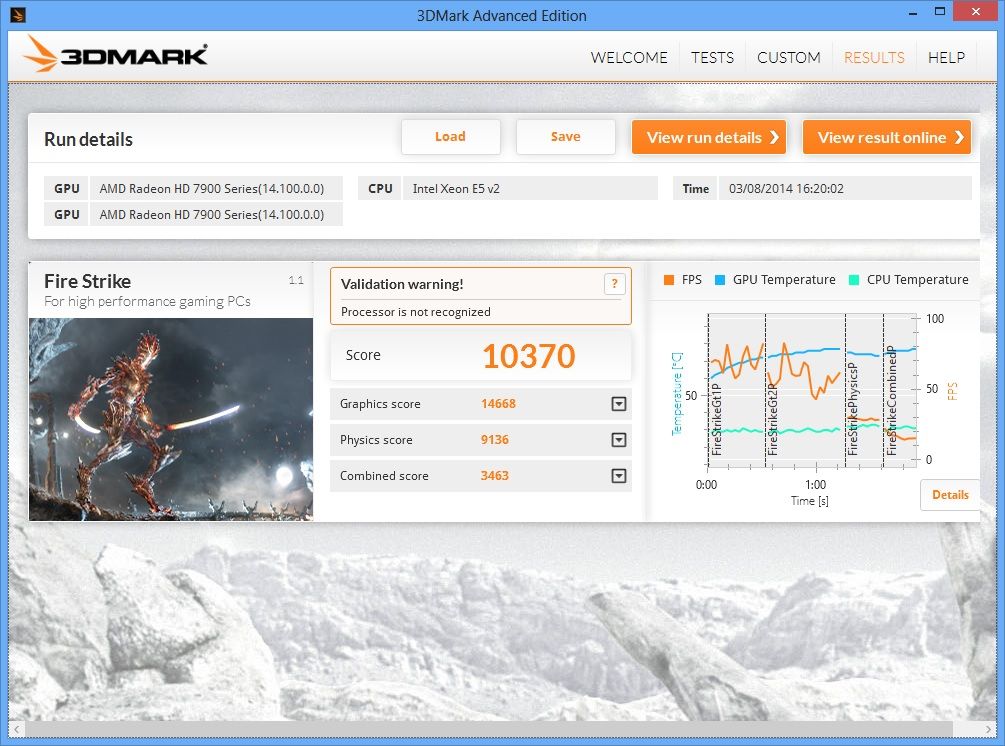

Then it was on to 3Dmark (13). AMD up first.

And then it was the Intel's turn.

TBH that's bloody awfully close. It's actually within the margin of error, but I promised myself before I began that I would not obsess over one benchmark and become sidetracked running it over and over again.

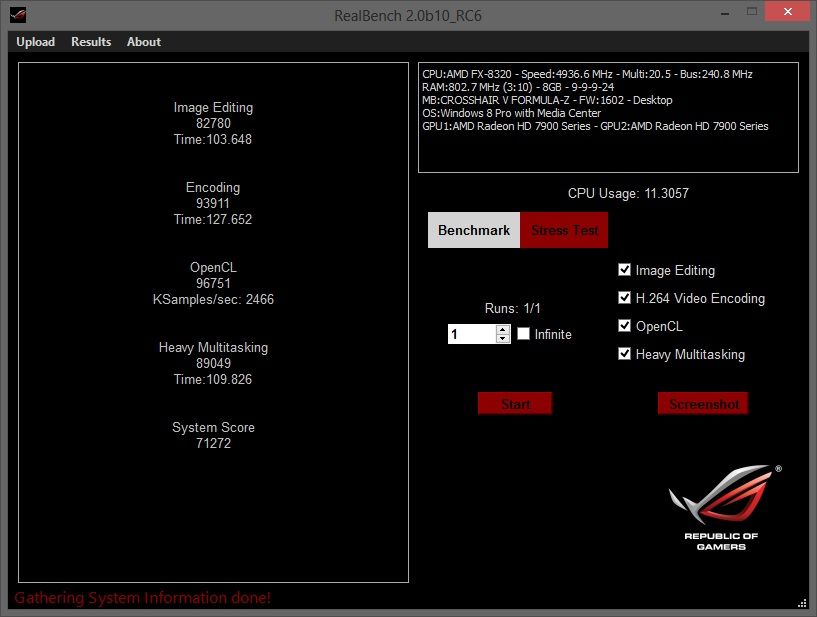

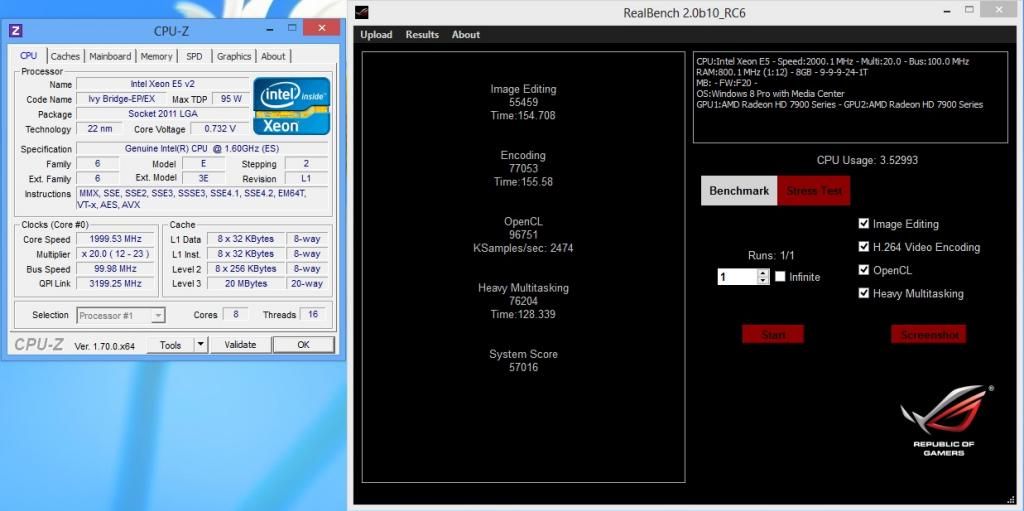

OK, so round three, Asus Realbench 2.0.

Interlude.

Asus Realbench is *the* most accurate benchmark I have ever run in my entire life. Instead of making their own synthetic, unrealistic benchmark, they simply took a bunch of programs and mashed them together. This way the results are actual real world results. As an example, test one is GIMP image editing. Then it uses Handbrake and other benchmarks to actually gain a good idea of what a system is capable of.

This is also the toughest benchmark I have ever run. I can run Firestrike all day long, but Realbench absolutely tortures a rig to the breaking point.

I ended up having to remove the side of the AMD rig and aim a floor standing fan at it to get it through.

So here is the AMD result.

And the Intel result.

Wow. Now this one truly knocked me sideways. I never expected the AMD rig to win on IPC alone (GIMP). Even an i7 920 runs the AMD close in GIMP, but the AMD absolutely trumped the Intel all the way through.

And this, lads and ladies, is why Asus make very high end boards for these chips. Simply put, as Bindi (an employee of Asus) points out, the AMDs are actually very good CPUs.

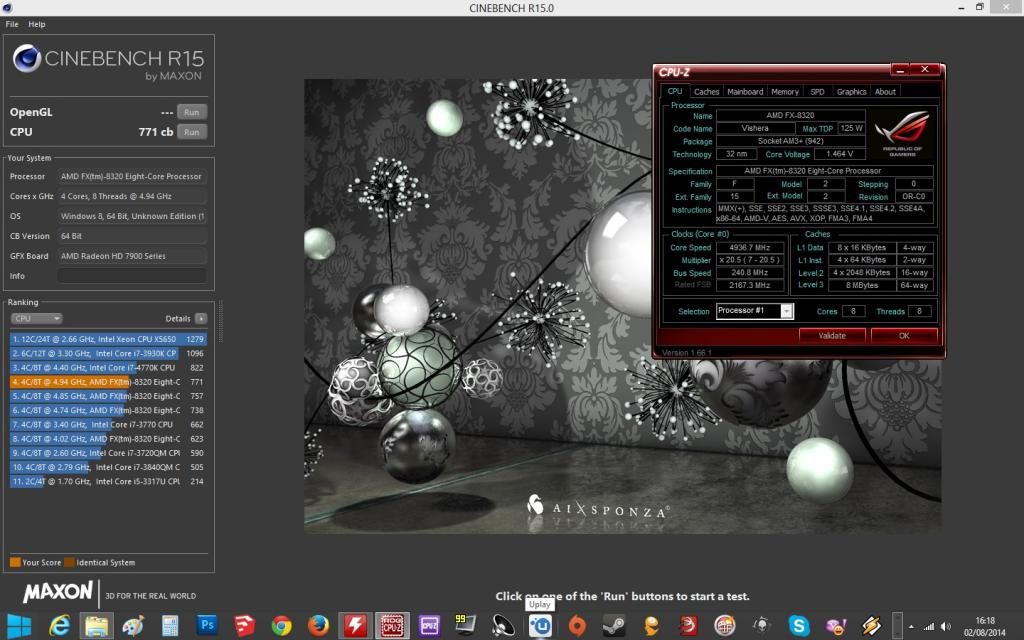

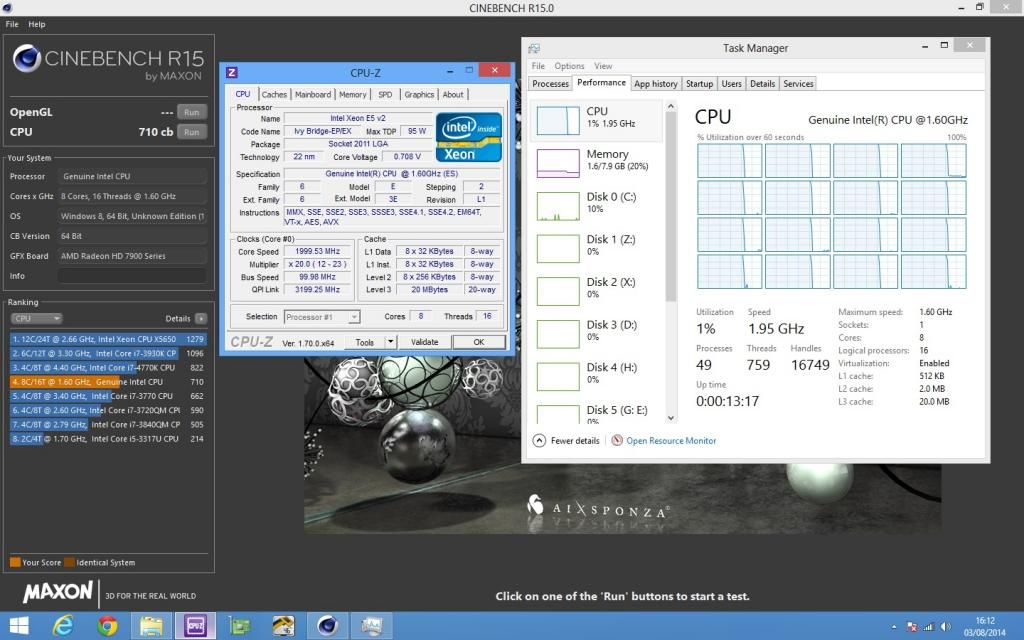

Then it was on to Cinebench, and another surprise.

AMD

And then Intel.

The surprise? Not that AMD won. I was actually very impressed with the Intel's performance, given it is clearly running at less than half of the speed it's actually capable of. I hazard a guess that this CPU could actually double that speed if unlocked and overclocked, which does make me a teeny bit excited about Haswell E.
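To put a rough number on that guess: if you assume (and it is a big assumption) that a multithreaded score scales in a straight line with clock speed, the estimate is just a ratio. The function name, the 4.0 GHz target and the score of 100 below are placeholders of mine, so plug in the real Cinebench score from the screenshot.

```python
def scaled_score(score, current_ghz, target_ghz):
    """Estimate a benchmark score at target_ghz.

    Assumes perfectly linear scaling with clock speed, which is a
    best-case simplification rather than a measured behaviour.
    """
    if current_ghz <= 0 or target_ghz <= 0:
        raise ValueError("clock speeds must be positive")
    return score * target_ghz / current_ghz

# Placeholder score of 100 at 2 GHz, projected to a hypothetical 4 GHz.
print(scaled_score(100.0, 2.0, 4.0))  # 200.0
```

Real chips usually scale a bit worse than this once memory and other bottlenecks kick in, so treat the output as an upper bound.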

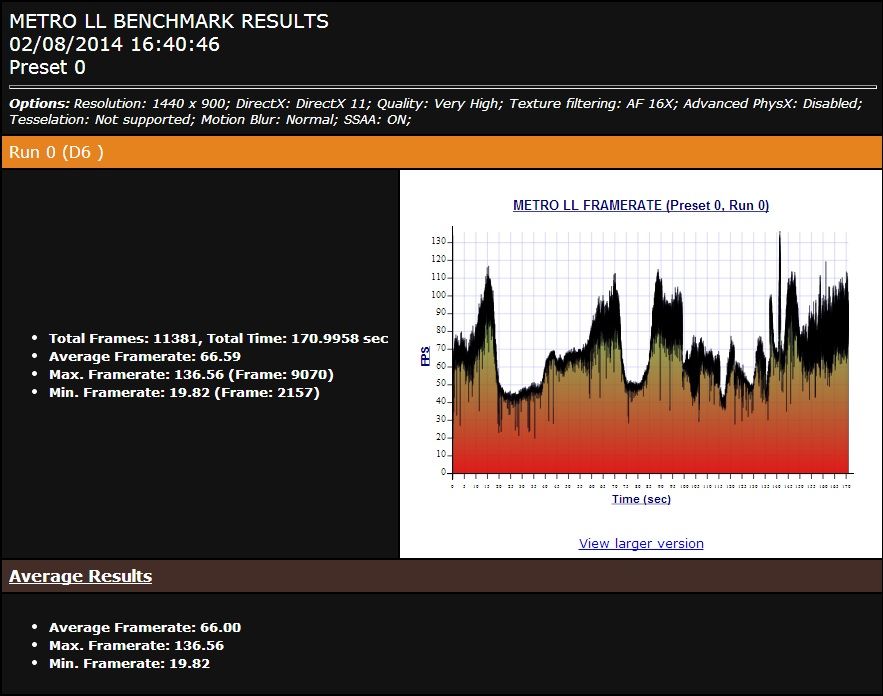

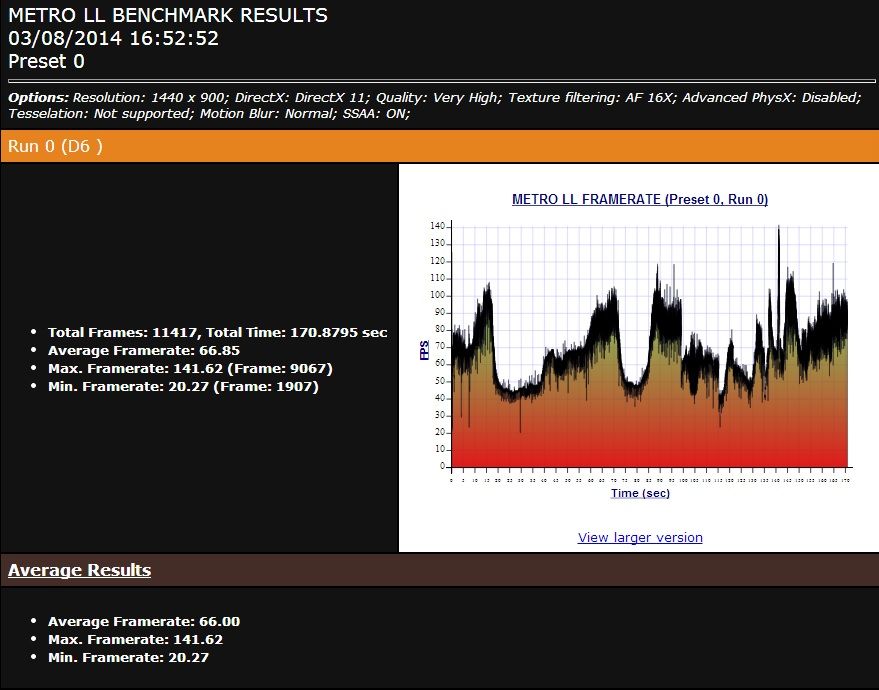

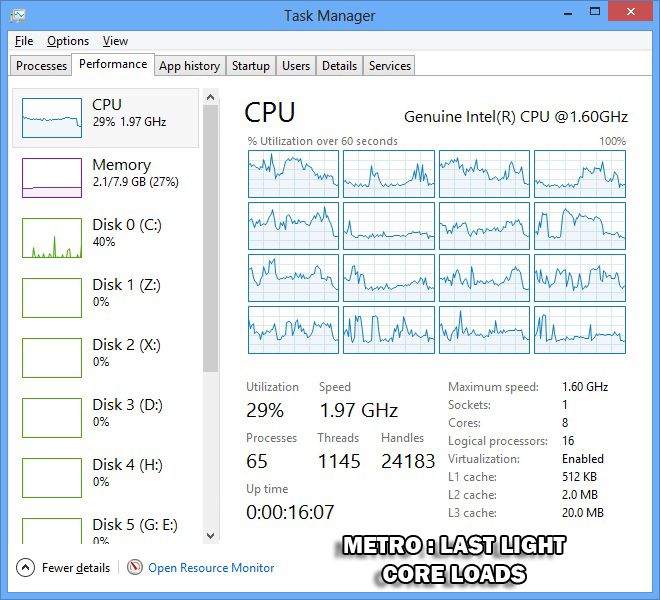

OK, so no set of benchmarks would be complete without at least one game. I decided to choose Metro: Last Light. You'll see why later when I get onto the part about core use, but here is the AMD's result.

And the Intel.

And it was finally victory to the Intel. Not by much, but Metro clearly absolutely loves the cores and wants as many as you can throw at it.

Due to this result I decided to keep the Intel. There are other reasons of course, but this played a part.

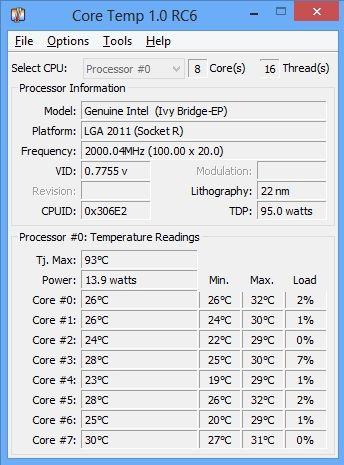

Less than 14 watts idle, and I had real trouble making it use more than 90w under load. Temps are always under 40c no matter what, which means the rig is now very quiet.
